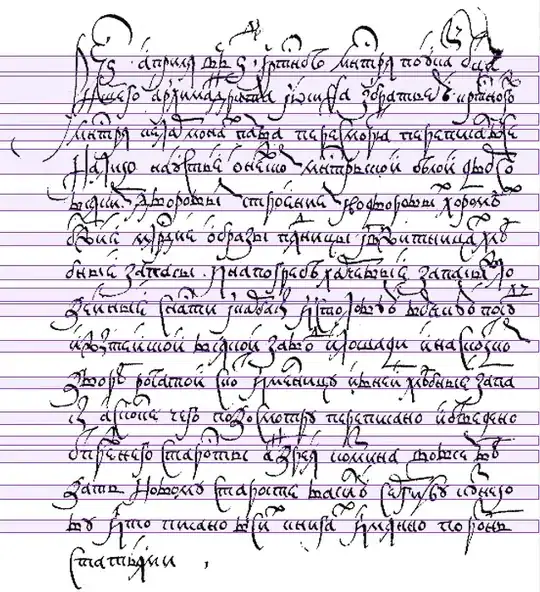

I want to automatically divide an image of ancient handwritten text by lines (and by words in future).

The first obvious part is preprocessing the image...

I'm just using a simple digitization (based on brightness of pixel). After that I store data into two-dimensional array.

The next obvious part is analyzing the binary array.

My first algorithm was pretty simple - if there are more black pixels in a row of the array than the root-mean-square of Maximum and Minimum value, then this row is part of line.

After forming the list of lines I cut off lines with height that is less than average. Finally it turned out into some kind of linear regression, trying to minimize the difference between the blank rows and text rows. (I assumed that fact)

My second attempt - I tried to use GA with several fitness functions. The chromosome contained 3 values - xo, x1, x2. xo [-1;0] x1 [0;0.5] x2 [0;0.5]

Function, that determines identity the row to line is (xo + α1 x1 + α2 x2) > 0, where α1 is scaled sum of black pixels in row, α2 is median value of ranges between the extreme black pixels in row. (a1,a2 [0,1])

Another functions, that I tried is (x1 < α1 OR x2 > α2) and (1/xo + [a1 x1] / [a2 x2] ) > 0

The last function is the most efficient.

The fitness function is

(1 / (HeigthRange + SpacesRange)

The fitness function is

(1 / (HeigthRange + SpacesRange)

Where range is difference between maximum and minimum. It represents the homogeneity of text. The global optimum of this function - the most smooth way to divide the image into lines.

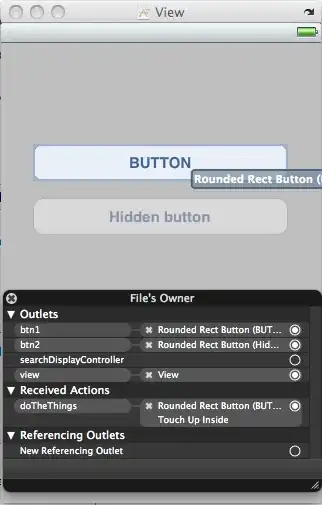

I am using C# with my self-coded GA (classical, with 2-point crossover, gray-code chromosomes, maximum population is 40, mutation rate is 0.05)

Now I ran out of ideas how to divide this image into lines with ~100% accuracy.

What is the efficient algorithm to do this?

UPDATE: Original BMP (1.3 MB)

UPDATE2:

Improved results on this text to 100%

How I did it:

- fixed minor bug in range count

- changed fitness function to 1/(distancesRange+1)*(heightsRange+1))

- minimized classifying function to (1/xo + x2/range) > 0 (points in row now don't affect classification) (i.e. optimized input data and made fitness function optimizations more explicit)

Problem:

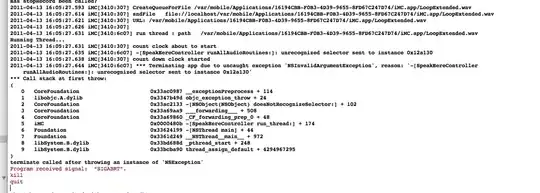

GA surprisingly failed to recognize this line. I looked at debug data of 'find rages' function and found, that there is too much noise in 'unrecognized' place. The function code is below:

public double[] Ranges()

{

var ranges = new double[_original.Height];

for (int y = 0; y < _original.Height; y++ )

{

ranges[y] = 0;

var dx = new List<int>();

int last = 0;

int x = 0;

while (last == 0 && x<_original.Width)

{

if (_bit[x, y])

last = x;

x++;

}

if (last == 0)

{

ranges[y] = 0;

continue;

}

for (x = last; x<_original.Width; x++)

{

if (!_bit[x, y]) continue;

if (last != x - 1)

{

dx.Add((x-last)+1);

}

last = x;

}

if (dx.Count > 2)

{

dx.Sort();

ranges[y] = dx[dx.Count / 2];

//ranges[y] = dx.Average();

}

else

ranges[y] = 0;

}

var maximum = ranges.Max();

for (int i = 0; i < ranges.Length; i++)

{

if (Math.Abs(ranges[i] - 0) < 0.9)

ranges[i] = maximum;

}

return ranges;

}

I'm using some hacks in this code. The main reason - I want to minimize the range between nearest black pixels, but if there are no pixels, the value becomes '0', and it becomes impossible to solve this problem with finding optimas. The second reason - this code is changing too frequently. I'll try to fully change this code, but I have no idea how to do it.

Q:

- If there is more efficient fitness function?

- How to find more versatile determination function?