I wish to construct a pipeline using multiple streams. Below is the code I have written:

using namespace std;

__global__ void vecAdd(float *c, const float *a, const float *b);

void initBuffer(float *data, int size);

int main() {

int size = 1 << 22;

int bufsize = size * sizeof(float);

int nStream = 4;

float* ha[4];

float* hb[4];

float* hc[4];

float* da[4];

float* db[4];

float* dc[4];

srand(2019);

for (int i = 0; i < 4; ++i) {

cudaMallocHost((void **) &ha[i], bufsize);

cudaMallocHost((void **) &hb[i], bufsize);

cudaMallocHost((void **) &hc[i], bufsize);

initBuffer(ha[i], size);

initBuffer(hb[i], size);

cudaMalloc((void **) &da[i], bufsize);

cudaMalloc((void **) &db[i], bufsize);

cudaMalloc((void **) &dc[i], bufsize);

}

auto *streams = new cudaStream_t[nStream];

for (int i = 0; i < nStream; i++) {

cudaStreamCreate(&streams[i]);

}

for (int i = 0; i < nStream; i++) {

cudaMemcpyAsync(da[i], ha[i], bufsize, cudaMemcpyHostToDevice, streams[i]);

cudaMemcpyAsync(db[i], hb[i], bufsize, cudaMemcpyHostToDevice, streams[i]);

dim3 dimBlock(256);

dim3 dimGrid(size / dimBlock.x);

vecAdd<<< dimGrid, dimBlock, 0, streams[i] >>>(dc[i], da[i], db[i]);

cudaMemcpyAsync(hc[i], dc[i], bufsize, cudaMemcpyDeviceToHost, streams[i]);

}

cudaDeviceSynchronize();

// terminate operators

delete[] streams;

for (int i = 0; i < 4; ++i) {

// terminate device memories

cudaFree(da[i]);

cudaFree(db[i]);

cudaFree(dc[i]);

// terminate host memories

cudaFreeHost(ha[i]);

cudaFreeHost(hb[i]);

cudaFreeHost(hc[i]);

}

return 0;

}

void initBuffer(float *data, const int size) {

for (int i = 0; i < size; i++)

data[i] = rand() / (float) RAND_MAX;

}

__global__ void vecAdd(float *c, const float *a, const float *b) {

unsigned int idx = blockIdx.x * blockDim.x + threadIdx.x;

for (int i = 0; i < 200; i++)

c[idx] = a[idx] + b[idx];

}

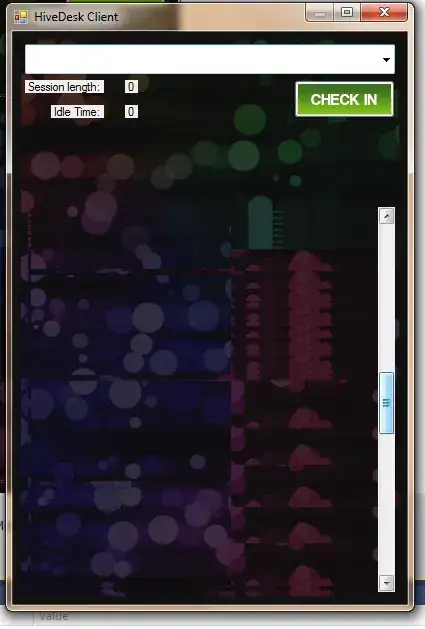

However, as shown in the diagram below, when I analyze it using Nsight Systems, it appears to be executed in a serial manner.

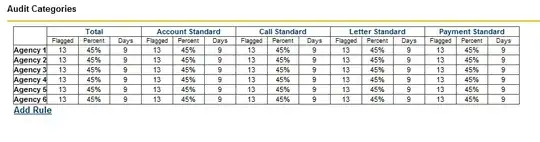

However, when I modify the code as shown below and rewrite the section related to D2H in a loop, it forms a pipeline. Why does this occur?

auto *streams = new cudaStream_t[nStream];

for (int i = 0; i < nStream; i++) {

cudaStreamCreate(&streams[i]);

}

for (int i = 0; i < nStream; i++) {

cudaMemcpyAsync(da[i], ha[i], bufsize, cudaMemcpyHostToDevice, streams[i]);

cudaMemcpyAsync(db[i], hb[i], bufsize, cudaMemcpyHostToDevice, streams[i]);

dim3 dimBlock(256);

dim3 dimGrid(size / dimBlock.x);

vecAdd<<< dimGrid, dimBlock, 0, streams[i] >>>(dc[i], da[i], db[i]);

// cudaMemcpyAsync(hc[i], dc[i], bufsize, cudaMemcpyDeviceToHost, streams[i]);

}

for (int i = 0; i < nStream; ++i)

cudaMemcpyAsync(hc[i], dc[i], bufsize, cudaMemcpyDeviceToHost, streams[i]);

cudaDeviceSynchronize();

I would appreciate someone pointing out the reason behind the inability of my initial code segment to form a pipeline.

I appreciate your feedback. After considering your comments, I conducted tests on four different devices. Initially, I ran the code on a 3060 GPU with Windows. Subsequently, I performed tests on a 2080TI GPU with Linux, a 3090 GPU with Linux, and an A100 GPU with Linux. Surprisingly, I discovered that the inability to establish a pipeline only occurred in the 3060-Windows environment. This discrepancy is quite perplexing. Here are the analysis results for each device:

- 3090-Linux: Pipeline successfully established.

- A100-Linux: Pipeline successfully established.