i'm facing some issues when trying to overlap computation and transferts on Windows (using VS2015 and CUDA 10.1). The code doesn't overlap at all. But the exact same code on Linux as the expected behaviour.

Here is the views from NVVP :

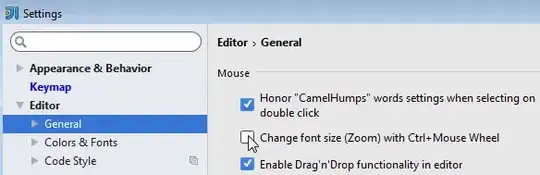

Windows 10 NVVP screenshot :

Linux NVVP screenshot :

Please note the following points :

- my host memory is PageLocked

- i'm using two different streams

- i'm using cudaMemcpyAsync method to transfert between host and device

- if i run my code on Linux, everything is fine

- i don't see anything in the documentation describing a different behaviour between there two systems.

So the question is the following :

Am i missing something ? Does it exists a way to achieve overlapping on this configuration (Windows 10 + 1080Ti)?

you can find some code here to reproduce the issue :

#include "cuda_runtime.h"

constexpr int NB_ELEMS = 64*1024*1024;

constexpr int BUF_SIZE = NB_ELEMS * sizeof(float);

constexpr int BLK_SIZE=1024;

using namespace std;

__global__

void dummy_operation(float* ptr1, float* ptr2)

{

const int idx = threadIdx.x + blockIdx.x * blockDim.x;

if(idx<NB_ELEMS)

{

float value = ptr1[idx];

for(int i=0; i<100; ++i)

{

value += 1.0f;

}

ptr2[idx] = value;

}

}

int main()

{

float *h_data1 = nullptr, *h_data2 = nullptr,

*h_data3 = nullptr, *h_data4 = nullptr;

cudaMallocHost(&h_data1, BUF_SIZE);

cudaMallocHost(&h_data2, BUF_SIZE);

cudaMallocHost(&h_data3, BUF_SIZE);

cudaMallocHost(&h_data4, BUF_SIZE);

float *d_data1 = nullptr, *d_data2 = nullptr,

*d_data3 = nullptr, *d_data4 = nullptr;

cudaMalloc(&d_data1, BUF_SIZE);

cudaMalloc(&d_data2, BUF_SIZE);

cudaMalloc(&d_data3, BUF_SIZE);

cudaMalloc(&d_data4, BUF_SIZE);

cudaStream_t st1, st2;

cudaStreamCreate(&st1);

cudaStreamCreate(&st2);

const dim3 threads(BLK_SIZE);

const dim3 blocks(NB_ELEMS / BLK_SIZE + 1);

for(int i=0; i<10; ++i)

{

float* tmp_dev_ptr = (i%2)==0? d_data1 : d_data3;

float* tmp_host_ptr = (i%2)==0? h_data1 : h_data3;

cudaStream_t tmp_st = (i%2)==0? st1 : st2;

cudaMemcpyAsync(tmp_dev_ptr, tmp_host_ptr, BUF_SIZE, cudaMemcpyDeviceToHost, tmp_st);

dummy_operation<<<blocks, threads, 0, tmp_st>>>(tmp_dev_ptr, d_data2);

//cudaMempcyAsync(d_data2, h_data2);

}

cudaStreamSynchronize(st1);

cudaStreamSynchronize(st2);

return 0;

}