The full code is also available in this notebook; choose Runtime -> Run all to reproduce the result.

import cv2
import matplotlib.pyplot as plt
import numpy as np
from PIL import Image
from scipy import interpolate
from scipy.spatial import distance
from shapely.geometry import LineString, GeometryCollection, MultiPoint
from skimage.morphology import skeletonize
from sklearn.decomposition import PCA
from warp import PiecewiseAffineTransform  # https://raw.githubusercontent.com/TimSC/image-piecewise-affine/master/warp.py

# Helper functions
def extendline(line, length):
    # return the point that lies `length` beyond b on the ray from a through b
    a = line[0]
    b = line[1]
    lenab = distance.euclidean(a, b)
    cx = b[0] + ((b[0] - a[0]) / lenab * length)
    cy = b[1] + ((b[1] - a[1]) / lenab * length)
    return [cx, cy]

def XYclean(x, y):
    xy = np.concatenate((x.reshape(-1, 1), y.reshape(-1, 1)), axis=1)
    # make PCA object
    pca = PCA(2)
    # fit on data
    pca.fit(xy)
    # transform into pca space
    xypca = pca.transform(xy)
    newx = xypca[:, 0]
    newy = xypca[:, 1]
    # sort
    indexSort = np.argsort(x)
    newx = newx[indexSort]
    newy = newy[indexSort]
    # add some more points (optional)
    f = interpolate.interp1d(newx, newy, kind='linear')
    newX = np.linspace(np.min(newx), np.max(newx), 100)
    newY = f(newX)
    # smooth with a filter (optional; needs `from scipy.signal import savgol_filter`)
    # window = 43
    # newY = savgol_filter(newY, window, 2)
    # return back to old coordinates
    xyclean = pca.inverse_transform(np.concatenate((newX.reshape(-1, 1), newY.reshape(-1, 1)), axis=1))
    xc = xyclean[:, 0]
    yc = xyclean[:, 1]
    return np.hstack((xc.reshape(-1, 1), yc.reshape(-1, 1))).astype(int)

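def contour2skeleton(cnt):
    # work in the contour's bounding box to keep the mask small
    x, y, w, h = cv2.boundingRect(cnt)
    cnt_trans = cnt - [x, y]
    bim = np.zeros((h, w))
    bim = cv2.drawContours(bim, [cnt_trans], -1, color=255, thickness=cv2.FILLED) // 255
    sk = skeletonize(bim > 0)
    #####
    skeleton_yx = np.argwhere(sk > 0)
    # flipping both axes turns (y, x) into (x, y); the reversed row order is
    # harmless because XYclean re-sorts the points
    skeleton_xy = np.flip(skeleton_yx, axis=None)
    xx, yy = skeleton_xy[:, 0], skeleton_xy[:, 1]
    skeleton_xy = XYclean(xx, yy)
    # shift back to full-image coordinates
    skeleton_xy = skeleton_xy + [x, y]
    return skeleton_xy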

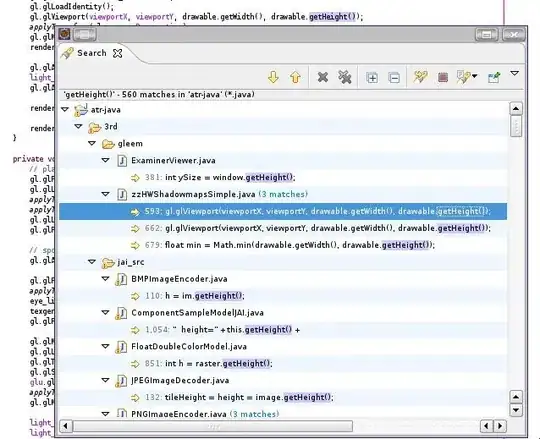

mm = cv2.imread('cont.png', cv2.IMREAD_GRAYSCALE)
plt.imshow(mm)
cnts, _ = cv2.findContours(mm.astype('uint8'), cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)
cont = cnts[0].reshape(-1, 2)
# find skeleton
sk = contour2skeleton(cont)
mm = np.zeros_like(mm)
cv2.polylines(mm, [sk], False, 255, 2)
plt.imshow(mm)

# simplify the skeleton
ln = LineString(sk).simplify(2)
# np.int0 was removed in NumPy 2.0; astype(int) truncates the same way
sk_simp = np.array(ln.coords).astype(int)
mm = np.zeros_like(mm)
for pt in sk_simp:
    cv2.circle(mm, pt, 5, 255, -1)
plt.imshow(mm)

# extend both ends of the skeleton
print(len(sk_simp))
a, b = sk_simp[1], sk_simp[0]
c1 = np.array(extendline([a, b], 50)).astype(int)
sk_simp = np.vstack([c1, sk_simp])
a, b = sk_simp[-2], sk_simp[-1]
c2 = np.array(extendline([a, b], 50)).astype(int)
sk_simp = np.vstack([sk_simp, c2])
print(len(sk_simp))
cv2.circle(mm, c1, 10, 255, -1)
cv2.circle(mm, c2, 10, 255, -1)
plt.imshow(mm)

########
# find the target points
########
pts1 = sk_simp.copy()
dists = [distance.euclidean(p1, p2) for p1, p2 in zip(pts1[:-1], pts1[1:])]
zip1 = list(zip(pts1[:-1], dists))
# find the first 2 target points
a = pts1[0]
b = a - (dists[0], 0)
pts2 = [a, b, ]
# place each remaining target point on the line through a and the last point,
# at the same distance as the corresponding skeleton segment
for z in zip1[1:]:
    lastpt = pts2[-1]
    pt, dst = z
    ln = [a, lastpt]
    c = extendline(ln, dst)
    pts2.append(c)
pts2 = np.array(pts2).astype(int)
ln1 = LineString(pts1)
ln2 = LineString(pts2)
GeometryCollection([ln1.buffer(5), ln2.buffer(5),
                    MultiPoint(pts2), MultiPoint(pts1)])

########
# create translated copies of source and target points
# 50 is arbitrary
pts1 = np.vstack([pts1 + [0, 50], pts1 + [0, -50]])
pts2 = np.vstack([pts2 + [0, 50], pts2 + [0, -50]])
MultiPoint(pts1)

########
# performing the warping
im = Image.open('orig.png')
dstIm = Image.new(im.mode, im.size, color=(255, 255, 255))
# Perform transform
PiecewiseAffineTransform(im, pts1, dstIm, pts2)
plt.figure(figsize=(10, 10))
plt.imshow(dstIm)

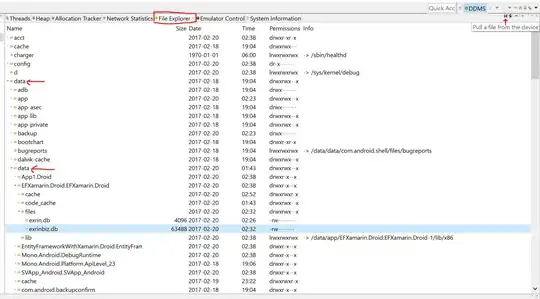

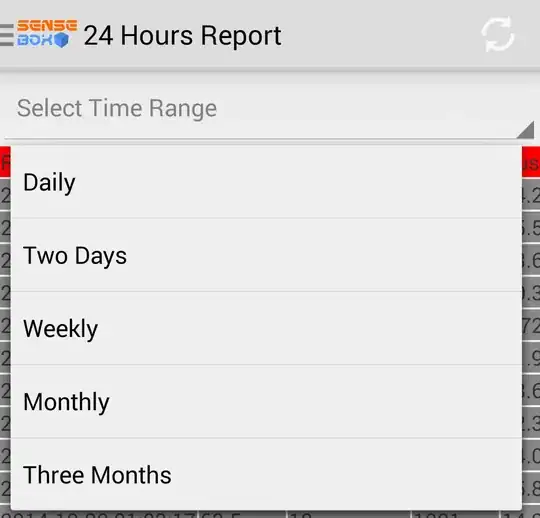

1- Find the medial axis, e.g. using skimage.morphology.skeletonize, and simplify it, e.g. using shapely's object.simplify. I used a tolerance of 2. The medial axis points are shown in white:

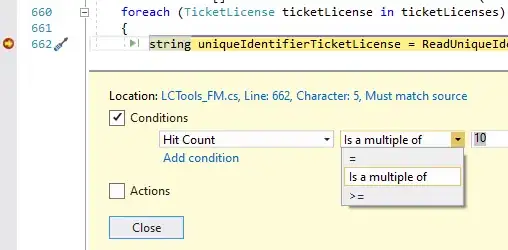

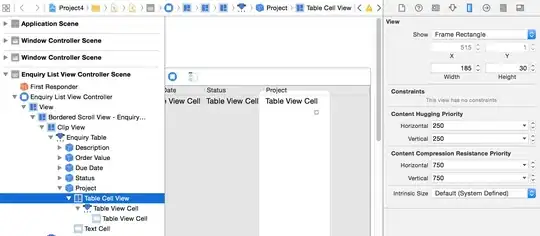
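As a rough illustration of step 1, here is a minimal sketch on a synthetic mask rather than the real contour. The bar-shaped mask and the variable names are made up for the example, and the sort by x assumes a curve that does not double back horizontally (the full code above handles ordering through PCA instead).

```python
import numpy as np
from shapely.geometry import LineString
from skimage.morphology import skeletonize

# Synthetic binary mask: a thick horizontal bar standing in for a filled contour.
mask = np.zeros((40, 120), dtype=bool)
mask[15:25, 10:110] = True

# One-pixel-wide medial axis of the mask.
skeleton = skeletonize(mask)

# argwhere returns (row, col) = (y, x); swap the columns to get (x, y),
# then order the points by x so they form a polyline.
yx = np.argwhere(skeleton)
xy = yx[:, ::-1]
xy = xy[np.argsort(xy[:, 0])]

# Douglas-Peucker simplification with a tolerance of 2 pixels.
simplified = np.array(LineString(xy).simplify(2).coords)
print(len(xy), "skeleton points ->", len(simplified), "after simplify")
```

A larger tolerance leaves fewer control points, which makes the later warp coarser but faster.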

2- Find the corresponding points on a straight line, using the distance between each point and the next:

3- I also added extra points at both ends, colored blue, so that the points span the entire contour length:

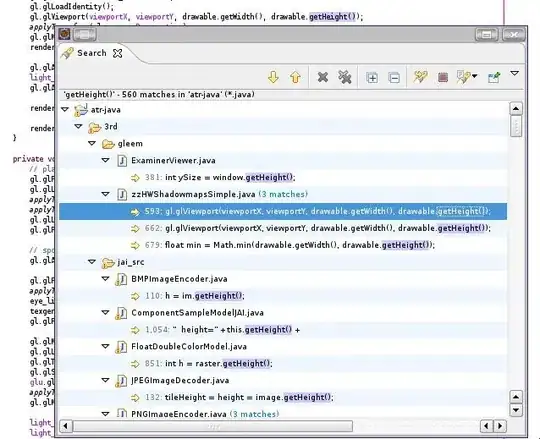
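Step 2 amounts to laying the points out along a horizontal line at their cumulative arc length. The sketch below is an equivalent vectorized formulation, not the loop from the full code; `straighten_points` and its `direction` parameter are hypothetical names introduced here (the full code lays the points out towards negative x, which corresponds to `direction=-1`).

```python
import numpy as np

def straighten_points(points, direction=1.0):
    """Map polyline points onto a horizontal line, preserving segment lengths."""
    points = np.asarray(points, dtype=float)
    # Length of each segment between consecutive points.
    seg = np.linalg.norm(np.diff(points, axis=0), axis=1)
    # Cumulative arc length, starting at 0 for the first point.
    arc = np.concatenate([[0.0], np.cumsum(seg)])
    # Keep the first point fixed and spread the rest along its row.
    x = points[0, 0] + direction * arc
    y = np.full(len(points), points[0, 1])
    return np.column_stack([x, y])

src = np.array([[0, 0], [3, 4], [6, 8], [6, 13]])
dst = straighten_points(src)
print(dst)  # segments of length 5 each -> x = 0, 5, 10, 15 on the row y = 0
```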

4- Create 2 copies of the source and target points, one translated up and the other translated down (I chose an offset of 50 here), so that the source points now look like this. Please note that a simple upward/downward displacement may not be the best approach for all contours, e.g. if the contour curves by more than 45 degrees:

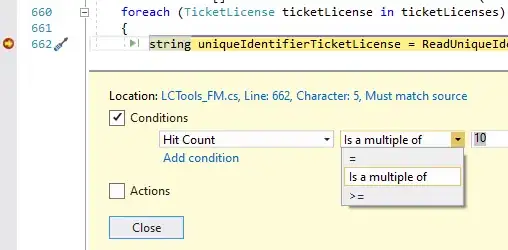
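For strongly curved contours, one possible alternative to the vertical shift is to offset each source point along the local normal of the skeleton. This is only a sketch of that idea, not what the full code does; `offset_along_normals` is a hypothetical helper, and it assumes consecutive points are distinct (a repeated point would give a zero-length tangent).

```python
import numpy as np

def offset_along_normals(points, offset):
    """Return two copies of a polyline, shifted by +/- offset along its normals."""
    points = np.asarray(points, dtype=float)
    # Tangent estimate: central differences inside, one-sided at the ends.
    tangents = np.gradient(points, axis=0)
    tangents /= np.linalg.norm(tangents, axis=1, keepdims=True)
    # Rotating a unit tangent (tx, ty) by 90 degrees gives the normal (-ty, tx).
    normals = np.column_stack([-tangents[:, 1], tangents[:, 0]])
    return points + offset * normals, points - offset * normals

line = np.array([[0, 0], [10, 0], [20, 0]])
side_a, side_b = offset_along_normals(line, 5)
print(side_a)
print(side_b)
```

On a horizontal line the normals are vertical, so for the straightened target points this reduces to the same up/down translation used above.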

5- Using the warp.py code linked in the imports, perform PiecewiseAffineTransform with the source and target points. Here's the result; it's straight enough:
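If pulling in warp.py is not convenient, scikit-image ships its own class of the same name, which should be usable for this step. Note that this is a different implementation from the one used in the full code, and the toy image and control points below are invented for the example. The main thing to watch is the direction: skimage's warp expects a mapping from output coordinates to input coordinates.

```python
import numpy as np
from skimage.transform import PiecewiseAffineTransform, warp

# Synthetic 20x20 image whose intensity grows from left to right.
image = np.tile(np.arange(20) / 20.0, (20, 1))

# Control points as (x, y). `straight` lives in the output image and
# `curved` in the input image; here the "curve" is just a 2-pixel shift.
straight = np.array([[0, 0], [5, 0], [10, 0],
                     [0, 10], [5, 10], [10, 10]], dtype=float)
curved = straight + [2, 0]

# warp() needs a map from output coordinates to input coordinates,
# so the transform is estimated from the straight points to the curved ones.
tform = PiecewiseAffineTransform()
tform.estimate(straight, curved)

# Pixels outside the triangulated control points are filled with 0.
out = warp(image, tform, output_shape=image.shape)
print(out[5, 3], image[5, 5])
```

In the real pipeline, `straight` would be the stacked target points and `curved` the stacked source points from step 4.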