I have an image of a table (`Image.size = (328, 231)`) and the bounding boxes of the text in that image, stored in a dictionary.

The contents of the dictionary are:

{"bounding_boxes": [{"class": "text", "box": [0.0, 0.0, 53.94900093453785, 18.455579291781646]}, {"class": "text", "box": [53.94900093453785, 0.0, 184.96800320412976, 51.735807141009545]}, {"class": "text", "box": [184.96800320412976, 0.0, 256.199368074407, 51.735807141009545]}, {"class": "text", "box": [256.199368074407, 0.0, 328.0, 51.735807141009545]}, {"class": "text", "box": [0.0, 18.455579291781646, 53.94900093453785, 76.14130756377666]}, {"class": "text", "box": [53.94900093453785, 51.735807141009545, 184.96800320412976, 76.14130756377666]}, {"class": "text", "box": [184.96800320412976, 51.735807141009545, 256.199368074407, 76.14130756377666]}, {"class": "text", "box": [256.199368074407, 51.735807141009545, 328.0, 76.14130756377666]}, {"class": "text", "box": [0.0, 76.14130756377666, 53.94900093453785, 105.33448988766077]}, {"class": "text", "box": [53.94900093453785, 76.14130756377666, 184.96800320412976, 115.66887643031575]}, {"class": "text", "box": [184.96800320412976, 76.14130756377666, 256.199368074407, 115.66887643031575]}, {"class": "text", "box": [256.199368074407, 76.14130756377666, 328.0, 115.66887643031575]}, {"class": "text", "box": [0.0, 105.33448988766077, 53.94900093453785, 146.28279243942194]}, {"class": "text", "box": [53.94900093453785, 115.66887643031575, 184.96800320412976, 146.28279243942194]}, {"class": "text", "box": [184.96800320412976, 115.66887643031575, 256.199368074407, 146.28279243942194]}, {"class": "text", "box": [256.199368074407, 115.66887643031575, 328.0, 146.28279243942194]}, {"class": "text", "box": [0.0, 146.28279243942194, 53.94900093453785, 175.9430656804882]}, {"class": "text", "box": [53.94900093453785, 146.28279243942194, 184.96800320412976, 201.86661158409726]}, {"class": "text", "box": [184.96800320412976, 146.28279243942194, 256.199368074407, 201.86661158409726]}, {"class": "text", "box": [256.199368074407, 146.28279243942194, 328.0, 201.86661158409726]}, {"class": "text", "box": [0.0, 175.9430656804882, 
53.94900093453785, 231.0]}, {"class": "text", "box": [53.94900093453785, 201.86661158409726, 184.96800320412976, 219.67445280166658]}, {"class": "text", "box": [184.96800320412976, 201.86661158409726, 256.199368074407, 219.67445280166658]}, {"class": "text", "box": [256.199368074407, 201.86661158409726, 328.0, 219.67445280166658]}, {"class": "text", "box": [53.94900093453785, 219.67445280166658, 184.96800320412976, 231.0]}, {"class": "text", "box": [184.96800320412976, 219.67445280166658, 256.199368074407, 231.0]}, {"class": "text", "box": [256.199368074407, 219.67445280166658, 328.0, 231.0]}]}

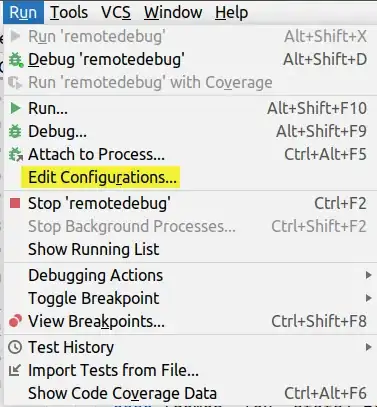

After plotting the bounding boxes from the JSON data above, the image looks like this:

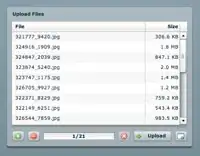

However, I want to shrink these boxes so that each one tightly encloses its text, like in the image below

I have tried `cv2.boundingRect()` with some image thresholding, but it didn't work (based on "How to remove extra whitespace from image in opencv?"):

    import cv2
    import numpy as np

    data = <<The dictionary from above>>
    new_boxes = list()

    img = cv2.imread('sam.png')
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    # Inverted threshold: dark (text) pixels become 255, background becomes 0
    gray = 255*(gray < 128).astype(np.uint8)

    for item in data["bounding_boxes"]:
        xmin = int(item['box'][0])
        ymin = int(item['box'][1])
        xmax = int(item['box'][2])
        ymax = int(item['box'][3])

        # Crop the cell and take the bounding rectangle of its non-zero pixels
        crop_img = gray[ymin:ymax, xmin:xmax]
        coords = cv2.findNonZero(crop_img)
        # Note: x, y are relative to crop_img, not to the full image
        x, y, w, h = cv2.boundingRect(coords)
        new_box = [x, y, w+x, h+y]
        new_boxes.append(new_box)

Any suggestions are welcome. Thanks!
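
One thing that may explain the result: `cv2.boundingRect` is applied to a crop, so the `x, y` it returns are relative to that crop and need the crop's `xmin, ymin` added back before they can be drawn on the full image. Below is a minimal sketch of that correction, under a few assumptions. It uses NumPy's `np.nonzero` in place of `cv2.findNonZero` / `cv2.boundingRect` so that it runs without OpenCV, it assumes the text is darker than the background, and the names `tighten_boxes`, `thresh`, and `inset` are hypothetical, not part of any library. The optional `inset` is there because the boxes tile the image edge to edge, so if the table has ruled lines they probably lie on the box borders and would otherwise be counted as text.

```python
import numpy as np

def tighten_boxes(gray, boxes, thresh=128, inset=0):
    """Shrink each [xmin, ymin, xmax, ymax] box to the dark pixels inside it.

    gray   : 2-D uint8 array (grayscale image, dark text on light background)
    boxes  : iterable of [xmin, ymin, xmax, ymax] in full-image coordinates
    thresh : pixels below this value count as "ink" (assumed value)
    inset  : pixels to skip on every side of a box, e.g. to ignore grid lines
    """
    tight = []
    for xmin, ymin, xmax, ymax in boxes:
        x0, y0, x1, y1 = int(xmin), int(ymin), int(xmax), int(ymax)
        # Origin of the (possibly inset) crop in full-image coordinates.
        cx, cy = x0 + inset, y0 + inset
        mask = gray[cy:y1 - inset, cx:x1 - inset] < thresh
        ys, xs = np.nonzero(mask)
        if xs.size == 0:
            # No ink found (or crop is empty): keep the original box.
            tight.append([x0, y0, x1, y1])
            continue
        # Add the crop origin back so the box is in full-image coordinates;
        # +1 makes xmax/ymax exclusive, matching slice conventions.
        tight.append([cx + int(xs.min()), cy + int(ys.min()),
                      cx + int(xs.max()) + 1, cy + int(ys.max()) + 1])
    return tight
```

With the variables from the question, this could be called as `tighten_boxes(cv2.cvtColor(img, cv2.COLOR_BGR2GRAY), [item["box"] for item in data["bounding_boxes"]], inset=2)`. Note that it takes the plain grayscale image, not the thresholded one, since it applies `thresh` itself. Suitable values for `thresh` and `inset` depend on the image and will likely need some trial and error.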