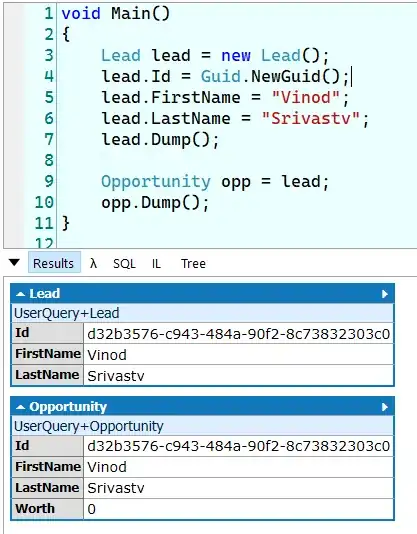

I'm having the following issue with Dask. I noticed that the same computations take longer and longer as time passes. After I restart scheduler, the computations are fast again, and just keep slowing down. The figure below shows the time consumed by my computations (I run them in a loop. The wider white spaces come from the fact that I run the loop a couple of times with breaks between them). I also noticed that the CPU usage of each worker goes down with time.

When I look at Dask dashboard, I see that CPU consumption by workers goes down with time - it starts with usually 90-100% in the first iterations and ends with 0-20% in last ones. Also the task stream seems much sparser in late iterations:

Reproducible example

I use dask container daskdev/dask-notebook (Python 3.8, dask 2021.4.0, dask-core 2021.4.0, distributed 2021.4.0, numpy 1.18.1, h5py 3.2.1). In that container I run 2 jupyter notebooks - one for running scheduler, and the other for running computations.

Prepare data

In one of the notebooks run the following to create data.

import h5py

import numpy as np

path = "/home/jovyan/data.h5"

x = np.random.randint(0, 2**15, size=(4096, 150000), dtype=np.int16)

with h5py.File(path, "w") as f:

f.create_dataset("/x", data=x, chunks=(32, 150000))

Run computations

In notebook 1 start the scheduler:

from dask.distributed import Client

c = Client()

c

My machine has 4 cores (8 threads) and 16GB ram, so this creates 4 workers with 2 threads and 3.8GB ram each.

In notebook 2 connect to that scheduler:

from dask.distributed import Client

address = "127.0.0.1:38835" # port based on output from notebook 1

c = Client(address, set_as_default=True)

and benchmark simple computation (load integers from disk, then compute fft on partitions, take absolute value, and finally sum over one of the axes):

from datetime import datetime

from time import sleep

import gc

import numpy as np

import h5py

import dask.array as da

def process(path):

f = h5py.File(path)

d = f['/x']

darr = da.from_array(d, chunks=d.chunks)

mapped = darr.map_blocks(np.fft.fft)

absolute = da.absolute(mapped)

reduced = absolute.mean(axis=-1)

return reduced.compute()

n_iterations = 20

log_file = "benchmark.csv"

delay_between_iterations = 5

path = "/home/jovyan/data.h5"

with open(log_file, "w") as file:

file.write("StartTime,ExecTime\n")

for i in range(n_iterations):

print(f"{i+1}th iteration")

gc.collect()

start = datetime.now()

process(path)

end = datetime.now()

exec_time = (end - start).total_seconds()

with open(log_file, "a") as f:

f.write(f"{start},{exec_time}\n")

sleep(delay_between_iterations)

The generated csv file is plotted above, in the beginning of this post.

Emitted warning messages

After I come back to the notebook 1 (with scheduler started) and stop scheduler c.close() I see a bunch of warnings:

distributed.utils_perf - WARNING - full garbage collections took 41% CPU time recently (threshold: 10%) distributed.utils_perf - WARNING - full garbage collections took 41% CPU time recently (threshold: 10%) distributed.utils_perf - WARNING - full garbage collections took 41% CPU time recently (threshold: 10%) distributed.utils_perf - WARNING - full garbage collections took 42% CPU time recently (threshold: 10%)

Then, when I restart, still in notebook 1, c = Client(), I see some warnings as below, and more of them appearing as computations in notebook 2 run.

/opt/conda/lib/python3.8/site-packages/bokeh/application/application.py:146: RuntimeWarning: coroutine '_needs_document_lock.._needs_document_lock_wrapper' was never awaited static_paths = {h.static_path() for h in self.handlers} Task was destroyed but it is pending! task: <Task pending name='Task-27802557' coro=<_needs_document_lock.._needs_document_lock_wrapper() running at /opt/conda/lib/python3.8/site-packages/bokeh/server/session.py:51> cb=[multi_future..callback() at /opt/conda/lib/python3.8/site-packages/tornado/gen.py:520]> Task was destroyed but it is pending! task: <Task pending name='Task-27802558' coro=<_needs_document_lock.._needs_document_lock_wrapper() running at /opt/conda/lib/python3.8/site-packages/bokeh/server/session.py:51> cb=[multi_future..callback() at /opt/conda/lib/python3.8/site-packages/tornado/gen.py:520]>

Additionally after executing some code in Notebook 1 (with scheduler), I keep getting warnings below previously run cell:

distributed.utils_perf - WARNING - full garbage collections took 43% CPU time recently (threshold: 10%) distributed.utils_perf - WARNING - full garbage collections took 43% CPU time recently (threshold: 10%) distributed.utils_perf - WARNING - full garbage collections took 43% CPU time recently (threshold: 10%)

Summary

My main problem is that the computations slow down. The emitted warnings may just be useful as a clues.

Thank you for your help in advance.

Possibly related post: Dask: why has CPU usage suddenly dropped?