I'm running Monte Carlo simulations for a model and figured that Dask could be quite useful for this purpose. For the first 35 hours or so, things were running quite "smoothly" (apart from the fan noise giving the impression that the computer was about to take off). Each model run took about 2 seconds, and 8 partitions were running it in parallel. Activity Monitor was showing 8 python3.6 instances.

However, the computer has now gone "silent" and CPU usage (as displayed in Spyder) hardly exceeds 20%. Model runs are happening sequentially (not in parallel) and taking about 4 seconds each. This happened at some point today while I was working on other things. I understand that, depending on the sequence of operations, Dask won't always use all cores at the same time. However, in this case there is really just one task to be performed (see further below), so one would expect all partitions to run and finish more or less simultaneously. Edit: the whole setup has run successfully for 10,000 simulations in the past; the difference now is that there are nearly 500,000 simulations to run.

Edit 2: it has now shifted to processing 2 partitions in parallel (instead of the previous 1 and the original 8). Something appears to be changing how many partitions are processed simultaneously.

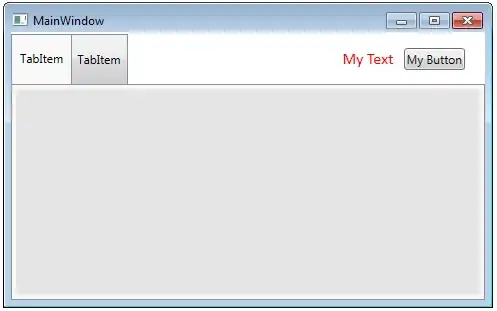

Edit 3: Following recommendations, I used a `dask.distributed.Client` to track what is happening and ran it for the first 400 rows. An illustration of what it looks like after completing is included below. I am struggling to understand the x-axis labels; hovering over the rectangles shows about 143 s.

Some questions therefore are:

- Is there any relationship between running other software (Chrome, MS Word) and having the computer "take back" some CPU from python?

- Or instead, could it be related to the fact that at some point I ran a second Spyder instance?

- Or even, could the computer have somehow run out of memory? But then wouldn't the command have stopped running?

- ... any other possible explanation?

- Is it possible to "tell" Dask to keep up the hard work and go back to using all CPU power while it is still running the original command?

- Is it possible to interrupt an execution and keep whichever calculations have already been performed? I have noticed that stopping the current command doesn't seem to do much.

- Is it possible to inquire on the overall progress of the computation while it is running? I would like to know how many model runs are left to have an idea of how long it would take to complete in this slow pace. I have tried using the ProgressBar in the past but it hangs on 0% until a few seconds before the end of the computations.

To be clear, uploading the model and the necessary data would be very complex. I haven't created a reproducible example either, partly out of fear of making the issue worse (for now the model is at least still running...) and partly because, as you can probably tell by now, I have very little idea of what could be causing it, so I am not expecting anyone to be able to reproduce it. I'm aware this is not best practice and apologise in advance. However, I would really appreciate any thoughts on what could be going on and possible ways to deal with it from anyone who has been through something similar before or has experience with Dask.

Running:

- macOS 10.13.6 (Memory: 16 GB | Processor: 2.5 GHz Intel Core i7 | 4 cores)
- Spyder 3.3.1
- dask 0.19.2
- pandas 0.23.4

Please let me know if anything needs to be made clearer.

If you believe it can be relevant, the main idea of the script is:

- Create a pandas DataFrame where each column is a parameter and each row is a possible parameter combination (Cartesian product). Some columns at the end of each row are also pre-allocated to store the respective values of the objective functions.
- Generate a dask dataframe that is the DataFrame above split into 8 partitions.
- Define a function that takes a partition and, for each row:
  - runs the model with the coefficient values defined in the row,
  - retrieves the values of the objective functions,
  - assigns these values to the respective (pre-allocated) columns of the current row in the partition,

  and then returns the partition with the objective-function columns populated with the calculated values.
- Apply this function to the dask dataframe with `map_partitions()`.

Any thoughts? This shows how simple the script is:

The dashboard:

Update: The approach I took was to:

- Set a large number of partitions (`npartitions=nCores*200`). This made it much easier to visualise the progress. I'm not sure whether setting so many partitions is good practice, but it worked without much of a slowdown.
- Instead of trying to get a single huge pandas DataFrame at the end with `.compute()`, write the dask dataframe to Parquet (this way each partition was written to a separate file). Later, reading all the files into a dask dataframe and `compute`-ing it into a pandas DataFrame wasn't difficult, and if something went wrong midway, at least I wouldn't lose the partitions that had already been processed and written successfully.

This is what it looked like at a given point: