Summary/TL;DR: My ML Kit object detection application is unable to detect objects because images aren't being acquired by the analyze() method.

BACKGROUND

I'm currently working on a mobile application, written in Java, that uses CameraX and Google ML Kit. The purpose of the application is to detect objects in a real-time camera preview. I implemented ML Kit by following the guide "Detect and track objects with ML Kit on Android" (base model option) to detect objects in successive frames.

However, when I run the application on my device, the camera preview works, but no objects are detected or displayed on the screen. While trying to resolve this, I found a StackOverflow answer describing a very similar issue. Unfortunately, that user had built their application with a custom model (tflite), whereas I am using the base model, which, according to my research, relies on ML Kit's on-device object detection. My code is limited to what appears in the aforementioned documentation. Since my IDE (Android Studio) reports no syntax errors, I am unsure why no object detection takes place in my application. The relevant code is shown below:

CODE

    public class MainActivity extends AppCompatActivity {

        private ListenableFuture<ProcessCameraProvider> cameraProviderFuture;

        private class YourAnalyzer implements ImageAnalysis.Analyzer {

            @Override
            @ExperimentalGetImage
            public void analyze(ImageProxy imageProxy) {
                Image mediaImage = imageProxy.getImage();
                if (mediaImage != null) {
                    InputImage image =
                            InputImage.fromMediaImage(mediaImage, imageProxy.getImageInfo().getRotationDegrees());
                    // Pass image to an ML Kit Vision API
                    // ...
                    ObjectDetectorOptions options =
                            new ObjectDetectorOptions.Builder()
                                    .setDetectorMode(ObjectDetectorOptions.STREAM_MODE)
                                    .enableClassification() // Optional
                                    .build();

                    ObjectDetector objectDetector = ObjectDetection.getClient(options);

                    objectDetector.process(image)
                            .addOnSuccessListener(
                                    new OnSuccessListener<List<DetectedObject>>() {
                                        @Override
                                        public void onSuccess(List<DetectedObject> detectedObjects) {
                                            Log.d("TAG", "onSuccess" + detectedObjects.size());
                                            for (DetectedObject detectedObject : detectedObjects) {
                                                Rect boundingBox = detectedObject.getBoundingBox();
                                                Integer trackingId = detectedObject.getTrackingId();
                                                for (DetectedObject.Label label : detectedObject.getLabels()) {
                                                    String text = label.getText();
                                                    if (PredefinedCategory.FOOD.equals(text)) { }
                                                    int index = label.getIndex();
                                                    if (PredefinedCategory.FOOD_INDEX == index) { }
                                                    float confidence = label.getConfidence();
                                                }
                                            }
                                            imageProxy.close();
                                        }
                                    }
                            )
                            .addOnFailureListener(
                                    new OnFailureListener() {
                                        @Override
                                        public void onFailure(@NonNull Exception e) {
                                            Log.d("TAG", "onFailure" + e);
                                            imageProxy.close();
                                        }
                                    }
                            );
                }
            }
        }

        @Override
        protected void onCreate(@Nullable Bundle savedInstanceState) {
            super.onCreate(savedInstanceState);
            setContentView(R.layout.activity_main);

            cameraProviderFuture = ProcessCameraProvider.getInstance(this);
            PreviewView previewView = findViewById(R.id.previewView);

            cameraProviderFuture.addListener(() -> {
                try {
                    ProcessCameraProvider cameraProvider = cameraProviderFuture.get();
                    bindPreview(cameraProvider);
                } catch (ExecutionException | InterruptedException e) {}
            }, ContextCompat.getMainExecutor(this));
        }

        void bindPreview(@NonNull ProcessCameraProvider cameraProvider) {
            PreviewView previewView = findViewById(R.id.previewView);

            Preview preview = new Preview.Builder()
                    .build();

            CameraSelector cameraSelector = new CameraSelector.Builder()
                    .requireLensFacing(CameraSelector.LENS_FACING_BACK)
                    .build();

            preview.setSurfaceProvider(previewView.getSurfaceProvider());

            ImageAnalysis imageAnalysis =
                    new ImageAnalysis.Builder()
                            .setTargetResolution(new Size(1280,720))
                            .setBackpressureStrategy(ImageAnalysis.STRATEGY_KEEP_ONLY_LATEST)
                            .build();

            imageAnalysis.setAnalyzer(ContextCompat.getMainExecutor(this), new YourAnalyzer());

            Camera camera = cameraProvider.bindToLifecycle((LifecycleOwner)this, cameraSelector, preview, imageAnalysis);
        }
    }
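Two details of the analyzer above are worth noting: it builds a new detector on every frame, and it calls imageProxy.close() only inside the two listeners, so a frame whose getImage() returns null is never released. The following is a minimal plain-Java sketch of the alternative pattern (one detector reused for all frames, and every frame closed on every path). It is not Android code: Frame, Detector, and Analyzer are hypothetical stand-ins for ImageProxy, ObjectDetector, and the analyzer class, and CompletableFuture.whenComplete is swapped in for the role that the Task API's addOnCompleteListener would play. It may well not be the cause of the empty results, since onSuccess is evidently being called.

```java
import java.util.Collections;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.atomic.AtomicInteger;

class FrameReleaseSketch {

    /** Hypothetical stand-in for ImageProxy; counts how often close() is called. */
    static final class Frame implements AutoCloseable {
        final boolean hasImage;
        final AtomicInteger closeCalls = new AtomicInteger();

        Frame(boolean hasImage) { this.hasImage = hasImage; }

        @Override
        public void close() { closeCalls.incrementAndGet(); }
    }

    /** Hypothetical stand-in for the detector; results are just label strings here. */
    interface Detector {
        CompletableFuture<List<String>> process(Frame frame);
    }

    /** Hypothetical analyzer: holds one detector and releases every frame exactly once. */
    static final class Analyzer {
        private final Detector detector; // created once, reused for every frame

        Analyzer(Detector detector) { this.detector = detector; }

        void analyze(Frame frame) {
            if (!frame.hasImage) {
                frame.close(); // release the frame even when there is nothing to process
                return;
            }
            // whenComplete runs on success and on failure, so the frame is always released.
            detector.process(frame).whenComplete((labels, error) -> frame.close());
        }
    }

    public static void main(String[] args) {
        // A detector that completes immediately with an empty result list.
        Detector detector =
                frame -> CompletableFuture.completedFuture(Collections.<String>emptyList());
        Analyzer analyzer = new Analyzer(detector);

        Frame withImage = new Frame(true);
        Frame withoutImage = new Frame(false);
        analyzer.analyze(withImage);
        analyzer.analyze(withoutImage);

        System.out.println(withImage.closeCalls.get());    // prints 1
        System.out.println(withoutImage.closeCalls.get()); // prints 1
    }
}
```

In the real analyzer, the equivalent change would presumably be to keep the ObjectDetector in a field and close the ImageProxy from a single completion listener plus the null-image branch; that mapping is an assumption and is not taken from the guide.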

END OBJECTIVE

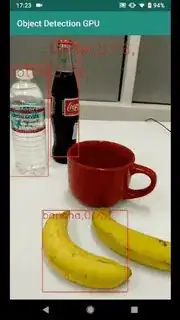

If any sort of visual example is required to understand what the intended effect should result in, Here it is included in the following image.

UPDATE [April 11, 2021]: To debug, I added a Log.d(..) call to the onSuccess method to determine the size of the returned object list. The Android Studio console printed D/TAG: onSuccess0 up to 30 times within a couple of seconds of running the application. Does this mean that the application is not detecting any objects? This has bugged me, since I followed the documentation exactly.

UPDATE [May 1, 2021]: The line DetectedObject[] results = new DetectedObject[0]; was deleted from the onSuccess method.

The loop for (DetectedObject detectedObject : results) now iterates over "detectedObjects" instead of "results", matching the code in the documentation. However, onSuccess is still logging D/TAG: onSuccess0, which raises further questions about why the method isn't receiving any data whatsoever.
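Since the per-frame log only ever shows onSuccess0, it may help to tally results across frames, which would separate "no object is ever detected" from "objects are detected only occasionally". Below is a minimal plain-Java sketch of such a tally. DetectionStats is a hypothetical helper, not part of ML Kit; the assumption is that onSuccess would call record(detectedObjects.size()) and log summary() every so often.

```java
class DetectionStatsSketch {

    /** Hypothetical helper: tallies how many processed frames returned no objects. */
    static final class DetectionStats {
        private int frames;        // frames for which a result was received
        private int emptyFrames;   // frames whose result list was empty
        private int totalObjects;  // objects summed over all frames

        void record(int objectCount) {
            frames++;
            totalObjects += objectCount;
            if (objectCount == 0) {
                emptyFrames++;
            }
        }

        String summary() {
            return "frames=" + frames + " empty=" + emptyFrames + " objects=" + totalObjects;
        }
    }

    public static void main(String[] args) {
        DetectionStats stats = new DetectionStats();
        int[] sizes = {0, 0, 2, 0, 1}; // made-up list sizes, for illustration only
        for (int size : sizes) {
            stats.record(size);
        }
        System.out.println(stats.summary()); // prints frames=5 empty=3 objects=3
    }
}
```

If the summary reported every frame as empty over a longer run, that would point at the input or the detector configuration rather than at frame delivery, because onSuccess firing at all means frames are reaching the detector.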