Interesting question. As you note, a few examples of this have been reported on GitHub and the official xgboost site.

Others have also posted similar questions.

Looking at the official xgboost documentation, there is an extensive section on GPU support.

There are a few things to check. The documentation notes that:

Tree construction (training) and prediction can be accelerated with CUDA-capable GPUs.

1. Is your GPU CUDA enabled?

Yes, it is.
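If you want to confirm this from Python rather than by eye, here is a minimal sketch. It assumes the NVIDIA driver's `nvidia-smi` tool is on your PATH and treats a successful `nvidia-smi -L` listing as a rough proxy for a usable CUDA GPU. The function name `cuda_gpu_visible` is mine, not part of xgboost.

```python
import shutil
import subprocess


def cuda_gpu_visible() -> bool:
    """Rough check: True if nvidia-smi exists and lists at least one GPU."""
    exe = shutil.which("nvidia-smi")
    if exe is None:
        # No NVIDIA driver tooling found on PATH.
        return False
    try:
        result = subprocess.run(
            [exe, "-L"], capture_output=True, text=True, timeout=10
        )
    except (OSError, subprocess.SubprocessError):
        return False
    # "nvidia-smi -L" prints one line per device, e.g. "GPU 0: ...".
    return result.returncode == 0 and "GPU" in result.stdout


print("NVIDIA GPU visible:", cuda_gpu_visible())
```

This only tells you the driver can see a device. It does not prove that your xgboost build was compiled with GPU support.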

2. Are you using parameters that can be affected by GPU usage?

Yes, you are. Keep in mind that only certain parameters benefit from using a GPU. Those are:

{subsample, sampling_method, colsample_bytree, colsample_bylevel, max_bin, gamma, gpu_id, predictor, grow_policy, monotone_constraints, interaction_constraints, single_precision_histogram}

Most of these are included in your hyperparameter set, which is a good thing.

3. Are you configuring parameters to use GPU support?

If you look at the XGBoost Parameters page, you can find additional settings that may help improve your times. For example, updater can be set to grow_gpu_hist (this is moot here since you already set tree_method, but I note it for completeness):

grow_gpu_hist: Grow tree with GPU.

At the bottom of the parameters page, there are additional parameters that apply when gpu_hist is enabled, specifically deterministic_histogram (also moot, since it defaults to True):

Build histogram on GPU deterministically. Histogram building is not deterministic due to the non-associative aspect of floating point summation. We employ a pre-rounding routine to mitigate the issue, which may lead to slightly lower accuracy. Set to false to disable it.
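To keep a GPU vs. CPU comparison fair, it helps if the two runs differ only in the device-related settings. A minimal sketch, using the hyperparameter values from the question's script: the helper name `make_params` is hypothetical, and the dictionaries are plain Python that you would pass to `xgb.train` yourself.

```python
def make_params(use_gpu: bool) -> dict:
    """Build an xgboost parameter dict that differs only in device settings."""
    # Shared hyperparameters, identical for both runs.
    params = {
        "max_depth": 5,
        "objective": "binary:logistic",
        "subsample": 0.8,
        "colsample_bytree": 0.8,
        "eta": 0.5,
        "min_child_weight": 1,
    }
    if use_gpu:
        # Settings used in this answer. Newer xgboost releases (2.0 and later,
        # as far as I know) prefer tree_method="hist" with device="cuda".
        params.update({"tree_method": "gpu_hist", "gpu_id": 0})
    else:
        params["tree_method"] = "hist"
    return params


gpu_params = make_params(use_gpu=True)
cpu_params = make_params(use_gpu=False)
print(gpu_params["tree_method"], cpu_params["tree_method"])
```

Building both dictionaries from one base makes it harder to accidentally change a hyperparameter in only one of the runs.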

4. The data

I ran some experiments on synthetic data. Since I didn't have access to your data, I used sklearn's make_classification, which lets me control the number of samples and features directly.

I first made a few changes to your script but saw no difference: I changed hyperparameters in the GPU vs. CPU examples, ran it 100 times and averaged the results, and so on. Nothing stood out. I then recalled that I had once used XGBoost on GPU vs. CPU to speed up some analytics, but that was on a much bigger dataset.
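For the repeated runs, a small helper along these lines is enough. This is a sketch rather than the exact code I used: `mean_runtime` is a hypothetical name, and the lambda in the usage line stands in for a call such as `xgb.train(param, dtrain, num_round)`.

```python
import statistics
import time


def mean_runtime(fn, repeats=100):
    """Call fn() `repeats` times and return the mean wall-clock time in seconds."""
    durations = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        durations.append(time.perf_counter() - start)
    return statistics.mean(durations)


# Stand-in workload; replace with the training call you want to time.
avg = mean_runtime(lambda: sum(range(10_000)), repeats=5)
print("mean runtime: %.6fs" % avg)
```

Averaging smooths out one-off effects such as GPU initialization on the first call, which can otherwise dominate a short training run.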

I edited your script slightly to use this data, and then varied the number of samples and features in the dataset (via the n_samples and n_features parameters) to observe the effect on runtime. It appears that a GPU significantly improves training times for high-dimensional data (many features), while simply adding more samples does not produce a large improvement. See my script below:

```python
import time

import pandas as pd
import xgboost as xgb
from sklearn.datasets import make_classification

xgb_gpu = []
xgbclassifier_gpu = []
xgb_cpu = []
xgbclassifier_cpu = []

n_samples = 75000
n_features = 500

for i in range(10):
    # Grow the dataset each iteration. Comment out one (or both) of these
    # lines to vary only samples, only features, or neither.
    n_samples += 10000
    n_features += 300

    # Make my own data since I do not have the data from the SO question.
    # make_classification expects integer counts, hence the int() casts.
    X_train2, y_train = make_classification(
        n_samples=n_samples, n_features=int(n_features * 0.9),
        n_informative=int(n_features * 0.1), n_redundant=100,
        flip_y=0.10, random_state=8)

    # Keep script from OP intact
    # --- xgb.train on GPU ---
    param = {'max_depth': 5, 'objective': 'binary:logistic', 'subsample': 0.8,
             'colsample_bytree': 0.8, 'eta': 0.5, 'min_child_weight': 1,
             'tree_method': 'gpu_hist', 'gpu_id': 0}
    num_round = 100
    dtrain = xgb.DMatrix(X_train2, y_train)
    tic = time.time()
    model = xgb.train(param, dtrain, num_round)
    elapsed = time.time() - tic
    print('passed time with xgb (gpu): %.3fs' % elapsed)
    xgb_gpu.append(elapsed)

    # --- XGBClassifier on GPU ---
    xgb_param = {'max_depth': 5, 'objective': 'binary:logistic', 'subsample': 0.8,
                 'colsample_bytree': 0.8, 'learning_rate': 0.5, 'min_child_weight': 1,
                 'tree_method': 'gpu_hist', 'gpu_id': 0}
    model = xgb.XGBClassifier(**xgb_param)
    tic = time.time()
    model.fit(X_train2, y_train)
    elapsed = time.time() - tic
    print('passed time with XGBClassifier (gpu): %.3fs' % elapsed)
    xgbclassifier_gpu.append(elapsed)

    # --- xgb.train on CPU ---
    param = {'max_depth': 5, 'objective': 'binary:logistic', 'subsample': 0.8,
             'colsample_bytree': 0.8, 'eta': 0.5, 'min_child_weight': 1,
             'tree_method': 'hist'}
    num_round = 100
    dtrain = xgb.DMatrix(X_train2, y_train)
    tic = time.time()
    model = xgb.train(param, dtrain, num_round)
    elapsed = time.time() - tic
    print('passed time with xgb (cpu): %.3fs' % elapsed)
    xgb_cpu.append(elapsed)

    # --- XGBClassifier on CPU ---
    xgb_param = {'max_depth': 5, 'objective': 'binary:logistic', 'subsample': 0.8,
                 'colsample_bytree': 0.8, 'learning_rate': 0.5, 'min_child_weight': 1,
                 'tree_method': 'hist'}
    model = xgb.XGBClassifier(**xgb_param)
    tic = time.time()
    model.fit(X_train2, y_train)
    elapsed = time.time() - tic
    print('passed time with XGBClassifier (cpu): %.3fs' % elapsed)
    xgbclassifier_cpu.append(elapsed)

# Collect the timings (one row per iteration) for comparison.
df = pd.DataFrame({'XGB GPU': xgb_gpu, 'XGBClassifier GPU': xgbclassifier_gpu,
                   'XGB CPU': xgb_cpu, 'XGBClassifier CPU': xgbclassifier_cpu})
#df.to_csv('both_results.csv')
```

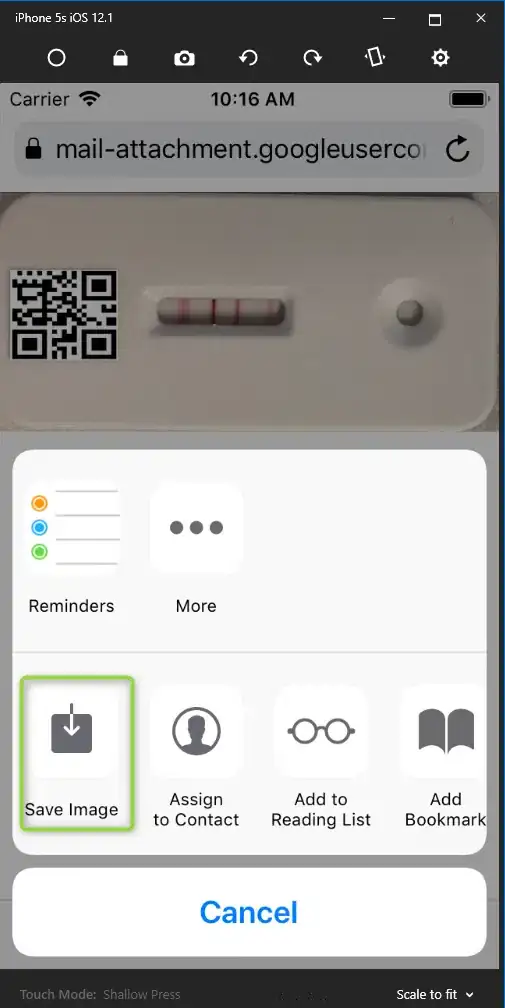

I ran this four ways: increasing only the features, increasing only the samples, increasing both together, and changing neither as a baseline. See results below:

| Iteration | XGB GPU (s) | XGBClassifier GPU (s) | XGB CPU (s) | XGBClassifier CPU (s) | Experiment |
|:--------:|:--------:|:-----------------:|:--------:|:-----------------:|:----------------:|
| 0 | 11.3801 | 12.00785 | 15.20124 | 15.48131 | Changed Features |
| 1 | 15.67674 | 16.85668 | 20.63819 | 22.12265 | Changed Features |
| 2 | 18.76029 | 20.39844 | 33.23108 | 32.29926 | Changed Features |
| 3 | 23.147 | 24.91953 | 47.65588 | 44.76052 | Changed Features |
| 4 | 27.42542 | 29.48186 | 50.76428 | 55.88155 | Changed Features |
| 5 | 30.78596 | 33.03594 | 71.4733 | 67.24275 | Changed Features |
| 6 | 35.03331 | 37.74951 | 77.68997 | 75.61216 | Changed Features |
| 7 | 39.13849 | 42.17049 | 82.95307 | 85.83364 | Changed Features |
| 8 | 42.55439 | 45.90751 | 92.33368 | 96.72809 | Changed Features |
| 9 | 46.89023 | 50.57919 | 105.8298 | 107.3893 | Changed Features |
| 0 | 7.013227 | 7.303488 | 6.998254 | 9.733574 | No Changes |
| 1 | 6.757523 | 7.302388 | 5.714839 | 6.805287 | No Changes |
| 2 | 6.753428 | 7.291906 | 5.899611 | 6.603533 | No Changes |
| 3 | 6.749848 | 7.293555 | 6.005773 | 6.486256 | No Changes |
| 4 | 6.755352 | 7.297607 | 5.982163 | 8.280619 | No Changes |
| 5 | 6.756498 | 7.335412 | 6.321188 | 7.900422 | No Changes |
| 6 | 6.792402 | 7.332112 | 6.17904 | 6.443676 | No Changes |
| 7 | 6.786584 | 7.311666 | 7.093638 | 7.811417 | No Changes |
| 8 | 6.7851 | 7.30604 | 5.574762 | 6.045969 | No Changes |
| 9 | 6.789152 | 7.309363 | 5.751018 | 6.213471 | No Changes |
| 0 | 7.696765 | 8.03615 | 6.175457 | 6.764809 | Changed Samples |
| 1 | 7.914885 | 8.646722 | 6.997217 | 7.598789 | Changed Samples |
| 2 | 8.489555 | 9.2526 | 6.899783 | 7.202334 | Changed Samples |
| 3 | 9.197605 | 10.02934 | 7.511708 | 7.724675 | Changed Samples |
| 4 | 9.73642 | 10.64056 | 7.918493 | 8.982463 | Changed Samples |
| 5 | 10.34522 | 11.31103 | 8.524865 | 9.403711 | Changed Samples |
| 6 | 10.94025 | 11.98357 | 8.697257 | 9.49277 | Changed Samples |
| 7 | 11.80717 | 12.93195 | 8.734307 | 10.79595 | Changed Samples |
| 8 | 12.18282 | 13.38646 | 9.175231 | 10.33532 | Changed Samples |
| 9 | 13.05499 | 14.33106 | 11.04398 | 10.50722 | Changed Samples |
| 0 | 12.43683 | 13.19787 | 12.80741 | 13.86206 | Changed Both |
| 1 | 18.59139 | 20.01569 | 25.61141 | 35.37391 | Changed Both |
| 2 | 24.37475 | 26.44214 | 40.86238 | 42.79259 | Changed Both |
| 3 | 31.96762 | 34.75215 | 68.869 | 59.97797 | Changed Both |
| 4 | 41.26578 | 44.70537 | 83.84672 | 94.62811 | Changed Both |
| 5 | 49.82583 | 54.06252 | 109.197 | 108.0314 | Changed Both |
| 6 | 59.36528 | 64.60577 | 131.1234 | 140.6352 | Changed Both |
| 7 | 71.44678 | 77.71752 | 156.1914 | 161.4897 | Changed Both |
| 8 | 81.79306 | 90.56132 | 196.0033 | 193.4111 | Changed Both |
| 9 | 94.71505 | 104.8044 | 215.0758 | 224.6175 | Changed Both |

[Plots not shown, one per case: No Change; Linearly Increasing Feature Count; Linearly Increasing Samples; Linearly Increasing Samples + Features]
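To put a number on the difference, you can divide the CPU time by the GPU time for the last row of each experiment. The values below are copied from the XGB GPU and XGB CPU columns of the table above (`xgb.train`, in seconds). The dictionary layout is just my choice for illustration.

```python
# Final-row xgb.train timings in seconds, copied from the results table.
final_times = {
    "Changed Features": {"gpu": 46.89023, "cpu": 105.8298},
    "No Changes": {"gpu": 6.789152, "cpu": 5.751018},
    "Changed Samples": {"gpu": 13.05499, "cpu": 11.04398},
    "Changed Both": {"gpu": 94.71505, "cpu": 215.0758},
}

# Ratio > 1 means the GPU run was faster than the CPU run.
speedups = {name: t["cpu"] / t["gpu"] for name, t in final_times.items()}

for name, ratio in speedups.items():
    print(f"{name}: CPU/GPU = {ratio:.2f}x")
```

On these numbers the GPU is a bit over 2x faster in the two cases where the feature count grows, and slightly slower than the CPU (ratio around 0.85) in the other two.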

As I researched further, this made sense. GPUs are known to scale well with high-dimensional data, so you would expect to see a training-time improvement if your data were high dimensional.

Though we cannot say for sure without access to your data, it seems that a GPU delivers significant speedups only when the data supports it, and that may not be the case given the size and shape of your data.