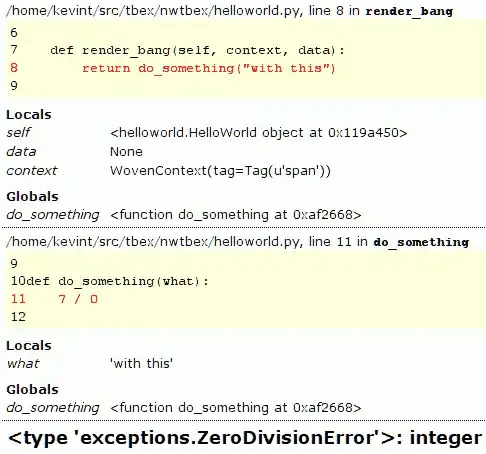

I have images from a SICK Trispector depth laser scanner. The images are PNG files in a format SICK calls Trispector 2.5D PNG. According to SICK's documentation they contain both reflection data and depth data, but SICK will not explain how to read that data without their own or their partners' software. What I need is the depth data; the reflection data would be nice to have but is not necessary. When I open the file I get a monochrome image that seems to have the reflection data in the top part and overlapping height data in the bottom part. The scanned object is a crate of beer bottles with bottle caps. You can see an example here:

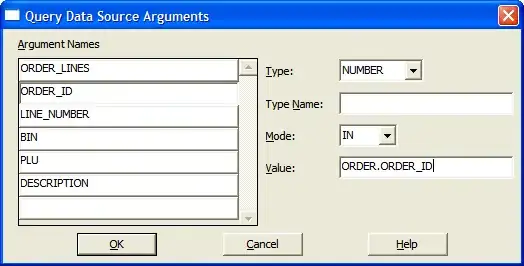

I have tried opening the image in many different image viewers and searched for information on 2.5D, but nothing I found seems relevant to this format. In the MATLAB image preview I see only one side of the height data, and I don't know how to use this information. See the following image from the MATLAB preview:

Does anyone know how to extract the height data from an image like this? Maybe someone has worked with SICK's SOPAS or SICK scanners before and understands what SICK calls the "2.5D PNG" format. An OpenCV solution would be nice.

Edit: As @DanMašek comments, the problem is one of separating two images of different bit depths from a single PNG. He provides further insight into the problem and a great OpenCV solution for separating the intensity and depth images as 8-bit and 16-bit images, respectively:
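For a rough idea of what such a split can look like, here is a minimal sketch. It is not the code from the comment, and the file layout is an assumption I have not confirmed against SICK's documentation: the PNG decodes to a single-channel 8-bit array whose top third is the intensity image, and whose remaining two thirds are the raw little-endian bytes of a 16-bit depth image of the same width. The names `split_2p5d` and `load_2p5d` are made up for this example.

```python
import numpy as np


def split_2p5d(raw: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Split a stacked 8-bit array into (intensity uint8, depth uint16).

    Assumed layout (not confirmed by SICK documentation):
      rows [0, h)   -> 8-bit intensity image, h x w
      rows [h, 3h)  -> bytes of an h x w 16-bit depth image,
                       row-major, little-endian
    """
    if raw.ndim != 2 or raw.dtype != np.uint8:
        raise ValueError("expected a single-channel 8-bit image")
    rows, cols = raw.shape
    if rows % 3 != 0:
        raise ValueError("row count is not a multiple of 3; assumed layout does not fit")
    h = rows // 3

    intensity = raw[:h].copy()

    # Reinterpret every pair of consecutive bytes as one 16-bit sample.
    depth_bytes = np.ascontiguousarray(raw[h:]).reshape(-1)
    depth = depth_bytes.view("<u2").reshape(h, cols).astype(np.uint16)
    return intensity, depth


def load_2p5d(path: str) -> tuple[np.ndarray, np.ndarray]:
    """Read the PNG with OpenCV without any conversion, then split it."""
    import cv2  # imported here so split_2p5d can be used without OpenCV

    raw = cv2.imread(path, cv2.IMREAD_UNCHANGED)
    if raw is None:
        raise FileNotFoundError(path)
    return split_2p5d(raw)
```

Calling `intensity, depth = load_2p5d("scan.png")` (the file name is a placeholder) would then give two arrays that can be saved separately with `cv2.imwrite`. If the depth image looks like noise, the byte order or the split point in the assumed layout is probably wrong for that file.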