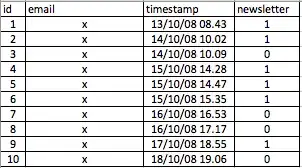

I'm using the Python client library to load data into BigQuery tables. I need to update some changed rows in those tables, but I can't figure out how to update them correctly. I want something like an UPSERT function: insert a row only if it does not already exist, otherwise update the existing row.

Is it the right approach to add a special checksum field to the tables and compare the checksums during the loading process? If that is a good idea, how can I implement it with the Python client? (As far as I know, it can't update existing data.)
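To make the checksum idea concrete, here is a minimal sketch of the comparison I have in mind. It is plain Python and does not call BigQuery at all. The key column `id`, the helper names `row_checksum` and `classify_rows`, and the `existing_checksums` mapping (key to stored checksum, which I assume I could read from the table beforehand) are all hypothetical names chosen for illustration.

```python
import hashlib
import json


def row_checksum(row, key_field="id"):
    """Return an MD5 hex digest over all non-key fields of a row (a dict)."""
    payload = {k: v for k, v in row.items() if k != key_field}
    # sort_keys makes the digest independent of dict ordering;
    # default=str handles values such as dates that JSON cannot encode.
    encoded = json.dumps(payload, sort_keys=True, default=str).encode("utf-8")
    return hashlib.md5(encoded).hexdigest()


def classify_rows(incoming, existing_checksums, key_field="id"):
    """Split incoming rows into rows to insert, rows to update, and unchanged rows.

    existing_checksums is assumed to map each key already in the table
    to the checksum stored alongside that row.
    """
    to_insert, to_update, unchanged = [], [], []
    for row in incoming:
        key = row[key_field]
        checksum = row_checksum(row, key_field)
        if key not in existing_checksums:
            to_insert.append(row)      # new key: insert
        elif existing_checksums[key] != checksum:
            to_update.append(row)      # known key, different content: update
        else:
            unchanged.append(row)      # known key, same content: skip
    return to_insert, to_update, unchanged
```

This only decides which rows would need an insert or an update. How to actually apply the updates in BigQuery is the part I am unsure about.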

Could you please explain what the best practice is?