I am writing JSON records into a BigQuery table using the function bq.insert_rows_json(f'{project}.{dataset}.{table_name}', rows_to_insert). This operation is done in INSERT mode. I was wondering if I could use the same function but in UPSERT mode. Is it possible ? I check the documentation here but did not find an argument for that.

- 262

- 2

- 16

-

Does this answer your question? [Google BQ - how to upsert existing data in tables?](https://stackoverflow.com/questions/48177241/google-bq-how-to-upsert-existing-data-in-tables) – Mr. Nobody Apr 30 '22 at 22:22

-

Not really because I beleive it would force me to expand each JSON record into the SQL query there described. I would like to know if there is a in-built python function for this UPSET operation of a JSON query. – FVCC May 02 '22 at 09:18

-

Can you provide sample data (that has a column for validation on uniqueness and sample json data) for proper replication of your use case? – Scott B May 03 '22 at 08:38

-

Yes, here is a sample record : { "type": "App", "name": "00239_pipeline_name", "version": "-SNAPSHOT", "description": "Data Pipeline Application", "artifact": { "name": "cdap-data-pipeline", "version": "6.5.1", "scope": "SYSTEM" } } – FVCC May 04 '22 at 14:18

1 Answers

I can't seem to find an in-built UPSERT function for python. However, you may try and consider the below approach which is derived from the comment of @Mr.Nobody.

from google.cloud import bigquery

client = bigquery.Client()

query_job = client.query(

"""

MERGE my-dataset.json_table T

USING my-dataset.json_table_source S

ON T.int64_field_0 = S.int64_field_0

WHEN MATCHED THEN

UPDATE SET string_field_1 = s.string_field_1

WHEN NOT MATCHED THEN

INSERT (int64_field_0, string_field_1) VALUES(int64_field_0, string_field_1)"""

)

results = query_job.result() # Waits for job to complete.

In this approach, you will be needing to ingest all of your supposedly "updated" JSON data on a table before inserting or updating them to your main BigQuery table. The query then will match each rows to the main table if the primary ID (uniqueness checker) is already there (then query will do UPDATE) or not yet (then query will do INSERT).

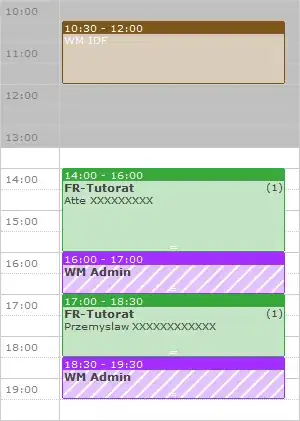

Screenshot of both tables before running the python code.

Screenshot of the Main Table when the python code finished executing.

Conclusion: The int64_field_0 4 was updated (from version 1.0.0. to 6.5.1) because it is already existing in the Main table. The int64_field_0 5 was inserted because it is not yet existing on the main table.

- 2,516

- 1

- 8

- 15