I have read An Introduction to the Intel® QuickPath Interconnect. The document does not mention that QPI is used by processors to access memory. So I think that processors don't access memory through QPI.

Is my understanding correct?

Intel QuickPath Interconnect (QPI) is not wired to the DRAM DIMMs, and as such it is not used to access the memory that is connected to the CPU's integrated memory controller (iMC).

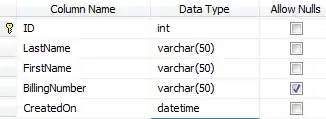

The picture above is from the paper you linked. It shows the connections of a processor, with the QPI signals pictured separately from the memory interface.

The text just before the picture confirms that QPI is not used to access memory:

> The processor also typically has one or more integrated memory controllers. Based on the level of scalability supported in the processor, it may include an integrated crossbar router and more than one Intel® QuickPath Interconnect port.

Furthermore, if you look at a typical datasheet you'll see that the CPU pins for accessing the DIMMs are not the ones used by QPI.

The QPI is however used to access the uncore, the part of the processor that contains the memory controller.

Courtesy of QPI article on Wikipedia

QPI is a fast, general-purpose internal bus. In addition to giving access to the CPU's own uncore, it gives access to other CPUs' uncores. Because of this link, every resource available in the uncore can potentially be accessed over QPI, including the iMC of a remote CPU.

QPI defines a protocol with multiple message classes; two of them are used to read memory through another CPU's iMC.

The flow uses a layered stack similar to the usual network stack.

Thus the path to remote memory includes a QPI segment, but the path to local memory doesn't.
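To make the local/remote distinction concrete, here is a minimal sketch in Python. It is only a toy model, not a description of real hardware routing: the `MemoryAccess` type, the hop labels and the function names are all made up for illustration, and it assumes every pair of sockets is directly connected by a single QPI link.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MemoryAccess:
    """Hypothetical description of one memory request."""
    requesting_socket: int  # socket whose core issues the request
    home_socket: int        # socket whose iMC owns the target DRAM


def access_path(access):
    """Return the hops of the request as a list of (illustrative) labels."""
    if access.requesting_socket == access.home_socket:
        # Local memory: served by the socket's own iMC, no QPI segment.
        return ["core", "local uncore (iMC)", "DRAM"]
    # Remote memory: the request travels over QPI to the other socket's iMC.
    return ["core", "local uncore", "QPI link", "remote uncore (iMC)", "DRAM"]


def crosses_qpi(access):
    """True if the request path contains a QPI segment."""
    return "QPI link" in access_path(access)


if __name__ == "__main__":
    for acc in (MemoryAccess(0, 0), MemoryAccess(0, 1)):
        print(acc, "->", " -> ".join(access_path(acc)))
```

In this model only the second request, whose home socket differs from the requesting socket, includes the QPI hop.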

Update

For the Xeon E7 v3-18C CPU (designed for multi-socket systems), the Home agent doesn't access the DIMMs directly; instead it uses an Intel SMI2 link to access the Intel C102/C104 Scalable Memory Buffer, which in turn accesses the DIMMs.

The SMI2 link is faster than DDR3, and the memory controller implements reliability or interleaving with the DIMMs.

Initially the CPU used an FSB to access the north bridge, which contained the memory controller and was linked to the south bridge (ICH, I/O Controller Hub in Intel terminology) through DMI.

Later the FSB was replaced by QPI.

Then the memory controller was moved into the CPU (it uses its own bus to access memory and QPI to communicate with the CPU).

Later, the north bridge (IOH, I/O Hub in Intel terminology) was integrated into the CPU and was used to access the PCH (which now replaces the south bridge), while PCIe was used to access fast devices (like an external graphics controller).

Recently the PCH has been integrated into the CPU as well, which now exposes only PCIe, DIMM pins, SATA Express and any other common internal bus.

As a rule of thumb the buses used by the processors are:

Yes, QPI is used to access all remote memory on multi-socket systems, and much of its design and performance is intended to support such access in a reasonable fashion (i.e., with latency and bandwidth not too much worse than local access).

Basically, most x86 multi-socket systems are lightly<sup>1</sup> NUMA: every DRAM bank is attached to the memory controller of a particular socket. This memory is local memory for that socket, while the remaining memory (attached to some other socket) is remote memory. All access to remote memory goes over the QPI links, and on many systems<sup>2</sup> that is half or more of all memory accesses.

So QPI is designed to be low latency and high bandwidth to make such access still perform well. Furthermore, aside from pure memory access, QPI is the link through which the cache coherence between sockets occurs, e.g., notifying the other socket of invalidations, lines which have transitioned into the shared state, etc.

<sup>1</sup> That is, the NUMA factor is fairly low, typically less than 2 for latency and bandwidth.

<sup>2</sup> E.g., with NUMA interleave mode on, and 4 sockets, 75% of your access is remote.
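The 75% figure follows from simple arithmetic: if memory is interleaved evenly across N sockets, only 1/N of it is local to any given socket, so (N - 1)/N of accesses are remote. A small sketch of that calculation in Python; the function names are made up for illustration, uniform interleaving is an assumption, and the NUMA factor of 1.5 used in the example is a hypothetical value, not a measurement.

```python
from fractions import Fraction


def remote_fraction(sockets):
    """Fraction of accesses that are remote, assuming memory is
    interleaved evenly across all sockets."""
    if sockets < 1:
        raise ValueError("need at least one socket")
    return Fraction(sockets - 1, sockets)


def average_latency_factor(sockets, numa_factor):
    """Average access latency relative to local latency, where
    numa_factor is the remote/local latency ratio (an assumed input)."""
    remote = remote_fraction(sockets)
    return (1 - remote) + remote * numa_factor


if __name__ == "__main__":
    for n in (1, 2, 4):
        print(n, "sockets:", remote_fraction(n), "of accesses are remote")
    # Hypothetical NUMA factor of 1.5 on a 4-socket system.
    print("relative latency:", average_latency_factor(4, Fraction(3, 2)))
```

With 2 sockets the remote share is one half, and with 4 sockets it is three quarters, which matches the footnote above.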