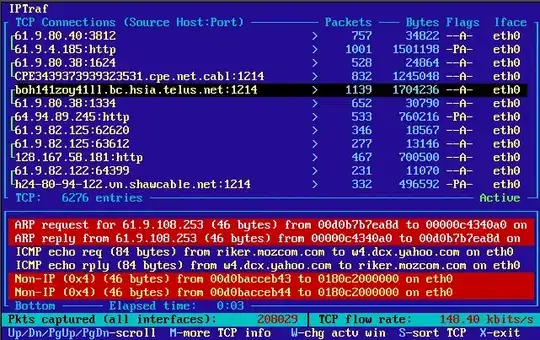

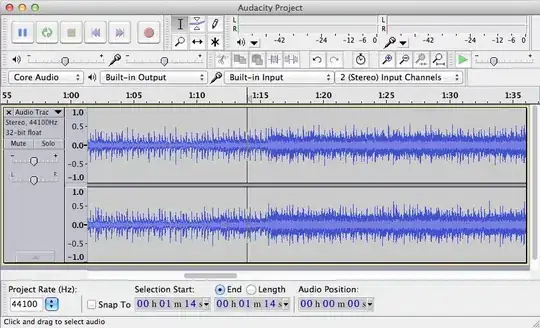

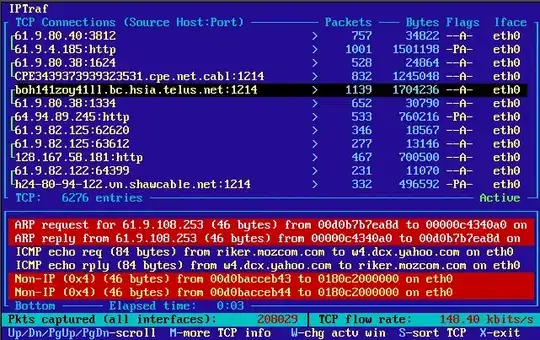

If I understand you properly, you want to extract the blue ECG plot while removing the text and axes. A good way to do that is to examine the HSV colour space of the image. The HSV colour space is well suited to discerning colours the way humans do, and we can clearly see that there are two distinct colours in the image.

We can convert the image to HSV using rgb2hsv and examine the components separately. The hue component represents the dominant colour of the pixel, the saturation denotes its purity (how little white light is mixed into the colour), and the value represents the intensity or strength of the pixel.

Try visualizing each channel by doing:

    im = imread('https://i.stack.imgur.com/cFOSp.png'); % Read in your image
    hsv = rgb2hsv(im);                                  % Convert to HSV; each channel is in [0,1]

    figure;
    subplot(1,3,1); imshow(hsv(:,:,1)); title('Hue');
    subplot(1,3,2); imshow(hsv(:,:,2)); title('Saturation');
    subplot(1,3,3); imshow(hsv(:,:,3)); title('Value');

Hmm... well, the hue and saturation don't help us at all: they tell us that the dominant colour and the saturation are the same for both. What sets them apart is the value. If you take a look at the image on the right, we can tell them apart by the strength of the colour itself. What this tells us is that the "black" pixels are actually blue, but with almost no strength associated with them.
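To see why the value channel is the one that separates them, here's a small sketch that converts two hypothetical colours: a bright blue for the trace and a very dim blue standing in for the "black" pixels (an assumption based on what the value channel shows, not a measurement taken from your image). rgb2hsv also accepts an N-by-3 colormap, so each row is one colour:

```matlab
% Hypothetical colours, one per row, scaled from 0-255 to [0,1]:
% row 1 = bright blue (the ECG trace), row 2 = very dim blue ("black" text/axes)
rgb = [0 0 255; 0 0 30] / 255;

% rgb2hsv on an N-by-3 colormap returns one [H S V] row per colour
hsvRows = rgb2hsv(rgb);

% Expected rows: [0.6667 1 1] and [0.6667 1 0.1176]
% Hue and saturation match; only the value (3rd column) differs
disp(hsvRows)
```

Both colours have the same hue (2/3, i.e. 240 degrees) and full saturation, so only a threshold on the third column can tell them apart.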

We can actually use this to our advantage. Any pixel whose value is above a certain threshold is a pixel we want to keep.

Try setting a threshold... something like 0.75. MATLAB's rgb2hsv returns HSV values in the range [0,1], so:

    mask = hsv(:,:,3) > 0.75;   % Keep pixels whose value exceeds 0.75

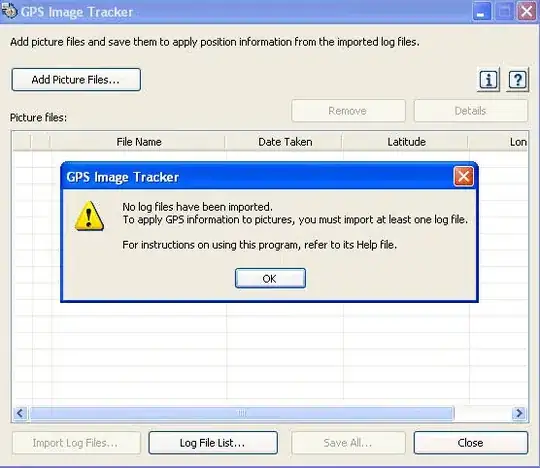

When we threshold the value component, this is what we get:

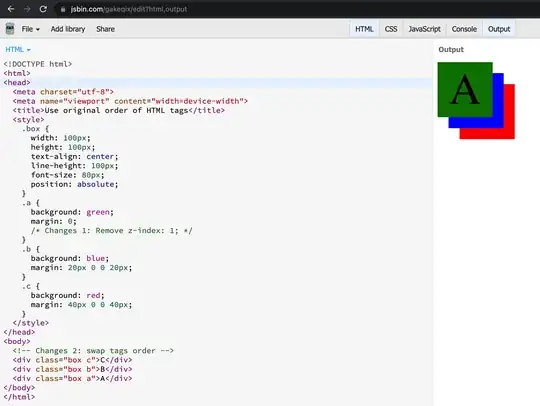
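The 0.75 above was picked by eye. As an alternative that I did not use here, Otsu's method (graythresh in the Image Processing Toolbox) can pick a threshold automatically from the histogram of the value channel; whether it lands near 0.75 on your image is something you'd have to check. A toy sketch on a hypothetical bimodal value channel:

```matlab
% Hypothetical value channel: 70 dim pixels (0.1) and 30 bright pixels (0.95)
V = [0.1 * ones(70, 1); 0.95 * ones(30, 1)];

% Otsu's method: the level in [0,1] that best separates the two histogram modes
level = graythresh(V);
maskOtsu = V > level;   % true for the bright pixels only
```

On the real image this would be level = graythresh(hsv(:,:,3)); followed by mask = hsv(:,:,3) > level;.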

There's obviously a bit of quantization noise... especially around the axes and text. What I'm going to do next is perform a morphological erosion to eliminate the quantization noise around each of the numbers and the axes. I'm going to use a fairly large structuring element to make sure this noise is removed. Using the Image Processing Toolbox:

    se = strel('square', 5);         % 5x5 square structuring element
    mask_erode = imerode(mask, se);  % Grow the dark (false) regions to swallow nearby noise

We get this:

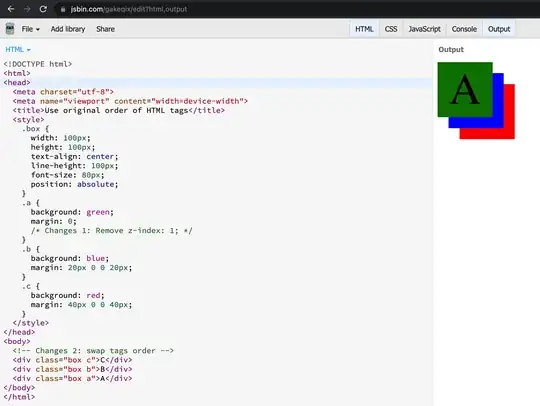
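Here's a toy sketch of what that erosion does, using a hypothetical 1-pixel-wide dark line in place of an axis. The eroded mask marks a 5-pixel-wide band as "remove", which is what swallows the noisy halo around the text and axes; the trade-off is that any part of the trace within 2 pixels of a dark pixel gets removed too.

```matlab
% Hypothetical 20x20 mask: everything passes the value threshold (true)
% except a 1-pixel-wide dark "axis" in column 10 (false)
mask = true(20, 20);
mask(:, 10) = false;

% Eroding with a 5x5 square grows every false (dark) region by 2 pixels on each side
eroded = imerode(mask, strel('square', 5));

% Columns now treated as "axis": 8 through 12
disp(find(~eroded(1, :)))
```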

Great, so what I'm going to do now is make a copy of your original image, then set every pixel that is black in the eroded mask above to white in the copy. All of the other pixels remain intact. This way, we remove the text and the axes seen in your image:

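    im_final = im;                             % Copy of the original image
    mask_final = repmat(mask_erode, [1 1 3]);  % Replicate the mask across R, G and B
    im_final(~mask_final) = 255;               % Set pixels outside the mask to white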

I need to replicate the mask in the third dimension because this is a colour image and I need to set each channel to 255 simultaneously in the same spatial locations.
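A tiny sketch on a hypothetical 2x2 image shows why the replication matters: with the replicated mask, all three channels turn white, whereas a plain 2-D mask is treated as a linear index into the first 4 elements and therefore only touches the red channel.

```matlab
% Hypothetical 2x2 RGB image; each channel holds distinct values
rgbToy = uint8(cat(3, [10 20; 30 40], [50 60; 70 80], [90 100; 110 120]));
keep = logical([1 0; 0 1]);   % 2-D mask: true = keep the pixel

% Correct: replicate the mask across the 3 channels before indexing
good = rgbToy;
good(~repmat(keep, [1 1 3])) = 255;

% Pitfall: the 2-D mask only indexes the first numel(keep) elements,
% i.e. the red channel, so green and blue are left unchanged
bad = rgbToy;
bad(~keep) = 255;
```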

When I do that, this is what I get:

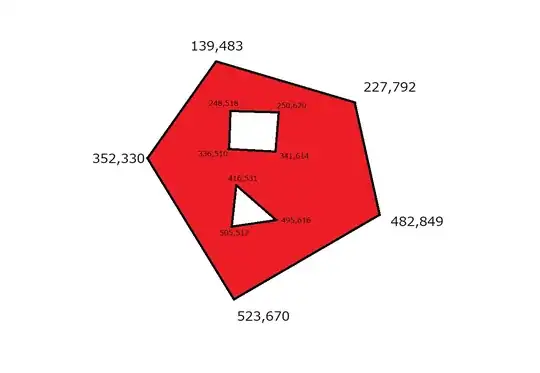

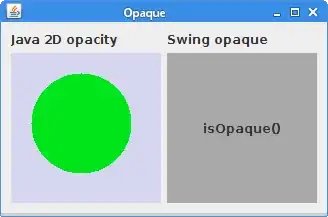

Now you'll notice that there are gaps in the graph... which is to be expected due to quantization noise. We can go further by converting this image to grayscale, thresholding it, and then joining the broken segments together with a morphological dilation. This is safe because we have already eliminated the axes and text. We can then use the result as a mask to index into the original image to obtain our final graph.

Something like this:

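    im2 = rgb2gray(im_final);          % Grayscale version of the cleaned image
    thresh = im2 < 200;                % Dark pixels = graph trace
    se = strel('line', 10, 90);        % Vertical line structuring element, length 10
    im_dilate = imdilate(thresh, se);  % Bridge vertical gaps in the trace

    mask2 = repmat(im_dilate, [1 1 3]);
    im_final_final = 255*ones(size(im), class(im));  % All-white canvas
    im_final_final(mask2) = im(mask2);               % Copy masked pixels from the original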

I convert the previous image (the one without the text and axes) to grayscale and threshold it, keeping the dark pixels below 200. I then perform a dilation with a vertical (90 degree) line structuring element to connect the segments that were originally disconnected. This thresholded and dilated image marks the pixels that we ultimately need to sample from the original image to get the graph data.

I then replicate this mask across the three channels, create a completely white image, and copy the original image's pixels into the white image wherever the mask is true.
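Here's a toy sketch of why a vertical line element bridges the gaps: a hypothetical binary trace with a 3-pixel break is dilated with the same strel('line', 10, 90), which stretches each pixel up and down without thickening the trace sideways.

```matlab
% Hypothetical 20x5 binary trace: a vertical segment in column 3
% with a 3-pixel gap (rows 9-11)
segment = false(20, 5);
segment([2:8 12:18], 3) = true;

% Dilating with a vertical (90 degree) line stretches every pixel up and down,
% which bridges the gap while leaving the neighbouring columns untouched
bridged = imdilate(segment, strel('line', 10, 90));
```

Note that the ends of each segment are lengthened as well, by roughly half the line length. In the final step that's harmless wherever the original image is white, since those pixels are copied back as white.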

This is our final image:

Very nice! I had to do all of that image processing because your image already contains quantization noise, which makes it harder to extract the graph completely. Ander Biguri explains colour quantization noise in more detail in his answer, so certainly check out his post.

However, as a qualitative measure, we can subtract this image from the original image and see what remains:

    imshow(rgb2gray(abs(double(im) - double(im_final_final))));

We get:

So it looks like the axes and text were removed cleanly, but some traces of the graph in the original image were not captured, and that makes sense: it all comes down to selecting proper thresholds to get the graph data. There are some trouble spots near the beginning of the graph, probably due to the morphological processing I did. The quantization noise makes this image quite tricky, so it's going to be very difficult to get a perfect result. Also, these thresholds are unfortunately all heuristic, so play around with them until you get a result you're happy with.
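To make that experimenting easier, here's a sketch that strings the steps above together with the four heuristics gathered as variables at the top. The 60x60 image is hypothetical and only there so the script runs without your PNG: a black "axis" plus a blue trace whose middle section is dim enough to fail the value threshold, so the gap-bridging step has something to repair.

```matlab
% --- Tunable heuristics (the values used in the steps above) ---
valThresh  = 0.75;  % keep pixels whose HSV value exceeds this
erodeSize  = 5;     % side length of the square used to erode the mask
grayThresh = 200;   % grayscale level below which a pixel counts as trace
lineLen    = 10;    % length of the vertical line used to bridge gaps

% --- Hypothetical synthetic image so the script runs on its own ---
% Replace with: im = imread('https://i.stack.imgur.com/cFOSp.png');
im = 255 * ones(60, 60, 3, 'uint8');  % white background
im(:, 5, :) = 0;                      % black "axis" in column 5
im(10:50, 30, 1:2) = 0;               % blue trace [0 0 255] in column 30
im(26:28, 30, 3) = 120;               % dim section [0 0 120], value below 0.75

% Step 1: value threshold + erosion removes dark text/axes and their halo
hsv = rgb2hsv(im);
mask = imerode(hsv(:,:,3) > valThresh, strel('square', erodeSize));
im_final = im;
im_final(~repmat(mask, [1 1 3])) = 255;

% Step 2: grayscale threshold + vertical dilation bridges gaps in the trace
traceMask = imdilate(rgb2gray(im_final) < grayThresh, strel('line', lineLen, 90));

% Step 3: copy only the masked pixels from the original onto a white canvas
mask2 = repmat(traceMask, [1 1 3]);
im_final_final = 255 * ones(size(im), class(im));
im_final_final(mask2) = im(mask2);
```

Swap in your own im, change one value at a time, and check each result with imshow(im_final_final).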

Good luck!