I have a very strange problem. I'm using the following code to detect one image inside another (Java, OpenCV):

UPDATED CODE:

```java
// OpenCV 2.4.x Java API (Highgui, Core.line, org.opencv.features2d.DMatch/KeyPoint)
import java.util.LinkedList;
import java.util.List;

import org.opencv.calib3d.Calib3d;
import org.opencv.core.Core;
import org.opencv.core.CvType;
import org.opencv.core.Mat;
import org.opencv.core.MatOfDMatch;
import org.opencv.core.MatOfKeyPoint;
import org.opencv.core.MatOfPoint2f;
import org.opencv.core.Point;
import org.opencv.core.Scalar;
import org.opencv.core.Size;
import org.opencv.features2d.DMatch;
import org.opencv.features2d.DescriptorExtractor;
import org.opencv.features2d.DescriptorMatcher;
import org.opencv.features2d.FeatureDetector;
import org.opencv.features2d.Features2d;
import org.opencv.features2d.KeyPoint;
import org.opencv.highgui.Highgui;
import org.opencv.imgproc.Imgproc;

// run, panel1 and frame1 are fields of the enclosing class (not shown)
public void startRecognition() {
    // load images; I want to find img_object in img_scene
    Mat img_scene = Highgui.imread("D:/opencvws/ImageRecognition/src/main/resources/ascene.jpg");
    Mat img_object = Highgui.imread("D:/opencvws/ImageRecognition/src/main/resources/aobj1.jpg");

    run++;
    System.out.println("RUN NO: " + run);

    // init detector
    FeatureDetector detector = FeatureDetector.create(FeatureDetector.SURF);

    // keypoint detection for both images (keypoints_scene for img_scene, keypoints_object for img_object)
    MatOfKeyPoint keypoints_object = new MatOfKeyPoint();
    MatOfKeyPoint keypoints_scene = new MatOfKeyPoint();
    detector.detect(img_object, keypoints_object);
    detector.detect(img_scene, keypoints_scene);
    System.out.println("OK: " + keypoints_object.total());
    System.out.println("SK: " + keypoints_scene.total());

    // init extractor
    DescriptorExtractor extractor = DescriptorExtractor.create(DescriptorExtractor.SURF); // 2 = SURF
    Mat descriptor_object = new Mat();
    Mat descriptor_scene = new Mat();

    // compute descriptors
    extractor.compute(img_object, keypoints_object, descriptor_object);
    extractor.compute(img_scene, keypoints_scene, descriptor_scene);

    // init matcher
    DescriptorMatcher matcher = DescriptorMatcher.create(DescriptorMatcher.FLANNBASED); // 1 = FLANNBASED
    matcher.clear();
    MatOfDMatch matches = new MatOfDMatch();

    // match both descriptors (one match per object descriptor)
    matcher.match(descriptor_object, descriptor_scene, matches);
    List<DMatch> matchesList = matches.toList();

    // calc min/max distance
    double max_dist = 0.0;
    double min_dist = Double.MAX_VALUE;
    for (DMatch m : matchesList) {
        double dist = m.distance;
        if (dist < min_dist) min_dist = dist;
        if (dist > max_dist) max_dist = dist;
    }

    // filter good matches: good match = distance < 2*min_dist ==> put them in a list
    LinkedList<DMatch> good_matches = new LinkedList<DMatch>();
    for (DMatch m : matchesList) {
        if (m.distance < 2 * min_dist) {
            good_matches.addLast(m);
        }
    }

    // List -> Mat
    MatOfDMatch gm = new MatOfDMatch();
    gm.fromList(good_matches);

    // mat for resulting image
    Mat img_matches = new Mat();

    // filter keypoints (use only good matches); collect them in Lists first, then convert to Mats
    LinkedList<Point> objList = new LinkedList<Point>();
    LinkedList<Point> sceneList = new LinkedList<Point>();
    List<KeyPoint> keypoints_objectList = keypoints_object.toList();
    List<KeyPoint> keypoints_sceneList = keypoints_scene.toList();
    for (DMatch m : good_matches) {
        objList.addLast(keypoints_objectList.get(m.queryIdx).pt);
        sceneList.addLast(keypoints_sceneList.get(m.trainIdx).pt);
    }
    MatOfPoint2f obj = new MatOfPoint2f();
    obj.fromList(objList);
    MatOfPoint2f scene = new MatOfPoint2f();
    scene.fromList(sceneList);

    // calc transformation matrix; method = RANSAC (8), ransacReprojThreshold = 3
    Mat hg = Calib3d.findHomography(obj, scene, Calib3d.RANSAC, 3);

    // init corners
    Mat obj_corners = new Mat(4, 1, CvType.CV_32FC2);
    Mat scene_corners = new Mat(4, 1, CvType.CV_32FC2);

    // object corners: top-left, top-right, bottom-right, bottom-left
    obj_corners.put(0, 0, new double[] {0, 0});
    obj_corners.put(1, 0, new double[] {img_object.cols(), 0});
    obj_corners.put(2, 0, new double[] {img_object.cols(), img_object.rows()});
    obj_corners.put(3, 0, new double[] {0, img_object.rows()});

    // transform object corners into img_scene coordinates (stored in scene_corners)
    Core.perspectiveTransform(obj_corners, scene_corners, hg);

    // shift the points right by img_object's width to fit the side-by-side matching image
    Point p1 = new Point(scene_corners.get(0, 0)[0] + img_object.cols(), scene_corners.get(0, 0)[1]);
    Point p2 = new Point(scene_corners.get(1, 0)[0] + img_object.cols(), scene_corners.get(1, 0)[1]);
    Point p3 = new Point(scene_corners.get(2, 0)[0] + img_object.cols(), scene_corners.get(2, 0)[1]);
    Point p4 = new Point(scene_corners.get(3, 0)[0] + img_object.cols(), scene_corners.get(3, 0)[1]);

    // create the matching image
    Features2d.drawMatches(img_object, keypoints_object, img_scene, keypoints_scene, gm, img_matches);

    // draw the object outline onto the matching image
    Core.line(img_matches, p1, p2, new Scalar(0, 255, 0), 4);
    Core.line(img_matches, p2, p3, new Scalar(0, 255, 0), 4);
    Core.line(img_matches, p3, p4, new Scalar(0, 255, 0), 4);
    Core.line(img_matches, p4, p1, new Scalar(0, 255, 0), 4);

    // resize for display
    Size sz = new Size(1200, 1000);
    Imgproc.resize(img_matches, img_matches, sz);
    panel1.setimagewithMat(img_matches);
    frame1.repaint();

    // tried to prevent any old references from mixing up the next calculation
    matcher.clear();
    img_matches = new Mat();
    img_object = new Mat();
    img_scene = new Mat();
    keypoints_object = new MatOfKeyPoint();
    keypoints_scene = new MatOfKeyPoint();
    hg = new Mat();
}
```
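For reference, the filtering step keeps only the matches whose distance is below twice the smallest distance. A minimal pure-Java sketch of that rule, independent of OpenCV; `GoodMatchFilter` and its `Match` class are hypothetical stand-ins for `DMatch`, holding only the three fields the code above uses:

```java
import java.util.ArrayList;
import java.util.List;

final class GoodMatchFilter {

    // Hypothetical stand-in for OpenCV's DMatch (only the fields used here).
    static final class Match {
        final int queryIdx;
        final int trainIdx;
        final float distance;

        Match(int queryIdx, int trainIdx, float distance) {
            this.queryIdx = queryIdx;
            this.trainIdx = trainIdx;
            this.distance = distance;
        }
    }

    // Keeps matches with distance < factor * (smallest distance),
    // the same "distance < 2*min_dist" rule as in startRecognition().
    static List<Match> filterGoodMatches(List<Match> matches, double factor) {
        double minDist = Double.MAX_VALUE;
        for (Match m : matches) {
            minDist = Math.min(minDist, m.distance);
        }
        List<Match> good = new ArrayList<>();
        for (Match m : matches) {
            if (m.distance < factor * minDist) {
                good.add(m);
            }
        }
        return good;
    }

    public static void main(String[] args) {
        List<Match> matches = List.of(
                new Match(0, 5, 0.10f),
                new Match(1, 2, 0.15f),
                new Match(2, 9, 0.25f),
                new Match(3, 1, 0.19f));
        // smallest distance is 0.10, so the cut-off is 0.20: matches 0, 1 and 3 survive
        System.out.println(filterGoodMatches(matches, 2.0).size()); // prints 3
    }
}
```

The cut-off depends entirely on the single best match, so any change in `min_dist` changes which points reach `findHomography`. Also note that if the best distance is exactly 0, the strict `<` rejects every match.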

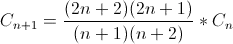

If I call startRecognition() twice in my running application (the OpenCV library is loaded once at startup), I get the same result for both recognitions. On the third try it detects different keypoints and computes a different transformation matrix (hg). Examples:

After the 2nd try:

After the 3rd try:
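The green box in these screenshots is just the four object corners pushed through hg, so a different hg moves the box. A minimal pure-Java sketch of that mapping, assuming a 3×3 row-major homography; `CornerTransform` is a hypothetical name, and the formula is the one `Core.perspectiveTransform` applies to 2-D points:

```java
final class CornerTransform {

    // Maps (x, y) through a 3x3 homography h:
    // x' = (h00*x + h01*y + h02) / w, y' = (h10*x + h11*y + h12) / w,
    // with w = h20*x + h21*y + h22.
    static double[] apply(double[][] h, double x, double y) {
        double w = h[2][0] * x + h[2][1] * y + h[2][2];
        double xp = (h[0][0] * x + h[0][1] * y + h[0][2]) / w;
        double yp = (h[1][0] * x + h[1][1] * y + h[1][2]) / w;
        return new double[] {xp, yp};
    }

    // Corners of a cols x rows object image in the same order as obj_corners
    // (top-left, top-right, bottom-right, bottom-left), mapped into the scene.
    static double[][] sceneCorners(double[][] h, int cols, int rows) {
        double[][] corners = {{0, 0}, {cols, 0}, {cols, rows}, {0, rows}};
        double[][] out = new double[4][];
        for (int i = 0; i < 4; i++) {
            out[i] = apply(h, corners[i][0], corners[i][1]);
        }
        return out;
    }

    public static void main(String[] args) {
        // A pure translation by (50, 20): every corner shifts by the same amount.
        double[][] h = {{1, 0, 50}, {0, 1, 20}, {0, 0, 1}};
        for (double[] p : sceneCorners(h, 100, 80)) {
            // prints 50.0, 20.0 / 150.0, 20.0 / 150.0, 100.0 / 50.0, 100.0 (one per line)
            System.out.println(p[0] + ", " + p[1]);
        }
    }
}
```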

Can anyone explain why, or tell me how to prevent it? When I restart the whole program, it again detects correctly twice and then the results start to vary. After several more tries it computes the correct hg again (the same one as in the first and second try). I can't figure out why this is happening.
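One way to narrow down which step varies is to replace the FLANN-based matcher, which performs approximate nearest-neighbour search, with an exact one (in the OpenCV 2.4 Java API, `DescriptorMatcher.create(DescriptorMatcher.BRUTEFORCE)`) and check whether the results still change between runs. The sketch below is a pure-Java illustration of exact L2 nearest-neighbour matching, not OpenCV's implementation; `BruteForceMatcher` is a hypothetical name, and descriptors are assumed to be plain `float[]` rows:

```java
final class BruteForceMatcher {

    // For each query descriptor, returns the index of the train descriptor with
    // the smallest squared Euclidean (L2) distance. Exhaustive search, so the
    // same inputs always give the same result.
    static int[] match(float[][] query, float[][] train) {
        int[] best = new int[query.length];
        for (int q = 0; q < query.length; q++) {
            double bestDist = Double.MAX_VALUE;
            int bestIdx = -1;
            for (int t = 0; t < train.length; t++) {
                double sum = 0;
                for (int k = 0; k < query[q].length; k++) {
                    double d = query[q][k] - train[t][k];
                    sum += d * d;
                }
                if (sum < bestDist) { // strict '<': ties go to the lowest index
                    bestDist = sum;
                    bestIdx = t;
                }
            }
            best[q] = bestIdx;
        }
        return best;
    }

    public static void main(String[] args) {
        float[][] object = {{0f, 0f}, {1f, 1f}};
        float[][] scene = {{1f, 0.9f}, {5f, 5f}, {0.1f, 0f}};
        int[] idx = match(object, scene);
        System.out.println(idx[0] + " " + idx[1]); // prints 2 0
    }
}
```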

Thanks in advance

gemorra