first of all, I have to say I'm new to the field of computervision and I'm currently facing a problem, I tried to solve with opencv (Java Wrapper) without success.

Basicly I have a picture of a part from a Model taken by a camera (different angles, resoultions, rotations...) and I need to find the position of that part in the model.

Example Picture:

Model Picture:

Model Picture:

So one question is: Where should I start/which algorithm should I use?

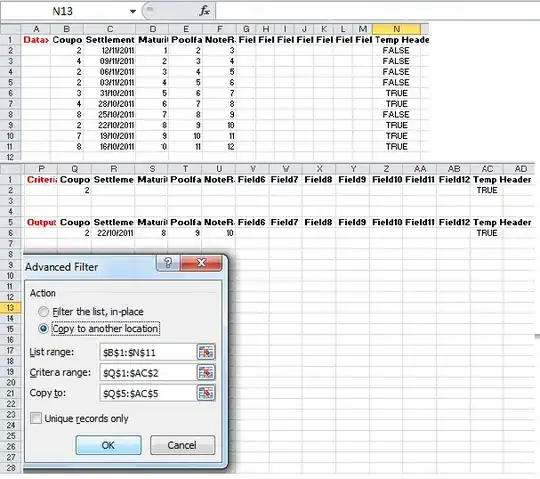

My first try was to use KeyPoint Matching with SURF as Detector, Descriptor and BF as Matcher. It worked for about 2 pcitures out of 10. I used the default parameters and tried other detectors, without any improvements. (Maybe it's a question of the right parameters. But how to find out the right parameteres combined with the right algorithm?...) Two examples:

My second try was to use the color to differentiate the certain elements in the model and to compare the structure with the model itself (In addition to the picture of the model I also have and xml representation of the model..). Right now I extraxted the color red out of the image, adjusted h,s,v values manually to get the best detection for about 4 pictures, which fails for other pictures.

Two examples:

I also tried to use edge detection (canny, gray, with histogramm Equalization) to detect geometric structures. For some results I could imagine, that it will work, but using the same canny parameters for other pictures "fails". Two examples:

As I said I'm not familiar with computervision and just tried out some algorithms. I'm facing the problem, that I don't know which combination of algorithms and techniques is the best and in addition to that which parameters should I use. Testing it manually seems to be impossible.

Thanks in advance

gemorra