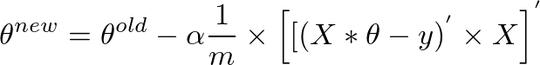

I implemented a gradient descent algorithm to minimize a cost function in order to gain a hypothesis for determining whether an image has a good quality. I did that in Octave. The idea is somehow based on the algorithm from the machine learning class by Andrew Ng

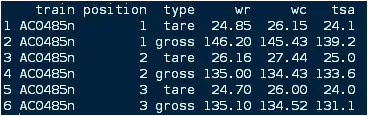

Therefore I have 880 values "y" that contains values from 0.5 to ~12. And I have 880 values from 50 to 300 in "X" that should predict the image's quality.

Sadly the algorithm seems to fail, after some iterations the value for theta is so small, that theta0 and theta1 become "NaN". And my linear regression curve has strange values...

here is the code for the gradient descent algorithm:

(theta = zeros(2, 1);, alpha= 0.01, iterations=1500)

function [theta, J_history] = gradientDescent(X, y, theta, alpha, num_iters)

m = length(y); % number of training examples

J_history = zeros(num_iters, 1);

for iter = 1:num_iters

tmp_j1=0;

for i=1:m,

tmp_j1 = tmp_j1+ ((theta (1,1) + theta (2,1)*X(i,2)) - y(i));

end

tmp_j2=0;

for i=1:m,

tmp_j2 = tmp_j2+ (((theta (1,1) + theta (2,1)*X(i,2)) - y(i)) *X(i,2));

end

tmp1= theta(1,1) - (alpha * ((1/m) * tmp_j1))

tmp2= theta(2,1) - (alpha * ((1/m) * tmp_j2))

theta(1,1)=tmp1

theta(2,1)=tmp2

% ============================================================

% Save the cost J in every iteration

J_history(iter) = computeCost(X, y, theta);

end

end

And here is the computation for the costfunction:

function J = computeCost(X, y, theta) %

m = length(y); % number of training examples

J = 0;

tmp=0;

for i=1:m,

tmp = tmp+ (theta (1,1) + theta (2,1)*X(i,2) - y(i))^2; %differenzberechnung

end

J= (1/(2*m)) * tmp

end