I am taking the Machine Learning class on Coursera. Machine learning is a pretty new area for me. In the first programming exercise I am having some difficulty with the gradient descent algorithm. If anyone can help me, I would appreciate it.

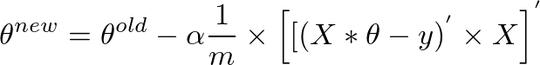

Here are the instructions for updating the thetas:

"You will implement gradient descent in the file gradientDescent.m. The loop structure has been written for you, and you only need to supply the updates to θ within each iteration."

    function [theta, J_history] = gradientDescent(X, y, theta, alpha, num_iters)
    %GRADIENTDESCENT Performs gradient descent to learn theta
    %   theta = GRADIENTDESENT(X, y, theta, alpha, num_iters) updates theta by
    %   taking num_iters gradient steps with learning rate alpha

    % Initialize some useful values
    m = length(y); % number of training examples
    J_history = zeros(num_iters, 1);

    for iter = 1:num_iters

        % ====================== YOUR CODE HERE ======================
        % Instructions: Perform a single gradient step on the parameter vector
        %               theta.
        %
        % Hint: While debugging, it can be useful to print out the values
        %       of the cost function (computeCost) and gradient here.
        %
        % ============================================================

        % Save the cost J in every iteration
        J_history(iter) = computeCost(X, y, theta);

    end

    end

Here is what I did to update the thetas simultaneously:

    temp0 = theta(1,1) - (alpha/m)*sum((X*theta-y));
    temp1 = theta(2,1) - (alpha/m)*sum((X*theta-y).*X);
    theta(1,1) = temp0;
    theta(2,1) = temp1;

I get an error when I run this code. Can anyone help me, please?
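One likely cause, assuming `X` is the m-by-2 design matrix with a leading column of ones and `theta` is 2-by-1 (neither is stated explicitly above): in the `temp1` line, `(X*theta-y)` is m-by-1 while `X` is m-by-2, so `.*X` either fails on a size mismatch or broadcasts to an m-by-2 result whose column sums cannot be assigned to the scalar `theta(2,1)`, depending on the Octave/MATLAB version. A minimal sketch of a single simultaneous update that multiplies by the matching column of `X` instead is shown here. The toy data and the names `err`, `theta_start`, and `theta_vec` are made up for illustration.

```octave
% Toy data (hypothetical): first column of X is the intercept term.
X = [1 1; 1 2; 1 3];
y = [1; 2; 3];
theta = [0; 0];
alpha = 0.1;
m = length(y);            % number of training examples

theta_start = theta;      % kept only to compare with the vectorized form

% Compute the residuals once, before either theta is changed,
% so that both updates use the same (old) theta.
err = X * theta - y;      % m-by-1

temp0 = theta(1) - (alpha / m) * sum(err .* X(:, 1));
temp1 = theta(2) - (alpha / m) * sum(err .* X(:, 2));
theta = [temp0; temp1];

% Equivalent vectorized step: X' * err sums err against every column of X.
theta_vec = theta_start - (alpha / m) * (X' * (X * theta_start - y));
```

Inside `gradientDescent.m` only the lines from `err = ...` through `theta = [temp0; temp1];` (or the single vectorized line) would go in the "YOUR CODE HERE" section. The vectorized form also works unchanged when `X` has more than two columns.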