This is almost certainly not what your interviewers were looking for, but I'd've proposed it just to see what they said in response:

I'm assuming that all cards are the same size and strictly rectangular with no holes, but that they are placed at random (x, y) positions and at random orientations theta. Each card is therefore characterized by a triple (x, y, theta); equivalently, you have its four corner locations. With this information, a Monte Carlo analysis is fairly simple.

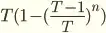

Generate a number of points uniformly at random on the surface of the table and determine, using the list of cards, whether each point is covered by any card. If yes, keep it; if not, throw it out. The ratio of kept points to total points, multiplied by the area of the table, is your estimate of the area covered by the cards.
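The sampling step might look something like the sketch below. It rests on assumptions the question doesn't state: the table is the rectangle from (0, 0) to (`table_w`, `table_h`), each card's (x, y) is its centre, and theta is in radians. The function names are just for illustration.

```python
import math
import random

def point_in_card(px, py, card, w, h):
    """True if (px, py) lies inside a w-by-h card given as (x, y, theta).

    Assumes (x, y) is the card's centre and theta is in radians.
    """
    x, y, theta = card
    dx, dy = px - x, py - y
    # Rotate the offset by -theta so the card becomes axis-aligned.
    c, s = math.cos(theta), math.sin(theta)
    u = dx * c + dy * s
    v = -dx * s + dy * c
    return abs(u) <= w / 2 and abs(v) <= h / 2

def estimate_covered_area(cards, w, h, table_w, table_h,
                          n_points=100_000, rng=None):
    """Monte Carlo estimate of the table area covered by at least one card."""
    rng = rng or random.Random()
    hits = 0
    for _ in range(n_points):
        px = rng.uniform(0, table_w)
        py = rng.uniform(0, table_h)
        # O(n) check per point: stop at the first card that covers it.
        if any(point_in_card(px, py, card, w, h) for card in cards):
            hits += 1
    # Fraction of kept points, scaled by the table's area.
    return table_w * table_h * hits / n_points
```

Because a point is counted once no matter how many cards cover it, overlapping cards are handled without any extra work.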

Obviously, you can test each point in O(n), where n is the number of cards. However, there is a slick little technique that I think applies here and should speed things up. You can divide your table top into a grid with an appropriate cell size (related to the size of the cards) and pre-process the cards to figure out which grid cells each one could possibly touch. (You can over-estimate by treating each card as a circular disk whose diameter is the card's diagonal.) Now build a hash table whose keys are grid cells and whose values are the lists of cards that could overlap each cell. (A card will usually appear in several cells.)

Now every time you need to include or exclude a point, you don't need to check each card, but only the pre-processed cards that could possibly be in your point's grid location.
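Here's a rough sketch of the grid lookup, again assuming (x, y) is the card centre and that cells are squares of side `cell`. `build_grid` and `is_covered` are names I made up for illustration. It over-estimates a little further than the disk by using the disk's bounding square, which is harmless because the exact test still runs on every candidate.

```python
import math
from collections import defaultdict

def point_in_card(px, py, card, w, h):
    """Exact test: is (px, py) inside the w-by-h card (x, y, theta)?"""
    x, y, theta = card
    dx, dy = px - x, py - y
    c, s = math.cos(theta), math.sin(theta)
    return abs(dx * c + dy * s) <= w / 2 and abs(-dx * s + dy * c) <= h / 2

def build_grid(cards, w, h, cell):
    """Map (col, row) -> cards whose bounding disk could touch that cell."""
    r = math.hypot(w, h) / 2  # half the diagonal = bounding-disk radius
    grid = defaultdict(list)
    for card in cards:
        x, y, _theta = card
        # Every cell touched by the disk's bounding square gets the card.
        for col in range(math.floor((x - r) / cell),
                         math.floor((x + r) / cell) + 1):
            for row in range(math.floor((y - r) / cell),
                             math.floor((y + r) / cell) + 1):
                grid[(col, row)].append(card)
    return grid

def is_covered(grid, px, py, w, h, cell):
    """Check only the cards registered in the point's own cell."""
    key = (math.floor(px / cell), math.floor(py / cell))
    # .get avoids creating empty entries for unoccupied cells.
    return any(point_in_card(px, py, card, w, h)
               for card in grid.get(key, ()))
```

A cell size on the order of the card's diagonal keeps each card in only a handful of cells while keeping the per-cell lists short.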

There's a lot to be said for this method:

- You can pretty easily change it up to work with non-rectangular cards, esp if they're convex

- You can probably change it up to work with differently sized or shaped cards, if you have to (and in that case, the geometry really gets annoying)

- If you're interviewing at a place that does scientific or engineering work, they'll love it

- It parallelizes really well

- It's so cool!!

On the other hand:

- It's an approximation technique (but you can run to any precision you like, since the error shrinks roughly as 1/sqrt(N) for N sample points!)

- You're in the land of expected runtimes, not deterministic runtimes

- Someone might actually ask you detailed questions about Monte Carlo

- If they're not familiar with Monte Carlo, they might think you're making stuff up
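On the precision point: each sample is a hit-or-miss trial, so the usual binomial standard error applies. A minimal sketch, with a function name of my own invention:

```python
import math

def area_standard_error(hits, n_points, table_area):
    """Approximate standard error of the Monte Carlo area estimate.

    Treats each sample as an independent hit-or-miss trial.
    """
    p = hits / n_points
    return table_area * math.sqrt(p * (1 - p) / n_points)
```

The practical upshot is that halving the error costs four times as many points.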

I wish I could take credit for this idea, but alas, I picked it up from a paper calculating surface areas of proteins based on the position and sizes of the atoms in the proteins. (Same basic idea, except now we had a 3D grid in 3-space, and the cards really were disks. We'd go through and for each atom, generate a bunch of points on its surface and see if they were or were not interior to any other atoms.)

EDIT: It occurs to me that the original problem stipulates that the total table area is much larger than the total card area. In this case, an appropriate cell size means that a majority of the grid cells must be unoccupied. Once your hash table is built, you can also pre-process the grid cells and eliminate the unoccupied ones entirely, generating points only inside possibly occupied cells. (Basically, perform individual MC estimates on each potentially occupied cell and scale by the area of those cells rather than by the area of the whole table.)
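A sketch of that refinement, under the same assumptions as before (card centre at (x, y), square cells of side `cell`, made-up function names). Instead of a separate estimate per cell, it picks an occupied cell uniformly at random for each point. The estimate is the same because all cells have equal area.

```python
import math
import random
from collections import defaultdict

def point_in_card(px, py, card, w, h):
    """Exact test: is (px, py) inside the w-by-h card (x, y, theta)?"""
    x, y, theta = card
    dx, dy = px - x, py - y
    c, s = math.cos(theta), math.sin(theta)
    return abs(dx * c + dy * s) <= w / 2 and abs(-dx * s + dy * c) <= h / 2

def estimate_area_occupied_cells(cards, w, h, cell,
                                 n_points=100_000, rng=None):
    """Estimate covered area by sampling only cells that may hold a card."""
    rng = rng or random.Random()
    r = math.hypot(w, h) / 2  # bounding-disk radius
    grid = defaultdict(list)
    for card in cards:
        x, y, _theta = card
        for col in range(math.floor((x - r) / cell),
                         math.floor((x + r) / cell) + 1):
            for row in range(math.floor((y - r) / cell),
                             math.floor((y + r) / cell) + 1):
                grid[(col, row)].append(card)

    occupied = list(grid)  # only cells with at least one candidate card
    hits = 0
    for _ in range(n_points):
        col, row = rng.choice(occupied)
        # Uniform point inside the chosen cell.
        px = (col + rng.random()) * cell
        py = (row + rng.random()) * cell
        if any(point_in_card(px, py, c, w, h) for c in grid[(col, row)]):
            hits += 1
    # Scale by the total area of the occupied cells, not the whole table.
    return len(occupied) * cell * cell * hits / n_points
```

With a sparse table, far fewer points are wasted on empty space, so the same number of samples gives a tighter estimate.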