Template Matching Approach

Here is a simple matchTemplate solution, similar to the approach that Guy Sirton mentions.

Template matching will work as long as your target doesn't change much in scale or rotation, because matchTemplate compares the template against the image at one fixed size and orientation.
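To make the scale/rotation limitation concrete, here is a minimal plain C++ sketch of the kind of score a mean-subtracted correlation such as CV_TM_CCOEFF computes, assuming single-channel, row-major buffers. The names `Match`, `ccoeffAt` and `bestMatch` are made up for illustration and are not OpenCV functions; the real matchTemplate is far more optimized.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Match { int x; int y; double score; };

// Hypothetical helper, not an OpenCV call: mean-subtracted correlation between
// a single-channel template and the equally sized image window whose top-left
// corner is (x, y). Both buffers are row-major.
double ccoeffAt(const std::vector<double>& image, int imgW,
                const std::vector<double>& templ, int tW, int tH,
                int x, int y)
{
    const double n = static_cast<double>(tW) * tH;
    double meanT = 0.0, meanI = 0.0;
    for (int r = 0; r < tH; ++r)
        for (int c = 0; c < tW; ++c)
        {
            meanT += templ[r * tW + c];
            meanI += image[(y + r) * imgW + (x + c)];
        }
    meanT /= n;
    meanI /= n;

    // Pixels are compared one-to-one, which is why a resized or rotated
    // target no longer lines up with the template.
    double score = 0.0;
    for (int r = 0; r < tH; ++r)
        for (int c = 0; c < tW; ++c)
            score += (templ[r * tW + c] - meanT)
                   * (image[(y + r) * imgW + (x + c)] - meanI);
    return score;
}

// Slides the template over every position where it fits entirely inside the
// image, i.e. (imgW - tW + 1) x (imgH - tH + 1) positions, and keeps the best.
Match bestMatch(const std::vector<double>& image, int imgW, int imgH,
                const std::vector<double>& templ, int tW, int tH)
{
    assert(image.size() == static_cast<std::size_t>(imgW) * imgH);
    assert(templ.size() == static_cast<std::size_t>(tW) * tH);
    assert(tW <= imgW && tH <= imgH);

    Match best = { 0, 0, ccoeffAt(image, imgW, templ, tW, tH, 0, 0) };
    for (int y = 0; y <= imgH - tH; ++y)
        for (int x = 0; x <= imgW - tW; ++x)
        {
            const double s = ccoeffAt(image, imgW, templ, tW, tH, x, y);
            if (s > best.score)
            {
                best.x = x;
                best.y = y;
                best.score = s;
            }
        }
    return best;
}
```

With a 3x3 plus-shaped template, a 5x5 image containing the same plus gives its highest score at the true position, while the same image with the plus rotated 45 degrees into an X gives a negative score there.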

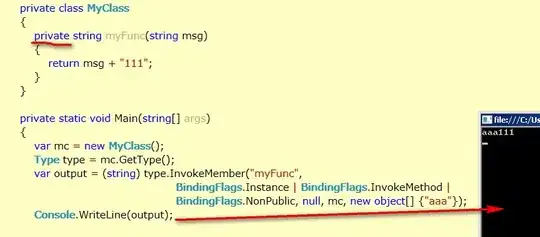

Here is the template that I used:

Here is the code I used to detect several of the unobstructed crosses:

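    #include <opencv2/core/core.hpp>
    #include <opencv2/highgui/highgui.hpp>
    #include <opencv2/imgproc/imgproc.hpp>
    #include <iostream>
    #include <string>
    #include <vector>

    using namespace cv;
    using namespace std;

    // Written against the OpenCV 2.x API. In OpenCV 3 and later the CV_-prefixed
    // constants are spelled TM_CCOEFF, RETR_EXTERNAL and CHAIN_APPROX_SIMPLE.
    int main(int argc, char* argv[])
    {
        string inputName = "crosses.jpg";
        string outputName = "crosses_detect.png";

        Mat img = imread(inputName, 1);
        Mat templ = imread("crosses-template.jpg", 1);
        if (img.empty() || templ.empty())
        {
            cerr << "Could not load the input image or the template" << endl;
            return 1;
        }

        // One score per position where the template fits entirely inside the image.
        int resultCols = img.cols - templ.cols + 1;
        int resultRows = img.rows - templ.rows + 1;
        Mat result(resultRows, resultCols, CV_32FC1); // Mat takes (rows, cols, type)

        matchTemplate(img, templ, result, CV_TM_CCOEFF);

        // Rescale the scores to 0..255 so that a fixed threshold can be applied.
        normalize(result, result, 0, 255.0, NORM_MINMAX, CV_8UC1, Mat());

        // Keep only the strongest peaks.
        Mat resultMask;
        threshold(result, resultMask, 180.0, 255.0, THRESH_BINARY);

        // findContours may modify its input, so work on a copy. The offset moves
        // each contour from the template's top-left corner to the template center.
        Mat temp = resultMask.clone();
        vector< vector<Point> > contours;
        findContours(temp, contours, CV_RETR_EXTERNAL, CV_CHAIN_APPROX_SIMPLE,
                     Point(templ.cols / 2, templ.rows / 2));

        vector< vector<Point> >::iterator i;
        for (i = contours.begin(); i != contours.end(); i++)
        {
            Moments m = moments(*i, false);

            // A zero-area contour (e.g. a single pixel) has m00 == 0, so fall
            // back to its first point instead of dividing by zero.
            Point2f centroid = (m.m00 != 0.0)
                ? Point2f(static_cast<float>(m.m10 / m.m00),
                          static_cast<float>(m.m01 / m.m00))
                : Point2f(static_cast<float>((*i)[0].x),
                          static_cast<float>((*i)[0].y));

            circle(img, centroid, 3, Scalar(0, 255, 0), 3);
        }

        imshow("img", img);
        imshow("results", result);
        imshow("resultMask", resultMask);
        imwrite(outputName, img);
        waitKey(0);

        return 0;
    }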

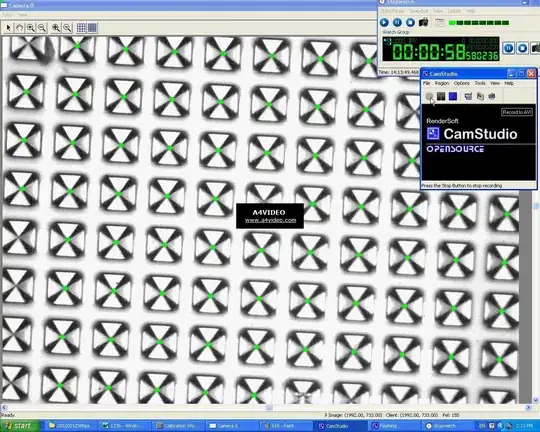

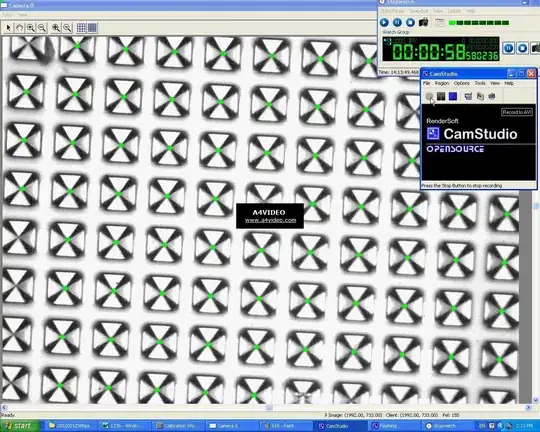

This results in this detection image:

This code thresholds the match result to separate the cross peaks from the rest of the image, and then finds the contour of each peak. Finally, it computes the centroid of each contour to locate the center of the cross.
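As a rough illustration of the threshold-then-centroid step, here is a plain C++ sketch under a simplifying assumption: the thresholded result contains a single peak blob, so a plain pixel average stands in for findContours plus moments. `Center` and `peakCenter` are hypothetical names, not OpenCV API.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Center { double x; double y; };

// Hypothetical helper: averages the coordinates of every score strictly above
// `thresh` (the same comparison THRESH_BINARY uses) and shifts the result by
// half the template size, because a match score is stored at the position of
// the template's top-left corner. Assumes a single peak blob and a row-major
// 8-bit score buffer. Returns false when nothing exceeds the threshold.
bool peakCenter(const std::vector<unsigned char>& scores, int resW, int resH,
                unsigned char thresh, int tW, int tH, Center& out)
{
    assert(scores.size() == static_cast<std::size_t>(resW) * resH);

    double sumX = 0.0, sumY = 0.0;
    long count = 0;
    for (int y = 0; y < resH; ++y)
        for (int x = 0; x < resW; ++x)
            if (scores[y * resW + x] > thresh)
            {
                sumX += x;
                sumY += y;
                ++count;
            }

    if (count == 0)
        return false;

    // Integer halves, matching Point(templ.cols / 2, templ.rows / 2).
    out.x = sumX / count + tW / 2;
    out.y = sumY / count + tH / 2;
    return true;
}
```

For several crosses you would still need to separate the blobs first, which is what findContours does in the code above.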

Shape Detection Alternative

Here is an alternative approach using triangle detection. It doesn't seem as accurate as the matchTemplate approach, but it might be an alternative you could play with.

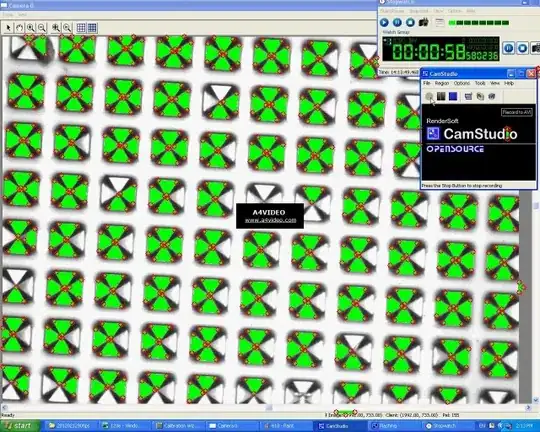

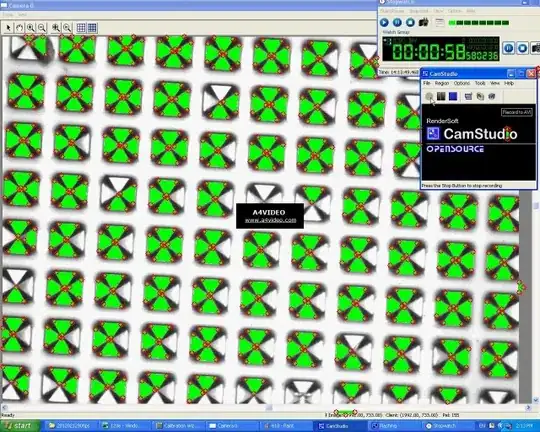

Using findContours and approxPolyDP, we detect all the triangles in the image, which results in the following:

Then I noticed that all the triangle vertices cluster near the cross centers, so I group nearby vertices into clusters and take each cluster's average as the cross center point, shown below:

Finally, here is the code that I used to do this:

    #include <opencv2/core/core.hpp>
    #include <opencv2/highgui/highgui.hpp>
    #include <opencv2/imgproc/imgproc.hpp>
    #include <algorithm>
    #include <cmath>
    #include <iostream>
    #include <iterator>
    #include <vector>

    using namespace cv;
    using namespace std;

    // Written against the OpenCV 2.x API. In OpenCV 3 and later the CV_-prefixed
    // constants are spelled COLOR_BGR2GRAY, RETR_LIST, CHAIN_APPROX_SIMPLE and FILLED.
    vector<Point> getAllTriangleVertices(Mat& img, const vector< vector<Point> >& contours);
    double euclideanDist(Point a, Point b);
    vector< vector<Point> > groupPointsWithinRadius(vector<Point>& points, double radius);
    void printPointVector(const vector<Point>& points);
    Point computeClusterAverage(const vector<Point>& cluster);

    int main(int argc, char* argv[])
    {
        Mat img = imread("crosses.jpg", 1);
        if (img.empty())
        {
            cerr << "Could not load crosses.jpg" << endl;
            return 1;
        }

        double resizeFactor = 0.5;
        resize(img, img, Size(0, 0), resizeFactor, resizeFactor);

        Mat momentImg = img.clone();

        // Binarize so that the triangle outlines show up as contours.
        Mat gray;
        cvtColor(img, gray, CV_BGR2GRAY);
        adaptiveThreshold(gray, gray, 255.0, ADAPTIVE_THRESH_MEAN_C, THRESH_BINARY, 19, 15);
        imshow("threshold", gray);
        waitKey();

        vector< vector<Point> > contours;
        findContours(gray, contours, CV_RETR_LIST, CV_CHAIN_APPROX_SIMPLE);

        // Collect the vertices of every contour that simplifies to a triangle.
        vector<Point> allTriangleVertices = getAllTriangleVertices(img, contours);

        imshow("img", img);
        imwrite("shape_detect.jpg", img);
        waitKey();

        printPointVector(allTriangleVertices);

        // The clustering radius is scaled with the image so that it stays
        // comparable across resize factors.
        vector< vector<Point> > clusters = groupPointsWithinRadius(allTriangleVertices, 10.0*resizeFactor);
        cout << "Number of clusters: " << clusters.size() << endl;

        // Each cluster of vertices marks one cross center.
        vector< vector<Point> >::iterator cluster;
        for(cluster = clusters.begin(); cluster != clusters.end(); ++cluster)
        {
            printPointVector(*cluster);

            Point clusterAvg = computeClusterAverage(*cluster);
            circle(momentImg, clusterAvg, 3, Scalar(0, 255, 0), CV_FILLED);
        }

        imshow("momentImg", momentImg);
        imwrite("centroids.jpg", momentImg);
        waitKey();

        return 0;
    }

    // Returns the vertices of all contours that approximate to a triangle, and
    // draws those triangles and their vertices onto img.
    vector<Point> getAllTriangleVertices(Mat& img, const vector< vector<Point> >& contours)
    {
        vector<Point> approxTriangle;
        vector<Point> allTriangleVertices;

        for(size_t i = 0; i < contours.size(); i++)
        {
            // Simplify the contour with a tolerance of 5% of its perimeter.
            approxPolyDP(contours[i], approxTriangle, arcLength(Mat(contours[i]), true)*0.05, true);

            if(approxTriangle.size() == 3)
            {
                copy(approxTriangle.begin(), approxTriangle.end(), back_inserter(allTriangleVertices));
                drawContours(img, contours, i, Scalar(0, 255, 0), CV_FILLED);

                vector<Point>::iterator vertex;
                for(vertex = approxTriangle.begin(); vertex != approxTriangle.end(); ++vertex)
                {
                    circle(img, *vertex, 3, Scalar(0, 0, 255), 1);
                }
            }
        }

        return allTriangleVertices;
    }

    double euclideanDist(Point a, Point b)
    {
        Point c = a - b;
        return std::sqrt(static_cast<double>(c.x*c.x + c.y*c.y));
    }

    // Greedily groups points that lie within radius of a seed point.
    // Note: this consumes points; the vector is empty when the function returns.
    vector< vector<Point> > groupPointsWithinRadius(vector<Point>& points, double radius)
    {
        vector< vector<Point> > clusters;

        vector<Point>::iterator i;
        for(i = points.begin(); i != points.end();)
        {
            vector<Point> subCluster;
            subCluster.push_back(*i);

            // i is always the first element, so erasing a later element j
            // never invalidates it.
            vector<Point>::iterator j;
            for(j = points.begin(); j != points.end(); )
            {
                if(j != i && euclideanDist(*i, *j) < radius)
                {
                    subCluster.push_back(*j);
                    j = points.erase(j);
                }
                else
                {
                    ++j;
                }
            }

            // Ignore isolated vertices.
            if(subCluster.size() > 1)
            {
                clusters.push_back(subCluster);
            }

            i = points.erase(i);
        }

        return clusters;
    }

    // Returns the mean position of the cluster, rounded to the nearest pixel.
    Point computeClusterAverage(const vector<Point>& cluster)
    {
        Point2d sum;

        vector<Point>::const_iterator point;
        for(point = cluster.begin(); point != cluster.end(); ++point)
        {
            sum.x += point->x;
            sum.y += point->y;
        }

        sum.x /= (double)cluster.size();
        sum.y /= (double)cluster.size();

        return Point(cvRound(sum.x), cvRound(sum.y));
    }

    void printPointVector(const vector<Point>& points)
    {
        vector<Point>::const_iterator point;
        for(point = points.begin(); point != points.end(); ++point)
        {
            cout << "(" << point->x << ", " << point->y << ")";

            if(point + 1 != points.end())
            {
                cout << ", ";
            }
        }

        cout << endl;
    }

I fixed a few bugs in my previous implementation and cleaned the code up a bit. I also tested it with various resize factors, and it seemed to perform quite well. However, once I got down to about a quarter scale it started to have trouble properly detecting triangles, so this might not work well for extremely small crosses.

Also, the moments function was returning (-NaN, -NaN) locations for some valid clusters. This looked like a bug in moments, although a zero m00 for a cluster that encloses no area would produce the same result, since the centroid divides by m00. The code above averages the cluster points instead, and I believe the accuracy is a good bit improved. It may need a few more tweaks, but overall I think it should be a good starting point for you.

I think my triangle detection would work better if the black border around the triangles were a bit thicker/sharper, and if there were fewer shadows on the triangles themselves.
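One likely source of such NaN centroids is a zero-area point set: contour moments are computed with Green's formula, so points that coincide or lie on a line give m00 = 0, and m10 / m00 is then undefined. The sketch below reproduces that computation in plain C++ and falls back to the plain average in the degenerate case. `Pt` and `safeCentroid` are hypothetical names for illustration, and this is not how OpenCV implements moments internally.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Pt { double x; double y; };

// Hypothetical helper: centroid of a closed polygon from its area moments
// (shoelace / Green's formula), falling back to the mean of the points when
// the enclosed area is zero.
Pt safeCentroid(const std::vector<Pt>& contour)
{
    assert(!contour.empty());

    double m00 = 0.0, m10 = 0.0, m01 = 0.0;
    double sumX = 0.0, sumY = 0.0;
    const std::size_t n = contour.size();

    for (std::size_t i = 0; i < n; ++i)
    {
        const Pt& a = contour[i];
        const Pt& b = contour[(i + 1) % n];
        const double cross = a.x * b.y - b.x * a.y;

        m00 += cross / 2.0;                  // signed area
        m10 += (a.x + b.x) * cross / 6.0;
        m01 += (a.y + b.y) * cross / 6.0;
        sumX += a.x;
        sumY += a.y;
    }

    Pt c;
    if (m00 == 0.0)
    {
        // Degenerate case: coincident or collinear points enclose no area.
        // The exact comparison is adequate for integer pixel coordinates;
        // use a small tolerance for arbitrary floating-point input.
        c.x = sumX / n;
        c.y = sumY / n;
    }
    else
    {
        c.x = m10 / m00;
        c.y = m01 / m00;
    }
    return c;
}
```

For a cluster of a few nearby triangle vertices, the plain average is usually what you want anyway, which is what computeClusterAverage does.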

Hope that helps!

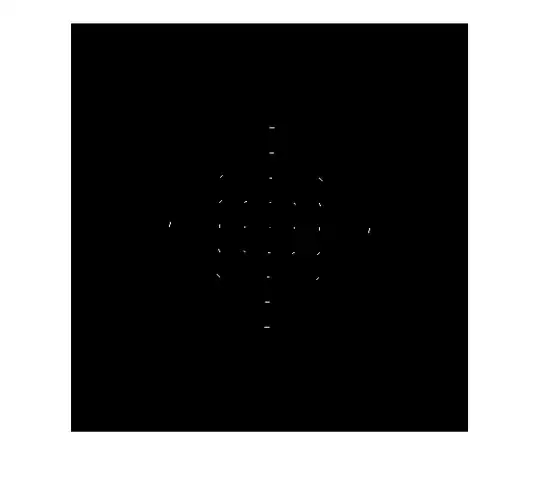

Image extracted from short video

Binary version with threshold set at 95