In general this is the task of author identification, and there are several good papers like this that may give you a lot of information. Here are my own suggestions on this topic.

1. User recognition/author identification itself

The simplest kind of text classification is classification by topic, where you look at meaningful words first of all. That is, if you want to distinguish texts about Apple the company from texts about apple the fruit, you count words like "eat", "oranges", "iPhone", etc., but you commonly ignore things like articles, word forms, part-of-speech (POS) information and so on. However, many people may talk about the same topics but use different styles of speech, and style lives in exactly the articles, word forms and other things you ignore when classifying by topic.

So the first and main thing to consider is collecting the most useful features for your algorithm. An author's style may be expressed by the frequency of words like "a" and "the", by POS information (e.g. some people tend to use the present tense, others the future), by common phrases ("I would like" vs. "I'd like" vs. "I want") and so on. Note that topic words should not be discarded completely: they still show the themes the user is interested in. However, you should treat them specially, e.g. you can pre-classify texts by topic and then rule out users who are not interested in that topic.
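To make the idea of stylistic features concrete, here is a minimal sketch that turns a text into relative frequencies of a few function words and phrases. The names `FUNCTION_WORDS`, `PHRASES` and `style_features` are hypothetical, the word lists are deliberately tiny, and POS features are left out because they would need a tagger.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny lists; a real system would use larger ones.
FUNCTION_WORDS = ["a", "an", "the", "of", "in", "to", "and", "but"]
PHRASES = ["i would like", "i'd like", "i want"]


def style_features(text):
    """Return relative frequencies of function words and common phrases."""
    lowered = text.lower()
    tokens = re.findall(r"[a-z']+", lowered)
    total = len(tokens) or 1  # avoid division by zero on empty text
    counts = Counter(tokens)

    features = {f"word:{w}": counts[w] / total for w in FUNCTION_WORDS}
    for phrase in PHRASES:
        # Naive substring count; it can also match inside longer words.
        features[f"phrase:{phrase}"] = lowered.count(phrase) / total
    return features


features = style_features("I'd like the apple and the orange, but I want a pear.")
print(features["word:the"], features["phrase:i'd like"])
```

Dividing by the number of tokens keeps long and short texts comparable, which matters once the features of many texts are merged into one vector per user.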

When you are done with feature collection, you can use a machine learning algorithm to find the best guess for the author of a text. My two best suggestions here are a probability-based approach and cosine similarity between the text vector and the user's common vector.
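The cosine similarity option can be sketched as follows. This assumes each text and each user is already represented as a dict mapping feature names to normalized frequencies; `best_author` and the sample vectors are made up for illustration.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two sparse vectors stored as dicts."""
    dot = sum(value * v.get(key, 0.0) for key, value in u.items())
    norm_u = math.sqrt(sum(x * x for x in u.values()))
    norm_v = math.sqrt(sum(x * x for x in v.values()))
    if norm_u == 0 or norm_v == 0:
        return 0.0  # an empty vector is treated as similar to nothing
    return dot / (norm_u * norm_v)


def best_author(text_vector, user_vectors):
    """Return the user whose common vector is closest to the text vector."""
    return max(
        user_vectors,
        key=lambda user: cosine_similarity(text_vector, user_vectors[user]),
    )


# Illustrative data only.
user_vectors = {
    "alice": {"the": 0.08, "a": 0.02, "i'd like": 0.01},
    "bob": {"the": 0.02, "a": 0.07, "i want": 0.02},
}
text_vector = {"the": 0.07, "a": 0.03, "i'd like": 0.005}
guess = best_author(text_vector, user_vectors)
print(guess)
```

Cosine similarity ignores vector length, so a short text can still be compared with a user vector built from a much larger amount of writing.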

2. Discriminating common words

Or, in the context above, common features. The best way I can think of to get rid of features that all people use more or less equally is to compute the entropy of each such feature:

entropy(x) = -sum(P(Ui|x) * log(P(Ui|x)))

where x is a feature, Ui is the i-th user, P(Ui|x) is the conditional probability of the i-th user given feature x, and the sum is taken over all users.

A high entropy value indicates that the distribution of users for this feature is close to uniform, so the feature is almost useless for telling users apart.
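A minimal sketch of this entropy computation is shown below. It assumes P(Ui|x) is estimated as the share of all occurrences of feature x that belong to user i; the function name and the sample counts are hypothetical. If users differ a lot in how much text they have written, raw counts would favor prolific users, so normalized frequencies may be a better input.

```python
import math


def feature_entropy(counts_by_user):
    """Entropy of the user distribution for one feature.

    counts_by_user maps a user id to how often that user used the feature.
    """
    total = sum(counts_by_user.values())
    if total == 0:
        return 0.0
    entropy = 0.0
    for count in counts_by_user.values():
        if count > 0:  # 0 * log(0) is taken as 0
            p = count / total
            entropy -= p * math.log(p)
    return entropy


# Illustrative counts only.
common = feature_entropy({"alice": 50, "bob": 50, "carol": 50})
distinctive = feature_entropy({"alice": 98, "bob": 1, "carol": 1})
print(common, distinctive)
```

The maximum possible value is log(N) for N users, reached when every user uses the feature equally often, so a threshold somewhat below log(N) is one way to decide which features to drop.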

3. Data representation

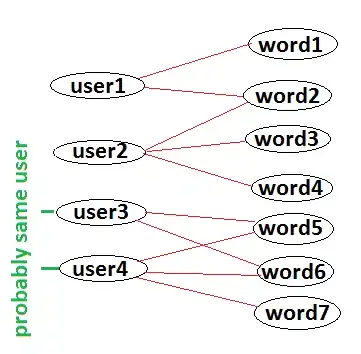

The common approach here is a user-feature matrix. That is, you build a table where rows are user ids and columns are features. E.g. cell [3][12] holds the normalized number of times user #3 used feature #12 (don't forget to normalize these frequencies by the total number of features the user has ever used!).
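As an illustration, a dense user-feature matrix with per-user normalization could be built like this. The function name and the raw counts are assumptions made for the example; users and features are identified by their integer position.

```python
def build_user_feature_matrix(raw_counts, n_features):
    """Build a dense matrix of normalized feature frequencies.

    raw_counts[i] maps feature ids to how often user i used that feature.
    Each row is divided by the user's total count, so it sums to 1.
    """
    matrix = []
    for counts in raw_counts:
        row = [0.0] * n_features
        total = sum(counts.values())
        for feature_id, count in counts.items():
            row[feature_id] = count / total if total else 0.0
        matrix.append(row)
    return matrix


# Illustrative counts for three users and four features.
raw_counts = [
    {0: 3, 2: 1},
    {1: 5},
    {0: 2, 1: 2, 3: 4},
]
matrix = build_user_feature_matrix(raw_counts, n_features=4)
print(matrix[2][3])
```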

Depending on the features you are going to use and the size of the matrix, you may want a sparse matrix implementation instead of a dense one. E.g. if you use 1000 features and around 90% of the cells for any particular user are 0, it doesn't make sense to keep all these zeros in memory, and a sparse implementation is the better option.
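The sparse idea can be sketched with plain dictionaries that store only non-zero cells. The class below is a hypothetical illustration, not a recommendation over an existing library; in practice a ready-made implementation such as the formats in `scipy.sparse` would usually be the better choice.

```python
class SparseUserFeatureMatrix:
    """User-feature matrix that keeps only non-zero cells in memory."""

    def __init__(self):
        self.rows = {}  # user_id -> {feature_id: value}

    def set(self, user_id, feature_id, value):
        if value != 0:
            self.rows.setdefault(user_id, {})[feature_id] = value
        else:
            # Storing a zero means removing the cell, if it exists.
            self.rows.get(user_id, {}).pop(feature_id, None)

    def get(self, user_id, feature_id):
        # Missing cells are implicitly zero.
        return self.rows.get(user_id, {}).get(feature_id, 0.0)

    def stored_cells(self):
        return sum(len(row) for row in self.rows.values())


m = SparseUserFeatureMatrix()
m.set(3, 12, 0.04)
m.set(3, 40, 0.0)  # zeros are never stored
print(m.get(3, 12), m.get(3, 13), m.stored_cells())
```

With 1000 features and about 90% zeros per user, this layout stores roughly 100 cells per user instead of 1000, at the cost of slower per-cell access than a dense array.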