I would like to apply OCR to some pictures of 7-segment displays on a wall. My strategy is the following:

- Convert the image to grayscale

- Blur the image to reduce false edges

- Threshold the image to a binary image

- Apply Canny edge detection

- Set a region of interest (ROI) based on a pattern given by the silhouette of the number

- Scale the ROI and template match the region

How can I set an ROI so that my program doesn't have to search the whole image for the template? I would like to base the ROI on the number of edges found, or on something more useful if someone can suggest it.
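To make the "number of edges" idea concrete, here is a minimal sketch of what I have in mind: count the edge pixels in each column of a binary edge image (such as the Canny output) and treat each run of non-empty columns as a candidate ROI. The names `column_runs` and `rois_from_edges` and the parameters `min_edge_pixels` and `min_width` are hypothetical, and the approach assumes the digits are roughly upright and separated by empty columns.

```python
import numpy as np


def column_runs(mask):
    """Return (start, stop) pairs for consecutive True runs in a 1-D mask."""
    padded = np.concatenate(([False], mask, [False])).astype(np.int8)
    changes = np.diff(padded)
    starts = np.flatnonzero(changes == 1)   # first index of each run
    stops = np.flatnonzero(changes == -1)   # one past the last index
    return list(zip(starts.tolist(), stops.tolist()))


def rois_from_edges(edges, min_edge_pixels=1, min_width=3):
    """Propose (x, y, w, h) boxes around column ranges with edge pixels."""
    edge_mask = edges > 0
    col_counts = edge_mask.sum(axis=0)
    boxes = []
    for x0, x1 in column_runs(col_counts >= min_edge_pixels):
        if x1 - x0 < min_width:
            continue  # assumed too narrow to be a digit; treated as noise
        # Tighten the box vertically to the rows that contain edges.
        rows = np.flatnonzero(edge_mask[:, x0:x1].any(axis=1))
        y0, y1 = int(rows[0]), int(rows[-1]) + 1
        boxes.append((x0, y0, x1 - x0, y1 - y0))
    return boxes
```

Each returned box could then be cropped with `edges[y:y + h, x:x + w]` and passed to template matching, so the template is only compared against a handful of regions instead of the whole image. Slanted or touching digits would probably break the empty-column assumption.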

I was looking into cascade classification with Haar features, but I don't know how to apply it to my problem.

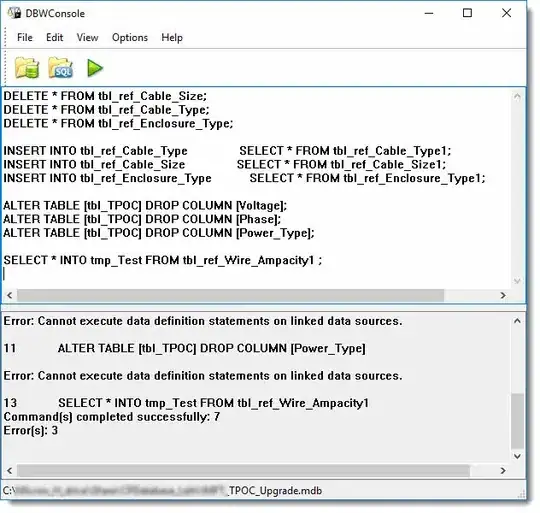

Here is an image after being pre-processed and edge detected:

Original image