We have a folder with 50 datafiles (next-gen DNA sequences) that need to be converted by running a python script on each one. The script takes 5 hours per file and it is single threaded and is largely CPU bound (the CPU core runs at 99% with minimal disk IO).

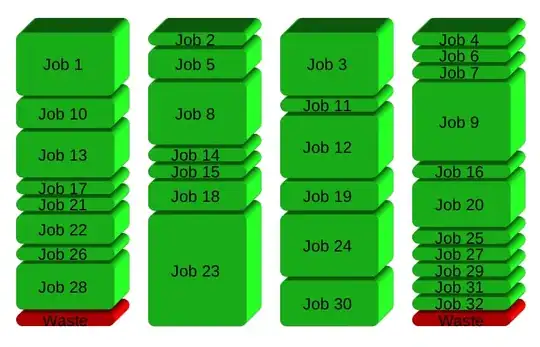

Since I have a 4 core machine, I'd like to run 4 instances of this script at once to vastly speed up the process.

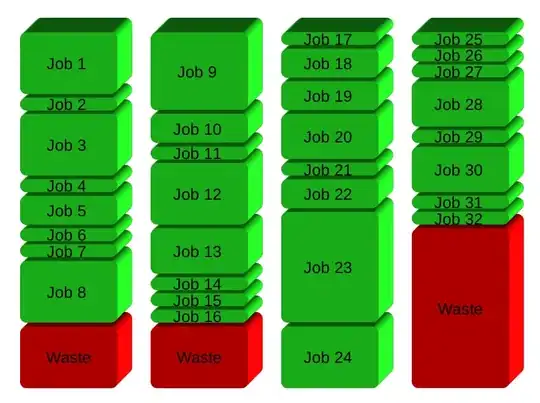

I guess I could split the data into 4 folders and in run the following bash script on each folder at the same time:

files=`ls -1 *`

for $file in $files;

do

out = $file+=".out"

python fastq_groom.py $file $out

done

But there must be a better way of running it on the one folder. We can use Bash/Python/Perl/Windows to do this.

(Sadly making the script multi threaded is beyond what we can do)

Using @phs xargs solution was the easiest way for us to solve the problem. We are however requesting the original developer implements @Björn answer. Once again thanks!