Why does the degree symbol differ between UTF-8 and Unicode?

According to http://www.utf8-chartable.de/ and http://www.fileformat.info/info/unicode/char/b0/index.htm, Unicode is B0, but UTF-8 is C2 B0. How come?

UTF-8 is a way to encode Unicode characters using a variable number of bytes (the number of bytes depends on the code point).

Code points between U+0080 and U+07FF use the following 2-byte encoding:

110xxxxx 10xxxxxx

where each x represents a bit of the code point being encoded.

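Let's consider U+00B0. In binary, 0xB0 is 10110000. The template has 11 x positions, so pad the value to 11 bits: 00010110000. The first five bits (00010) go into the first byte and the last six (110000) into the second, so one gets: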

11000010 10110000

In hex, this is 0xC2 0xB0.
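The substitution above can be sketched in a few lines of Python. The function name `encode_two_byte` is made up for this illustration; it only handles the 2-byte range and is not how any real library implements UTF-8.

```python
def encode_two_byte(code_point: int) -> bytes:
    """Encode a code point in U+0080..U+07FF with the 110xxxxx 10xxxxxx template."""
    if not 0x80 <= code_point <= 0x7FF:
        raise ValueError("code point is outside the 2-byte UTF-8 range")
    # First byte: marker bits 110 followed by the top 5 of the 11 payload bits.
    lead = 0b11000000 | (code_point >> 6)
    # Second byte: marker bits 10 followed by the low 6 payload bits.
    continuation = 0b10000000 | (code_point & 0b00111111)
    return bytes([lead, continuation])


encoded = encode_two_byte(0xB0)
print(encoded.hex(" "))  # c2 b0
```

The result matches what Python's built-in encoder produces for `"\u00b0".encode("utf-8")`.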

UTF-8 is one encoding of Unicode. UTF-16 and UTF-32 are other encodings of Unicode.

Unicode defines a numeric value for each character; the degree symbol happens to be 0xB0, or 176 in decimal. Unicode does not define how those numeric values are represented.

UTF-8 encodes the value 0xB0 as two consecutive octets (bytes) with values 0xC2 0xB0.

UTF-16 encodes the same value either as 0x00 0xB0 or as 0xB0 0x00, depending on endianness.

UTF-32 encodes it as 0x00 0x00 0x00 0xB0 or as 0xB0 0x00 0x00 0x00, again depending on endianness (I suppose other orderings are possible).
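As a quick check, here is a small Python sketch that encodes the degree symbol with the standard codecs. The explicit `-be` and `-le` codec names are used so that no byte order mark is prepended; the variable names are just for illustration.

```python
degree = "\u00b0"

# The code point itself is just a number: 0xB0, or 176 in decimal.
code_point = ord(degree)
print(code_point)

# The same code point, serialized by different encodings.
names = ("utf-8", "utf-16-be", "utf-16-le", "utf-32-be", "utf-32-le")
encodings = {name: degree.encode(name) for name in names}
for name, data in encodings.items():
    print(f"{name:10} {data.hex(' ')}")
```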

Unicode assigns the code point U+00B0 to that character, and UTF-16 and UTF-32 store that value directly. UTF-8 can't store values above 127 (0x7F) in a single byte, as the high bit of each byte is reserved to indicate that this particular character is actually a multi-byte one.

Basic 7-bit ASCII maps directly to the first 128 characters of UTF-8. Any characters whose values are above 127 decimal (7F hex) must be "escaped" by setting the high bit and adding 1 or more extra bytes to describe them.
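To see the high bit in action, a small Python sketch can print the bits of each UTF-8 byte for an ASCII letter and for the degree symbol. The helper name `utf8_bits` is made up for this example.

```python
def utf8_bits(ch: str) -> list[str]:
    """Return the UTF-8 bytes of a single character as 8-bit binary strings."""
    return [f"{byte:08b}" for byte in ch.encode("utf-8")]


for ch in ("A", "\u00b0"):
    print(f"U+{ord(ch):04X}", utf8_bits(ch))
```

The ASCII letter comes out as one byte whose high bit is 0, while every byte of the degree symbol has its high bit set to 1.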

The answers from NPE, Marc and Keith are good and above my knowledge on this topic. Still I had to read them a couple of times before I realized what this was about. Then I saw this web page that made it "click" for me.

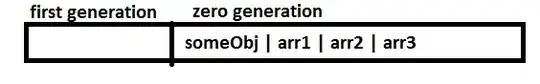

At http://www.utf8-chartable.de/, you can see the following:

Notice how it is necessary to use TWO bytes to code ONE character. Now read the accepted answer from NPE.