Say I run a simple single-threaded process like the one below:

```java
public class SirCountALot {

    public static void main(String[] args) {
        int count = 0;
        while (true) {
            count++;
        }
    }
}
```

(This is Java because that's what I'm familiar with, but I suspect the language doesn't really matter.)

I have an i7 processor (4 cores, or 8 counting Hyper-Threading) running Windows 7 64-bit, so I fired up Sysinternals Process Explorer to look at the CPU usage. As expected, the process is using around 20% of the total available CPU.
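For context, the ceiling for this program is simple arithmetic: a thread that never blocks can occupy at most one logical processor at any instant, so its maximum share of the whole machine is 100% divided by the number of logical processors (12.5% with 8, 25% with 4). A minimal sketch, assuming the percentage is measured against all logical processors; the class and method names are made up for illustration:

```java
public class ExpectedCpuShare {

    // Upper bound on the share of *total* machine CPU that one permanently
    // busy thread can consume: it runs on at most one logical processor
    // at a time.
    static double maxSingleThreadShare(int logicalProcessors) {
        return 100.0 / logicalProcessors;
    }

    public static void main(String[] args) {
        // On a 4-core i7 with Hyper-Threading enabled this is typically 8.
        int n = Runtime.getRuntime().availableProcessors();
        System.out.printf("%d logical processors -> at most %.1f%% for one busy thread%n",
                n, maxSingleThreadShare(n));
    }
}
```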

But when I toggle the option to show one graph per CPU, I see that instead of one of the 4 "cores" being used, the CPU usage is spread across all of them (screenshot below).

What I would expect instead is one core maxed out, but that only happens when I set the process's affinity to a single core.
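For reference, the pinned case can be reproduced without Task Manager: on Windows 7, the `cmd.exe` `start` command accepts an `/affinity` option that takes a hexadecimal processor mask. Java has no standard API for setting CPU affinity, so this sketch only builds and prints the command line. The class and method names are illustrative, and it assumes `SirCountALot.class` is in the current directory:

```java
import java.util.Arrays;
import java.util.List;

public class LaunchPinned {

    // Builds a cmd.exe command line that runs SirCountALot restricted to one
    // logical processor. start /affinity expects a hexadecimal mask in which
    // bit i selects logical processor i (CPU 0 -> "1", CPU 3 -> "8").
    static List<String> affinityCommand(int cpu) {
        String mask = Integer.toHexString(1 << cpu);
        return Arrays.asList("cmd", "/c", "start", "/affinity", mask,
                "java", "SirCountALot");
    }

    public static void main(String[] args) {
        // Only prints the command; typing the part after "cmd /c" into a
        // Windows command prompt starts the pinned process.
        System.out.println(String.join(" ", affinityCommand(0)));
    }
}
```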

Why is the workload split over the separate cores? Wouldn't splitting the workload over several cores mess with the caching or incur other performance penalties?
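One rough way to check whether the core-hopping costs anything is to measure throughput with and without affinity. A minimal sketch (class and method names are made up): it spins like the program above but only for a fixed time, then reports the increment rate; run it once normally and once pinned to one core and compare. Caveat: the counter lives in a register and much of each iteration is spent reading the clock, so this is a very coarse probe of cache effects and may show little difference either way.

```java
public class CountRate {

    // Increments a counter until the given number of milliseconds has
    // elapsed and returns how many increments were performed.
    static long countFor(long millis) {
        long deadline = System.nanoTime() + millis * 1_000_000L;
        long count = 0;
        while (System.nanoTime() < deadline) {
            count++;
        }
        return count;
    }

    public static void main(String[] args) {
        long millis = 5_000;
        long count = countFor(millis);
        // count / (millis * 1000) == millions of increments per second
        System.out.printf("%,d increments in %d ms (%.1f M/s)%n",
                count, millis, count / (millis * 1000.0));
    }
}
```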

Is it for the simple reason of preventing overheating of one core? Or is there some deeper reason?

Edit: I'm aware that the operating system is responsible for scheduling, but I want to know why it "bothers". Surely, from a naive viewpoint, keeping a (mostly*) single-threaded process on one core is the simpler and more efficient way to go?

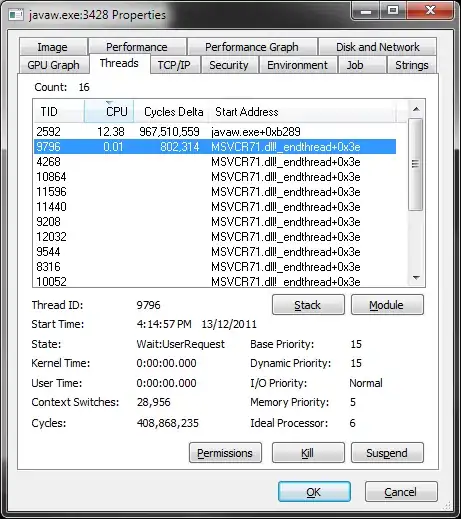

*I say mostly single-threaded because there are multiple threads here, but only 2 of them are doing anything.
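To see which threads a "single-threaded" Java program actually has, the JVM can list its own live threads. A small sketch (names are illustrative); on HotSpot this typically shows `main` plus housekeeping threads such as `Reference Handler`, `Finalizer` and `Signal Dispatcher`, while JIT-compiler and garbage-collector threads are internal to the VM and generally do not appear here, even though Process Explorer sees them. The exact set depends on JVM version and vendor.

```java
import java.util.ArrayList;
import java.util.List;

public class ListJvmThreads {

    // Names of all live threads visible through the Java API.
    static List<String> threadNames() {
        List<String> names = new ArrayList<>();
        for (Thread t : Thread.getAllStackTraces().keySet()) {
            names.add(t.getName());
        }
        return names;
    }

    public static void main(String[] args) {
        // One line per thread: name, current state and daemon flag.
        for (Thread t : Thread.getAllStackTraces().keySet()) {
            System.out.printf("%-25s state=%-13s daemon=%b%n",
                    t.getName(), t.getState(), t.isDaemon());
        }
    }
}
```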