This is not a texture-related problem like those described in other Stack Overflow questions: Rendering to texture on iOS...

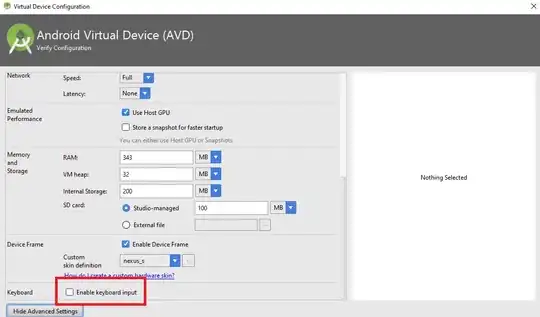

My redraw loop:

    glClearColor(0.0f, 0.0f, 0.0f, 1.0f);
    glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

    glMatrixMode(GL_MODELVIEW);
    glLoadIdentity();
    glTranslatef(0.0f, 0.0f, -300.0f);
    glMultMatrixf(transform);

    glVertexPointer(3, GL_FLOAT, MODEL_STRIDE, &model_vertices[0]);
    glEnableClientState(GL_VERTEX_ARRAY);

    glNormalPointer(GL_FLOAT, MODEL_STRIDE, &model_vertices[3]);
    glEnableClientState(GL_NORMAL_ARRAY);

    glColorPointer(4, GL_FLOAT, MODEL_STRIDE, &model_vertices[6]);
    glEnableClientState(GL_COLOR_ARRAY);

    glEnable(GL_COLOR_MATERIAL);

    glDrawArrays(GL_TRIANGLES, 0, MODEL_NUM_VERTICES);
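For reference, the pointer offsets in the loop (0, 3 and 6 floats into `model_vertices`) suggest an interleaved layout roughly like the sketch below. This is an assumption inferred from those offsets, not the actual definitions: `ModelVertex`, `ASSUMED_MODEL_STRIDE` and `model_float_count` are hypothetical names used only for illustration, and the real `MODEL_STRIDE` may be defined differently.

```c
#include <assert.h>  /* static_assert (C11) */
#include <stddef.h>  /* offsetof, size_t */

/* Hypothetical per-vertex record: 3 position, 3 normal, 4 color floats.
   Inferred from the offsets 0, 3 and 6 passed to the gl*Pointer calls. */
typedef struct {
    float position[3];
    float normal[3];
    float color[4];
} ModelVertex;

/* Assumed byte stride between consecutive vertices (illustrative only). */
enum { ASSUMED_MODEL_STRIDE = sizeof(ModelVertex) };

/* Compile-time checks that the struct matches the offsets used in the loop. */
static_assert(sizeof(ModelVertex) == 10 * sizeof(float),
              "vertex record should be 10 tightly packed floats");
static_assert(offsetof(ModelVertex, normal) == 3 * sizeof(float),
              "normal should start 3 floats in");
static_assert(offsetof(ModelVertex, color) == 6 * sizeof(float),
              "color should start 6 floats in");

/* Number of floats an interleaved array needs for vertex_count vertices. */
static size_t model_float_count(size_t vertex_count)
{
    return vertex_count * (sizeof(ModelVertex) / sizeof(float));
}
```

If the stride or the offsets did not match the real array, the normals and colors would be read from the wrong floats, so this is one thing worth ruling out.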

The result in the simulator:

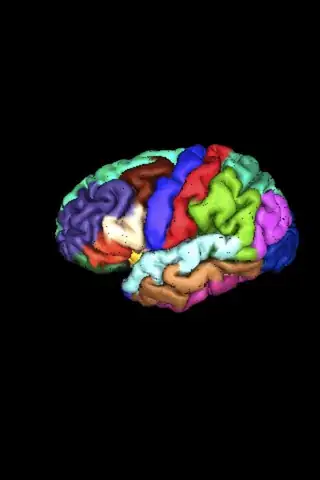

Then the result on the iPhone 4 (iOS 5, using OpenGL ES 1.1):

Notice the black dots. They appear at random positions as you rotate the object (a brain).

The mesh has 15002 vertices and about 30k triangles.

Any ideas on how to fix this jitter in the device image?