Question 1:

I have a table with a small amount of data but a large number of dynamic partitions in each daily write. With Spark 2 the write finished in only 2 minutes, but after upgrading to Spark 3 it takes 10 minutes to complete. I read that Spark 3 added a stricter transaction mechanism when writing data to Hive. Is the slowdown related to this, and how can it be solved?

Question 2:

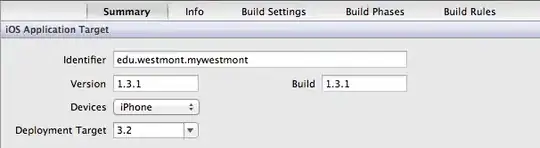

This is one of my tasks. The code is almost the same apart from some adjusted log printing, run with Spark 2 (left) and Spark 3 (right) using the same parameters. Each individual job runs significantly faster on Spark 3 than on Spark 2, but the total running time was 1.2 h on Spark 3, while Spark 2 took only 44 min. Why does this happen? What is the extra time used for? (The top part is the event timeline for Spark 2, and the bottom part is for Spark 3.)
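One way to quantify how much of the wall-clock time falls between jobs rather than inside them is to merge the job intervals from the event timeline and sum the gaps. The following is only a sketch: `idle_gaps` is a hypothetical helper, and the timestamps are made up for illustration, not taken from the screenshot. It only counts gaps between jobs, not time before the first job or after the last one.

```python
from datetime import datetime


def idle_gaps(jobs):
    """Return (busy_seconds, idle_seconds) for a list of (start, end) datetimes.

    Overlapping jobs are merged first so that concurrent jobs are not
    counted twice; idle time is the sum of the gaps between merged intervals.
    """
    intervals = sorted(jobs)
    busy = 0.0
    idle = 0.0
    cur_start, cur_end = intervals[0]
    for start, end in intervals[1:]:
        if start <= cur_end:
            # Job overlaps the current interval: extend it.
            cur_end = max(cur_end, end)
        else:
            # Gap found: close the current interval and record the idle time.
            busy += (cur_end - cur_start).total_seconds()
            idle += (start - cur_end).total_seconds()
            cur_start, cur_end = start, end
    busy += (cur_end - cur_start).total_seconds()
    return busy, idle


# Hypothetical job start/end times (illustrative values only).
fmt = "%H:%M:%S"
jobs = [
    (datetime.strptime("10:00:00", fmt), datetime.strptime("10:05:00", fmt)),
    (datetime.strptime("10:04:00", fmt), datetime.strptime("10:06:00", fmt)),
    (datetime.strptime("10:20:00", fmt), datetime.strptime("10:25:00", fmt)),
]
busy, idle = idle_gaps(jobs)
print(f"busy: {busy:.0f}s, idle between jobs: {idle:.0f}s")
```

If the idle share is much larger on Spark 3 than on Spark 2, the extra time is being spent outside the jobs themselves, which would narrow down where to look.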

I have tried setting these parameters, but none of them worked very well:

spark.sql.hive.useDynamicPartitionWriter=false

spark.hadoop.hive.txn.manager=org.apache.hadoop.hive.sql.lockmgr.NoTxnManager

This is my first time asking a question on StackOverflow. If there is anything I did wrong, please point it out. I will correct it in time, thanks!