I want to load CSVs from a Google Cloud Storage via bq_table_upload into a BigQuery table. The CSVs can have different columns. Therefore I want to use the allowjaggedrows argument (https://cloud.google.com/bigquery/docs/loading-data-cloud-storage-csv#csv-options).

As you can read in the documentation for bq_table_upload, via ... additional arguments can be passed to the underlying API.

Additional arguments passed on to the underlying API call. snake_case names are automatically converted to camelCase.

My exemplary use case:

The following two CSVs are located in a GCS folder.

test_1.csv

| date | foo | bar |

|---|---|---|

| 2021-01-01 | 1 | a |

| 2022-02-02 | 2 | b |

test_2.csv

| date | foo | bar | lorem |

|---|---|---|---|

| 2023-03-03 | 3 | c | x |

| 2024-04-04 | 4 | d | y |

I define the fields as follows.

fields <- as_bq_fields(list(

bq_field(name = "date", type = "DATE", mode = "required"),

bq_field(name = "foo", type = "INT64"),

bq_field(name = "bar", type = "STRING"),

bq_field(name = "lorem", type = "STRING")

))

I create a partitioned table as follows.

bq_table_create(

x = "my-project.my-dataset.my-table",

fields = fields,

timePartitioning = list(timepartitioning = list(type='DAY', field= 'date'))

)

This works as expected.

I am trying to load the two CSVs as follows.

bq_table_load(

x = "my-project.my-dataset.my-table",

source_uris = "gs://my-bucket/subfolder/*.csv",

source_format = "CSV",

nskip = 1,

create_disposition = "CREATE_IF_NEEDED",

write_disposition = "WRITE_TRUNCATE",

allow_jagged_rows = TRUE

)

This fails with the following error.

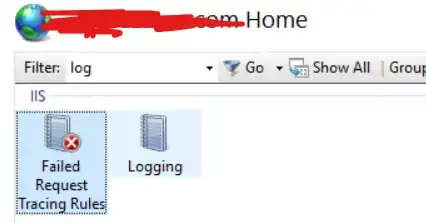

Error in `bq_job_wait()`:

! Job 'my-project.job_JM9xfaHoSqUHQgTagI_cjidNZ8qj.europe-west3' failed

✖ Error while reading data, error message: CSV processing encountered too many errors, giving up. Rows: 2; errors: 1; max bad: 0; error percent: 0 [invalid]

In the load job details I get the following error details.

When I click on Repeat load job, I can see, that the Jagged rows option is not checked.

When I select the option and run the job via browser interface, the CSVs are loaded correctly.

Therefore I assume that the argument is not passed.

Have you also observed this problem? Do I need to specify the argument in a different way in bq_table_load? Or am I misunderstanding how the function works?