I'm using an Azure Synapse Serverless SQL database.

I have a first copy activity that uses Azure SQL tables as sources and an Azure storage gen2, where I store .parquet files as sink. From these .parquet files, I use CETAS to create External tables in my Serverless SQL DB (for the context: I'm working with several Azure SQL databases, so these external tables will allow me to write cross db queries). In other words, this Serverless SQL DB is my ODS database.

I have then a second copy activity that identifies incremental changes in the source tables (using the SYS_CHANGE_VERSION of the corresponding CHANGETABLE of the source DB). This second copy activity also outputs .parquet files.

In the end, I have 2 parquet files: 1 with the full content of the source table + 1 with the content to insert or update. External tables in a Serverless SQL DB are only metadata, so no way to do DML operations on them, so my question would be: is there a way to "merge" my 2 parquet files into 1 single one (without duplicates of course) that I could use to recreate an updated external table?

Alternatively, I see that I can choose in the copy activity sink the copy method "Upsert" and provide a KeyColumn (PK of my table), but it doesn't work, saying that: "Message=INSERT operation is not allowed for this type of table." (which looks normal as the associated sink dataset is pointing on my external table, that is read only)

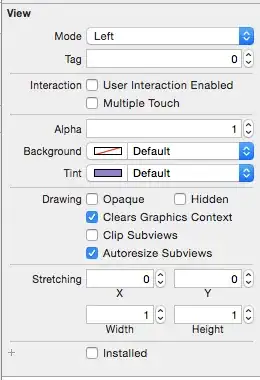

copy activity:

Any idea on how to solve this? thanks!