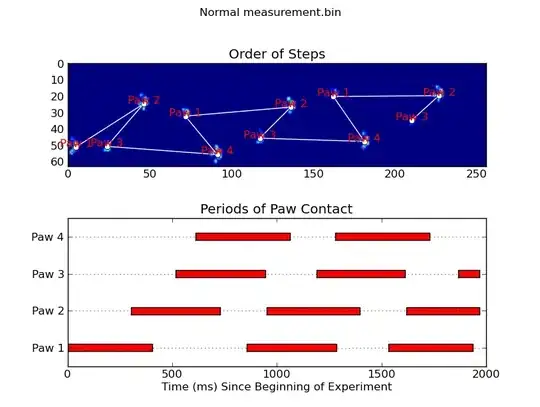

This is the PySpark DataFrame:

And this is the schema of the DataFrame. It has just two rows.


Then I try to convert it to a pandas DataFrame.

But the job hangs at stage 3. There is no result and no information about its progress. Why would this happen?

When I use `pandas_api()` instead, the same thing happens.

What could cause this? It has been bothering me all day.

Could anyone help me?

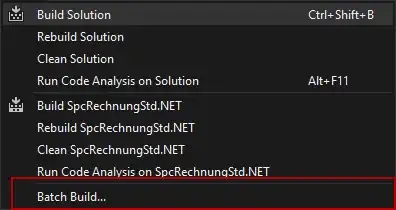

These are the package versions.