Below is my initial 'Logistic Regression_Iris_Hyperparameter Tuning' code. I added the hyperparameter tuning because logistic regression on the Iris dataset was giving me an accuracy score of 1, which I believe is wrong.

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

from sklearn.model_selection import train_test_split, GridSearchCV

from sklearn.linear_model import LogisticRegression

from sklearn.metrics import classification_report, confusion_matrix

url = "https://archive.ics.uci.edu/ml/machine-learning-databases/iris/iris.data"

data = pd.read_csv(url, names=["sepal_length", "sepal_width", "petal_length", "petal_width", "species"])

# Pass the column by keyword; a positional axis argument is rejected by pandas 2.x
X, y = data.drop(columns="species"), data["species"]

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

def evaluate_model(model):
    # Fit on the training split, then report metrics on the held-out test split
    model.fit(X_train, y_train)
    y_pred = model.predict(X_test)
    print("Classification Report:\n", classification_report(y_test, y_pred))
    print("Confusion Matrix:\n", confusion_matrix(y_test, y_pred))

logreg = LogisticRegression()

evaluate_model(logreg)

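# 'l1' is only supported by the 'liblinear' and 'saga' solvers, so the grid is split to avoid invalid combinations
common_params = {'C': [1, 10, 100, 1000], 'max_iter': [100, 200, 500, 1000], 'class_weight': ['balanced']}

param_grid = [
    {'penalty': ['l2'], 'solver': ['newton-cg', 'lbfgs', 'liblinear', 'sag', 'saga'], **common_params},
    {'penalty': ['l1'], 'solver': ['liblinear', 'saga'], **common_params},
]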

best_params = GridSearchCV(LogisticRegression(), param_grid, cv=10).fit(X_train, y_train).best_params_

best_logreg = LogisticRegression(**best_params)

evaluate_model(best_logreg)

data['predicted_species'] = best_logreg.predict(X)

species_ranges = data.groupby("predicted_species")[["petal_length", "petal_width"]].mean()

for index, row in species_ranges.iterrows():
    print(f"Predicted Species: {index}\nPredicted Petal length: {row['petal_length']}\nPredicted Petal width: {row['petal_width']}\n")

species_colors = {"Iris-setosa": "blue", "Iris-versicolor": "green", "Iris-virginica": "red"}

plt.figure(figsize=(8, 6))

sns.scatterplot(x="petal_length", y="petal_width", hue="predicted_species", data=data, palette=species_colors, legend="full").set(xlabel="Petal Length", ylabel="Petal Width", title="Scatter Plot of Iris Data Points (Best Model)")

scatter = plt.gca()

# Relabel the legend entries with short species names
for t, l in zip(scatter.legend_.texts, ["Setosa", "Versicolor", "Virginica"]):
    t.set_text(l)

plt.show()

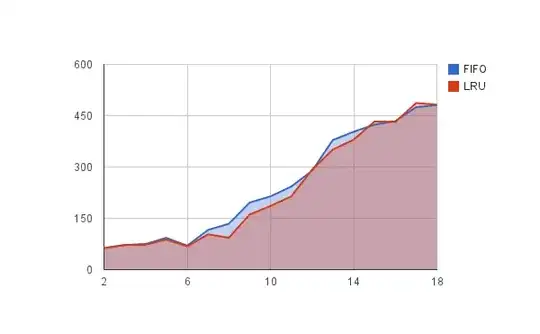
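A single 30-sample test split gives only one accuracy estimate, so a score of 1 may be specific to that split. The following is a minimal sketch, not a definitive diagnosis, of how the score could be checked across many splits with repeated stratified cross-validation. It assumes scikit-learn's bundled copy of Iris (`load_iris`) instead of the UCI CSV used above, and the names `cv` and `scores` are illustrative.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import RepeatedStratifiedKFold, cross_val_score

# Assumption: scikit-learn's bundled Iris copy stands in for the UCI CSV.
X, y = load_iris(return_X_y=True)

# 5 stratified folds repeated 10 times gives 50 held-out accuracy estimates.
cv = RepeatedStratifiedKFold(n_splits=5, n_repeats=10, random_state=42)
scores = cross_val_score(LogisticRegression(max_iter=1000), X, y, cv=cv, scoring="accuracy")

print(f"mean={scores.mean():.3f} std={scores.std():.3f} "
      f"min={scores.min():.3f} max={scores.max():.3f}")
print("folds with perfect accuracy:", int((scores == 1.0).sum()), "of", len(scores))
```

If many folds reach 1.0 while the mean stays slightly below it, the perfect score on one split is more likely a property of the small, well-separated dataset than a bug in the code.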

I tried K-fold cross-validation to check which classifier would be the best fit for the Iris dataset. Below is the code for that:

import numpy as np

import matplotlib.pyplot as plt

from sklearn.datasets import load_iris

from sklearn.model_selection import train_test_split, cross_val_score, KFold

from sklearn.linear_model import LogisticRegression

from sklearn.svm import SVC

from sklearn.tree import DecisionTreeClassifier

from sklearn.ensemble import RandomForestClassifier

from sklearn.neighbors import KNeighborsClassifier

from sklearn.naive_bayes import GaussianNB

from sklearn.ensemble import GradientBoostingClassifier

from sklearn.preprocessing import StandardScaler

from sklearn.metrics import accuracy_score, precision_score, recall_score, f1_score

# Load the Iris dataset

iris = load_iris()

X, y = iris.data, iris.target

# Split the data into training and test sets with stratified sampling

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42, stratify=y)

# Scale the features

scaler = StandardScaler()

X_train_scaled = scaler.fit_transform(X_train)

X_test_scaled = scaler.transform(X_test)

# Define the classifiers to use for evaluation

classifiers = {
    'Logistic Regression': LogisticRegression(max_iter=1000),
    'Support Vector Machine': SVC(),
    'Decision Tree': DecisionTreeClassifier(),
    'Random Forest': RandomForestClassifier(),
    'K-Nearest Neighbors': KNeighborsClassifier(),
    'Naive Bayes': GaussianNB(),
    'Gradient Boosting': GradientBoostingClassifier()
}

score_names = ['accuracy', 'precision_weighted', 'recall_weighted', 'f1_weighted']

def evaluate_model(clf_name, clf):
    # Mean 10-fold cross-validation score on the training split, one value per metric
    cv = KFold(n_splits=10, shuffle=True, random_state=42)
    train_scores = [cross_val_score(clf, X_train_scaled, y_train, cv=cv, scoring=score).mean() for score in score_names]
    # Refit on the full training split and predict the held-out test set once
    clf.fit(X_train_scaled, y_train)
    y_pred = clf.predict(X_test_scaled)
    test_scores = [accuracy_score(y_test, y_pred),
                   precision_score(y_test, y_pred, average='weighted'),
                   recall_score(y_test, y_pred, average='weighted'),
                   f1_score(y_test, y_pred, average='weighted')]
    return train_scores, test_scores

train_scores = []

test_scores = []

for clf_name, clf in classifiers.items():
    train_score, test_score = evaluate_model(clf_name, clf)
    train_scores.append(train_score)
    test_scores.append(test_score)

train_scores = np.array(train_scores)

test_scores = np.array(test_scores)

x_values = np.arange(len(classifiers))

score_labels = ['Accuracy', 'Precision', 'Recall', 'F1 Score']

fig, axes = plt.subplots(4, 1, figsize=(12, 16))

for ax, score_type in zip(axes, range(4)):
    ax.plot(x_values, train_scores[:, score_type], marker='o', label='Train')
    ax.plot(x_values, test_scores[:, score_type], marker='o', linestyle='dashed', label='Test')
    ax.set_ylabel(score_labels[score_type])
    ax.set_title(f'{score_labels[score_type]} Performance of Classifiers on Iris Dataset')
    ax.set_xticks(x_values)
    ax.set_xticklabels(list(classifiers.keys()), rotation=45, ha='right')
    ax.legend()

plt.tight_layout()

plt.show()
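In the comparison above, the scaler is fitted on the whole training split before cross-validation, so every validation fold has already contributed to the scaling statistics. A minimal sketch of one alternative, under stated assumptions rather than a definitive fix: wrap the scaler and a single classifier in a pipeline so the scaler is refit inside each fold, and collect all four metrics with one `cross_validate` call. The names `pipe`, `cv_means`, and `test_accuracy` are illustrative and not part of the original code; the `KFold` settings are copied from above.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold, cross_validate, train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

# The pipeline refits StandardScaler on the training part of every fold,
# so validation folds never influence the scaling statistics.
pipe = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))

scoring = ["accuracy", "precision_weighted", "recall_weighted", "f1_weighted"]
cv = KFold(n_splits=10, shuffle=True, random_state=42)

# One call evaluates all four metrics on the same folds.
results = cross_validate(pipe, X_train, y_train, cv=cv, scoring=scoring)
cv_means = {name: results[f"test_{name}"].mean() for name in scoring}
print("Cross-validation means:", cv_means)

# Final check on the untouched test split.
pipe.fit(X_train, y_train)
test_accuracy = pipe.score(X_test, y_test)
print("Test accuracy:", test_accuracy)
```

The same pipeline pattern could be applied to each entry of the `classifiers` dictionary by swapping the final estimator.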

Where am I going wrong, and how should I fix this? I have been trying to fix this issue for a long time. What should I do? My manager is not helping.

P.S.: I have shortened the code as much as possible, which might have changed some of the output, but my overall issue is the same.