I created a table with a location such as:

wasb://<container>@<storageaccount>.blob.core.windows.net/foldername

We have since updated access to our storage accounts to use abfss.

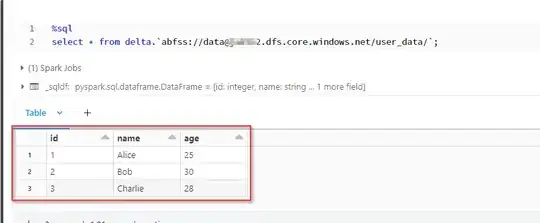

I am trying to execute the following command:

alter table mydatabase.mytable

set location 'abfss://<container>@<storageaccount>.dfs.core.windows.net/foldername'

I am getting the error:

Failure to initialize configuration for storage account <storageaccount>.dfs.core.windows.net: Invalid configuration value detected for fs.azure.account.keyInvalid configuration value detected for fs.azure.account.key

On the cluster, I changed this Spark config entry:

spark.hadoop.fs.azure.account.key.<storageaccount>.blob.core.windows.net {{secrets/<project>/<storageaccount>}}

to this:

spark.hadoop.fs.azure.account.key.<storageaccount>.dfs.core.windows.net {{secrets/<project>/<storageaccount>}}
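
For reference, here is a minimal Python sketch of how the old and new locations relate, and of which cluster config key each location would be expected to match. The helper names `wasb_to_abfss` and `account_key_conf`, and the sample names `mycontainer` and `myaccount`, are hypothetical and only for illustration. The sketch does plain string handling and does not talk to Spark or Azure.

```python
from urllib.parse import urlsplit

BLOB_SUFFIX = ".blob.core.windows.net"
DFS_SUFFIX = ".dfs.core.windows.net"


def wasb_to_abfss(location: str) -> str:
    """Rewrite a wasb:// or wasbs:// location as the matching abfss:// location."""
    parts = urlsplit(location.strip())  # strip() drops stray leading/trailing spaces
    if parts.scheme not in ("wasb", "wasbs"):
        raise ValueError(f"not a wasb/wasbs location: {location!r}")
    # netloc has the form <container>@<storageaccount>.blob.core.windows.net
    container, _, host = parts.netloc.partition("@")
    if not container or not host.endswith(BLOB_SUFFIX):
        raise ValueError(f"unexpected authority in location: {location!r}")
    account = host[: -len(BLOB_SUFFIX)]
    return f"abfss://{container}@{account}{DFS_SUFFIX}{parts.path}"


def account_key_conf(location: str) -> str:
    """Return the cluster Spark config key that holds the account key for a location."""
    host = urlsplit(location.strip()).netloc.partition("@")[2]
    return f"spark.hadoop.fs.azure.account.key.{host}"


old = "wasb://mycontainer@myaccount.blob.core.windows.net/foldername"
new = wasb_to_abfss(old)
print(new)
print(account_key_conf(old))
print(account_key_conf(new))
```

Comparing the printed key for the new location with the entry actually set on the cluster is a quick way to check that the storage account name and the `dfs` endpoint match exactly.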