I've been trying to solve this issue for some time, and it would be great if anyone has run into a similar case. I'm building a program on AWS Lambda and need to use the scikit-learn package.

The issue is that this package is very large, so it is difficult to upload it together with my code. The workaround I found was to split the package into separate layers and upload them, attach these layers to 2 functions (I can only upload 50 MB at a time, so I have 6 layers divided between the 2 functions), then create 2 layers from these 2 functions and add them to my main function.

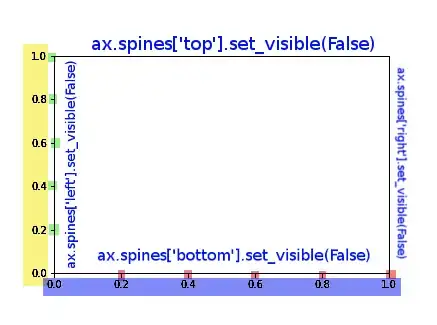

I did this, but I cannot get it to work: I can use neither the package nor the functions. This is how I implemented the imports in the main function:

    from lambdaA_function import lambdaA_handler  # from filename import method
    from lambdaB_function import lambdaB_handler  # from filename import method
    import json

    from sklearn.feature_extraction.text import TfidfVectorizer
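To narrow down which of these imports fails, a small diagnostic could report where each module would be loaded from. This is only a sketch: `find_importable` is a hypothetical helper name, and it assumes the layers are unpacked under `/opt` with Python packages in `/opt/python`, which is where the Lambda Python runtime looks for layer content.

```python
import importlib.util
import sys


def find_importable(module_names):
    """Map each module name to the file it would load from, or None if not found."""
    report = {}
    for name in module_names:
        try:
            # find_spec only locates a top-level module; it does not import it
            spec = importlib.util.find_spec(name)
        except (ImportError, ValueError):
            spec = None
        report[name] = spec.origin if spec is not None else None
    return report


def lambda_handler(event, context):
    # Hypothetical diagnostic handler: returns the search path and the
    # resolved location of each module the main function tries to import.
    return {
        "sys_path": list(sys.path),
        "modules": find_importable(
            ["json", "sklearn", "lambdaA_function", "lambdaB_function"]
        ),
    }
```

Any module that maps to `None` is not visible on `sys.path`, which would point at the layer's folder layout rather than at the import statements themselves.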

If anyone has another workaround, I would be happy to hear it.