I have an existing case:

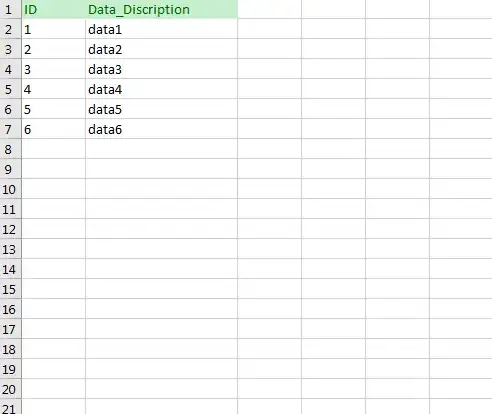

The full data set is read daily from multiple Hive tables and is processed/transformed (join, aggregation, filter, etc.) as specified in SQL queries.

These SQL queries are defined in a series of YAML files; let's say there are 3 YAML files.

Each result is saved to a temp view, and each subsequent SQL query uses the previously generated temp view, until the last SQL query from the last YAML file is executed.

The final result is written to a Hive table named in the format `hive_table_name_epoch`.
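The flow described above could be sketched roughly as follows. This is only an illustration, not the actual job: `run_pipeline`, the `steps` list of `(view_name, sql_text)` pairs, and the `base_table` default are hypothetical names, and the YAML files are assumed to be already parsed into `steps` because their layout is not shown here. `spark` is expected to be a `pyspark.sql.SparkSession`.

```python
import time


def run_pipeline(spark, steps, base_table="hive_table_name"):
    """Run SQL steps in order; each result becomes a temp view for the next.

    spark      -- expected to be a pyspark.sql.SparkSession
    steps      -- list of (view_name, sql_text) pairs, assumed to be loaded
                  from the YAML files beforehand (hypothetical structure)
    base_table -- prefix of the target table name (hypothetical default)
    """
    if not steps:
        raise ValueError("at least one SQL step is required")

    result = None
    for view_name, sql_text in steps:
        # A later query can reference any view registered by an earlier step.
        result = spark.sql(sql_text)
        result.createOrReplaceTempView(view_name)

    # Full rewrite into a brand-new table per run: <base_table>_<epoch seconds>.
    target = f"{base_table}_{int(time.time())}"
    result.write.mode("overwrite").saveAsTable(target)
    return target
```

Every run recomputes everything and writes a fresh table, which is the behaviour the rest of the question compares against.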

Data Volume: ~400,000,000 (400 Million)

Data Size: ~400 GiB

Execution Time: ~25 min

New use case: I am trying to integrate an open table format (Delta Lake or Apache Hudi). The aim is to maintain only one Hive table instead of creating a new Hive table each day, and to avoid rewriting the entire data set every day by writing only the required updates, new inserts, and deletes.
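For reference, "writing only the required updates, new inserts, and deletes" into a single Delta table would typically go through a `MERGE INTO` statement. The helper below is a minimal sketch that only builds such a statement as a string; `build_merge_sql`, the `op` column with `'D'` marking deletes, and all table and column names are assumptions for illustration and are not taken from the actual job. Hudi has its own upsert path, which is not shown here.

```python
def build_merge_sql(target, source_view, key_cols, value_cols, op_col="op"):
    """Build a MERGE INTO statement that applies a change set to `target`.

    The source view is assumed to hold one row per changed key, with an
    operation column (`op_col`) whose value 'D' marks a delete.
    """
    on_clause = " AND ".join(f"t.{c} = s.{c}" for c in key_cols)
    set_clause = ", ".join(f"t.{c} = s.{c}" for c in value_cols)
    all_cols = list(key_cols) + list(value_cols)
    insert_cols = ", ".join(all_cols)
    insert_vals = ", ".join(f"s.{c}" for c in all_cols)
    return (
        f"MERGE INTO {target} AS t\n"
        f"USING {source_view} AS s\n"
        f"ON {on_clause}\n"
        f"WHEN MATCHED AND s.{op_col} = 'D' THEN DELETE\n"
        f"WHEN MATCHED THEN UPDATE SET {set_clause}\n"
        f"WHEN NOT MATCHED AND s.{op_col} <> 'D' THEN "
        f"INSERT ({insert_cols}) VALUES ({insert_vals})"
    )


# Example with hypothetical names; the string would be passed to spark.sql(...).
merge_sql = build_merge_sql("final_table", "changes", ["id"], ["amount", "status"])
```

A merge like this has to locate and rewrite the data files that contain matching keys, so its cost depends heavily on how many rows actually change per run.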

Case 1: Using Delta Lake

Data Volume: ~400,000,000 (400 Million)

Data Size: ~400 GiB

Execution Time: ~ 1.10 hr

Case 2: Using Apache Hudi

Data Volume: ~400,000,000 (400 Million)

Data Size: ~400 GiB

Execution Time: ~ 40 min

Question: The older approach of processing and writing the entire data set daily still performs far better than using an open table format.

Is it really feasible to integrate the existing case with an incremental approach?

Maybe if we could somehow turn our data into CDC (change data capture), it would make sense.
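One possible way to get a CDC-like change set without a real CDC source is to diff today's result against the previous snapshot by key. The sketch below shows the idea on small in-memory dictionaries purely for illustration; `row_hash`, `diff_snapshots`, and the `I`/`U`/`D` markers are hypothetical names, and on Spark the same comparison would more likely be a full outer join on the key plus a row-hash check.

```python
import hashlib
import json


def row_hash(row):
    """Stable hash of a row dict, used to detect changed values."""
    payload = json.dumps(row, sort_keys=True, default=str)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def diff_snapshots(previous, current):
    """Compare two snapshots keyed by primary key.

    previous, current -- dicts mapping key -> row dict
    Returns a list of (op, key, row) with op in {"I", "U", "D"}.
    """
    changes = []
    for key, row in current.items():
        if key not in previous:
            changes.append(("I", key, row))      # new key: insert
        elif row_hash(row) != row_hash(previous[key]):
            changes.append(("U", key, row))      # same key, new values: update
    for key, row in previous.items():
        if key not in current:
            changes.append(("D", key, row))      # key disappeared: delete
    return changes


# Tiny usage example with made-up rows.
yesterday = {1: {"id": 1, "amt": 10}, 2: {"id": 2, "amt": 20}, 3: {"id": 3, "amt": 30}}
today = {1: {"id": 1, "amt": 10}, 2: {"id": 2, "amt": 25}, 4: {"id": 4, "amt": 40}}
changes = diff_snapshots(yesterday, today)
```

Note that a diff like this still reads and transforms the full data every day; only the write shrinks to the changed rows.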