Let's say you have measured a bit b ∈ {0, 1}, and you know that the measurement is wrong with probability p ∈ [0, 1], i.e. it is correct with probability 1-p. How much information, on average, is contained in one such bit?

Is it simply (1 bit) * (1-p) = (1-p) bits? (This guess turned out to be wrong.)

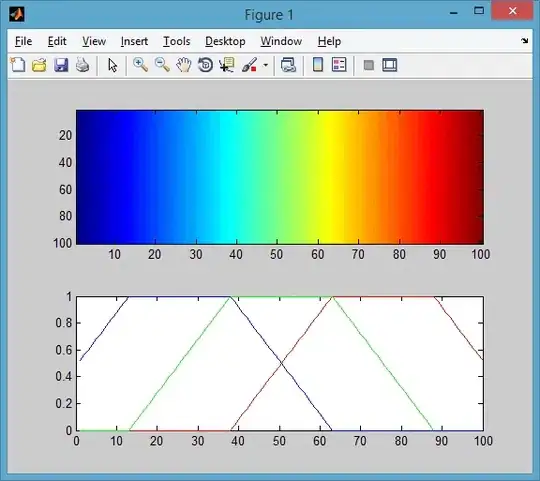

I think you can find the result by modelling this as a binary symmetric channel: the correct bit value is sent, and the received value is flipped with probability p. How do you calculate the amount of information transferred on average?
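For reference, here is a minimal sketch of the channel view. It assumes the true bit is 0 or 1 with equal probability, which the question does not state. Under that assumption the average information per received bit is the mutual information between sent and received bit, 1 - H2(p), where H2 is the binary entropy function. The function names `binary_entropy` and `bsc_information` are made up for illustration.

```python
import math


def binary_entropy(p: float) -> float:
    """Binary entropy H2(p) in bits, using the convention 0 * log2(0) = 0."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def bsc_information(p: float) -> float:
    """Mutual information in bits between the sent and received bit of a
    binary symmetric channel with flip probability p.

    Assumption: the sent bit is uniformly distributed over {0, 1}.
    """
    return 1.0 - binary_entropy(p)


# Compare the naive guess (1 - p) with the mutual information.
for p in (0.0, 0.1, 0.25, 0.5, 0.9, 1.0):
    print(f"p={p}: naive guess={1 - p:.3f} bits, "
          f"mutual information={bsc_information(p):.3f} bits")
```

Two things stand out compared with the (1-p) guess: at p = 0.5 the received bit carries no information at all, and at p = 1 it carries a full bit again, because a measurement that is always wrong can simply be inverted.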