I have a remote Spark standalone cluster running in two Docker containers, spark-master and spark-worker. I am trying to run a simple Python program to check connectivity to Spark, but I always get the following warning:

WARN TaskSchedulerImpl: Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient resources

Here is the code:

    from pyspark.sql import SparkSession

    if __name__ == '__main__':
        # Connect to the remote standalone master with a minimal resource request
        spark = SparkSession.builder.appName('test') \
            .master('spark://192.168.1.169:7077') \
            .config("spark.executor.memory", "512m") \
            .config('spark.cores.max', '1') \
            .config("spark.executor.cores", "1") \
            .config("spark.executor.instances", "1") \
            .getOrCreate()

        # Build a tiny DataFrame and print it; show() is what triggers the job
        data = [("A", 1), ("B", 2), ("C", 3)]
        columns = ["Letter", "Number"]
        df = spark.createDataFrame(data, columns)
        df.show()

        spark.stop()

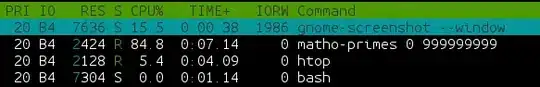

When I run this program I can see a running application on Spark GUI page. Here is the screenshot:

The cluster clearly has plenty of resources for such a simple program. I have also tried tweaking the configuration parameters, but it did not help.

I looked at the executor summary and at the master logs in the Docker containers, and it seems that executors are launched and then exit one after another, roughly every 3 seconds. Master logs:

23/08/07 11:39:16 INFO Master: Registering app test

23/08/07 11:39:16 INFO Master: Registered app test with ID app-20230807113916-0087

23/08/07 11:39:16 INFO Master: Start scheduling for app app-20230807113916-0087 with rpId: 0

23/08/07 11:39:16 INFO Master: Application app-20230807113916-0087 requested executors: Map(Profile: id = 0, executor resources: cores -> name: cores, amount: 1, script: , vendor: ,memory -> name: memory, amount: 512, script: , vendor: ,offHeap -> name: offHeap, amount: 0, script: , vendor: , task resources: cpus -> name: cpus, amount: 1.0 -> 1).

23/08/07 11:39:16 INFO Master: Start scheduling for app app-20230807113916-0087 with rpId: 0

23/08/07 11:39:16 INFO Master: Launching executor app-20230807113916-0087/0 on worker worker-20230705044801-172.26.0.3-35329

23/08/07 11:39:16 INFO Master: Start scheduling for app app-20230807113916-0087 with rpId: 0

23/08/07 11:39:19 INFO Master: Removing executor app-20230807113916-0087/0 because it is EXITED

23/08/07 11:39:19 INFO Master: Start scheduling for app app-20230807113916-0087 with rpId: 0

23/08/07 11:39:19 INFO Master: Launching executor app-20230807113916-0087/1 on worker worker-20230705044801-172.26.0.3-35329

23/08/07 11:39:19 INFO Master: Start scheduling for app app-20230807113916-0087 with rpId: 0

23/08/07 11:39:21 INFO Master: Removing executor app-20230807113916-0087/1 because it is EXITED

23/08/07 11:39:21 INFO Master: Start scheduling for app app-20230807113916-0087 with rpId: 0

23/08/07 11:39:21 INFO Master: Launching executor app-20230807113916-0087/2 on worker worker-20230705044801-172.26.0.3-35329

23/08/07 11:39:21 INFO Master: Start scheduling for app app-20230807113916-0087 with rpId: 0

23/08/07 11:39:24 INFO Master: Removing executor app-20230807113916-0087/2 because it is EXITED

23/08/07 11:39:24 INFO Master: Start scheduling for app app-20230807113916-0087 with rpId: 0

23/08/07 11:39:24 INFO Master: Launching executor app-20230807113916-0087/3 on worker worker-20230705044801-172.26.0.3-35329

23/08/07 11:39:24 INFO Master: Start scheduling for app app-20230807113916-0087 with rpId: 0

23/08/07 11:39:26 INFO Master: Removing executor app-20230807113916-0087/3 because it is EXITED

And worker logs:

23/08/07 11:39:16 INFO Worker: Asked to launch executor app-20230807113916-0087/0 for test

23/08/07 11:39:16 INFO SecurityManager: Changing view acls to: spark

23/08/07 11:39:16 INFO SecurityManager: Changing modify acls to: spark

23/08/07 11:39:16 INFO SecurityManager: Changing view acls groups to:

23/08/07 11:39:16 INFO SecurityManager: Changing modify acls groups to:

23/08/07 11:39:16 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: spark; groups with view permissions: EMPTY; users with modify permissions: spark; groups with modify permissions: EMPTY

23/08/07 11:39:16 INFO ExecutorRunner: Launch command: "/opt/bitnami/java/bin/java" "-cp" "/opt/bitnami/spark/conf/:/opt/bitnami/spark/jars/*" "-Xmx512M" "-Dspark.driver.port=57417" "-Djava.net.preferIPv6Addresses=false" "-XX:+IgnoreUnrecognizedVMOptions" "--add-opens=java.base/java.lang=ALL-UNNAMED" "--add-opens=java.base/java.lang.invoke=ALL-UNNAMED" "--add-opens=java.base/java.lang.reflect=ALL-UNNAMED" "--add-opens=java.base/java.io=ALL-UNNAMED" "--add-opens=java.base/java.net=ALL-UNNAMED" "--add-opens=java.base/java.nio=ALL-UNNAMED" "--add-opens=java.base/java.util=ALL-UNNAMED" "--add-opens=java.base/java.util.concurrent=ALL-UNNAMED" "--add-opens=java.base/java.util.concurrent.atomic=ALL-UNNAMED" "--add-opens=java.base/sun.nio.ch=ALL-UNNAMED" "--add-opens=java.base/sun.nio.cs=ALL-UNNAMED" "--add-opens=java.base/sun.security.action=ALL-UNNAMED" "--add-opens=java.base/sun.util.calendar=ALL-UNNAMED" "--add-opens=java.security.jgss/sun.security.krb5=ALL-UNNAMED" "-Djdk.reflect.useDirectMethodHandle=false" "org.apache.spark.executor.CoarseGrainedExecutorBackend" "--driver-url" "spark://CoarseGrainedScheduler@Artyom.mshome.net:57417" "--executor-id" "0" "--hostname" "172.26.0.3" "--cores" "1" "--app-id" "app-20230807113916-0087" "--worker-url" "spark://Worker@172.26.0.3:35329" "--resourceProfileId" "0"

23/08/07 11:39:19 INFO Worker: Executor app-20230807113916-0087/0 finished with state EXITED message Command exited with code 1 exitStatus 1

23/08/07 11:39:19 INFO ExternalShuffleBlockResolver: Clean up non-shuffle and non-RDD files associated with the finished executor 0

23/08/07 11:39:19 INFO ExternalShuffleBlockResolver: Executor is not registered (appId=app-20230807113916-0087, execId=0)

23/08/07 11:39:19 INFO Worker: Asked to launch executor app-20230807113916-0087/1 for test

23/08/07 11:39:19 INFO SecurityManager: Changing view acls to: spark

23/08/07 11:39:19 INFO SecurityManager: Changing modify acls to: spark

23/08/07 11:39:19 INFO SecurityManager: Changing view acls groups to:

23/08/07 11:39:19 INFO SecurityManager: Changing modify acls groups to:

23/08/07 11:39:19 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: spark; groups with view permissions: EMPTY; users with modify permissions: spark; groups with modify permissions: EMPTY

23/08/07 11:39:19 INFO ExecutorRunner: Launch command: "/opt/bitnami/java/bin/java" "-cp" "/opt/bitnami/spark/conf/:/opt/bitnami/spark/jars/*" "-Xmx512M" "-Dspark.driver.port=57417" "-Djava.net.preferIPv6Addresses=false" "-XX:+IgnoreUnrecognizedVMOptions" "--add-opens=java.base/java.lang=ALL-UNNAMED" "--add-opens=java.base/java.lang.invoke=ALL-UNNAMED" "--add-opens=java.base/java.lang.reflect=ALL-UNNAMED" "--add-opens=java.base/java.io=ALL-UNNAMED" "--add-opens=java.base/java.net=ALL-UNNAMED" "--add-opens=java.base/java.nio=ALL-UNNAMED" "--add-opens=java.base/java.util=ALL-UNNAMED" "--add-opens=java.base/java.util.concurrent=ALL-UNNAMED" "--add-opens=java.base/java.util.concurrent.atomic=ALL-UNNAMED" "--add-opens=java.base/sun.nio.ch=ALL-UNNAMED" "--add-opens=java.base/sun.nio.cs=ALL-UNNAMED" "--add-opens=java.base/sun.security.action=ALL-UNNAMED" "--add-opens=java.base/sun.util.calendar=ALL-UNNAMED" "--add-opens=java.security.jgss/sun.security.krb5=ALL-UNNAMED" "-Djdk.reflect.useDirectMethodHandle=false" "org.apache.spark.executor.CoarseGrainedExecutorBackend" "--driver-url" "spark://CoarseGrainedScheduler@Artyom.mshome.net:57417" "--executor-id" "1" "--hostname" "172.26.0.3" "--cores" "1" "--app-id" "app-20230807113916-0087" "--worker-url" "spark://Worker@172.26.0.3:35329" "--resourceProfileId" "0"

23/08/07 11:39:21 INFO Worker: Executor app-20230807113916-0087/1 finished with state EXITED message Command exited with code 1 exitStatus 1

23/08/07 11:39:21 INFO ExternalShuffleBlockResolver: Clean up non-shuffle and non-RDD files associated with the finished executor 1

23/08/07 11:39:21 INFO ExternalShuffleBlockResolver: Executor is not registered (appId=app-20230807113916-0087, execId=1)

23/08/07 11:39:21 INFO Worker: Asked to launch executor app-20230807113916-0087/2 for test

23/08/07 11:39:21 INFO SecurityManager: Changing view acls to: spark

23/08/07 11:39:21 INFO SecurityManager: Changing modify acls to: spark

23/08/07 11:39:21 INFO SecurityManager: Changing view acls groups to:

23/08/07 11:39:21 INFO SecurityManager: Changing modify acls groups to:

23/08/07 11:39:21 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: spark; groups with view permissions: EMPTY; users with modify permissions: spark; groups with modify permissions: EMPTY

23/08/07 11:39:21 INFO ExecutorRunner: Launch command: "/opt/bitnami/java/bin/java" "-cp" "/opt/bitnami/spark/conf/:/opt/bitnami/spark/jars/*" "-Xmx512M" "-Dspark.driver.port=57417" "-Djava.net.preferIPv6Addresses=false" "-XX:+IgnoreUnrecognizedVMOptions" "--add-opens=java.base/java.lang=ALL-UNNAMED" "--add-opens=java.base/java.lang.invoke=ALL-UNNAMED" "--add-opens=java.base/java.lang.reflect=ALL-UNNAMED" "--add-opens=java.base/java.io=ALL-UNNAMED" "--add-opens=java.base/java.net=ALL-UNNAMED" "--add-opens=java.base/java.nio=ALL-UNNAMED" "--add-opens=java.base/java.util=ALL-UNNAMED" "--add-opens=java.base/java.util.concurrent=ALL-UNNAMED" "--add-opens=java.base/java.util.concurrent.atomic=ALL-UNNAMED" "--add-opens=java.base/sun.nio.ch=ALL-UNNAMED" "--add-opens=java.base/sun.nio.cs=ALL-UNNAMED" "--add-opens=java.base/sun.security.action=ALL-UNNAMED" "--add-opens=java.base/sun.util.calendar=ALL-UNNAMED" "--add-opens=java.security.jgss/sun.security.krb5=ALL-UNNAMED" "-Djdk.reflect.useDirectMethodHandle=false" "org.apache.spark.executor.CoarseGrainedExecutorBackend" "--driver-url" "spark://CoarseGrainedScheduler@Artyom.mshome.net:57417" "--executor-id" "2" "--hostname" "172.26.0.3" "--cores" "1" "--app-id" "app-20230807113916-0087" "--worker-url" "spark://Worker@172.26.0.3:35329" "--resourceProfileId" "0"

However, I was able to run an example jar successfully from inside the Docker container.

How can I fix this error? I am using Spark 3.4.1 on all nodes and in the Python program.
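For reference, the launch commands in the worker logs above pass `--driver-url spark://CoarseGrainedScheduler@Artyom.mshome.net:57417`, so the executor is told to call back to my machine by host name on what looks like a randomly chosen port. Below is a minimal sketch of how the driver's advertised address and ports could be pinned instead. The property names are standard Spark configuration keys, but the helper names `driver_network_conf` and `build_session` are hypothetical, the address and port values are placeholders to be supplied by the caller, and I have not confirmed that this resolves the problem.

```python
from typing import Dict


def driver_network_conf(driver_host: str, driver_port: int,
                        block_manager_port: int) -> Dict[str, str]:
    """Build Spark properties that pin the driver's advertised address and ports.

    Hypothetical helper: the values passed in are assumptions about the
    local network, not something taken from the cluster itself.
    """
    return {
        # Address that executors use to call back to the driver.
        "spark.driver.host": driver_host,
        # Local interface the driver listens on; 0.0.0.0 means all interfaces.
        "spark.driver.bindAddress": "0.0.0.0",
        # Fixed ports instead of random ones, so they can be opened or published.
        "spark.driver.port": str(driver_port),
        "spark.blockManager.port": str(block_manager_port),
    }


def build_session(master_url: str, conf: Dict[str, str]):
    """Create a SparkSession with the given properties (requires pyspark)."""
    # Imported lazily so driver_network_conf can be used without pyspark installed.
    from pyspark.sql import SparkSession

    builder = SparkSession.builder.appName("test").master(master_url)
    for key, value in conf.items():
        builder = builder.config(key, value)
    return builder.getOrCreate()
```

With this, the session from my program would be created as `build_session('spark://192.168.1.169:7077', driver_network_conf(host, port, bm_port))`, where `host` has to be an address of the driver machine that the worker container can actually reach, and both ports have to be allowed through any firewall in between.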

Update: here is the executor log from worker/app:

Spark Executor Command: "/opt/bitnami/java/bin/java" "-cp"

"/opt/bitnami/spark/conf/:/opt/bitnami/spark/jars/*" "-Xmx1024M" "-Dspark.port.maxRetries=65000" "-Dspark.driver.port=51772" "-Djava.net.preferIPv6Addresses=false" "-XX:+IgnoreUnrecognizedVMOptions" "--add-opens=java.base/java.lang=ALL-UNNAMED" "--add-opens=java.base/java.lang.invoke=ALL-UNNAMED" "--add-opens=java.base/java.lang.reflect=ALL-UNNAMED" "--add-opens=java.base/java.io=ALL-UNNAMED" "--add-opens=java.base/java.net=ALL-UNNAMED" "--add-opens=java.base/java.nio=ALL-UNNAMED" "--add-opens=java.base/java.util=ALL-UNNAMED" "--add-opens=java.base/java.util.concurrent=ALL-UNNAMED" "--add-opens=java.base/java.util.concurrent.atomic=ALL-UNNAMED" "--add-opens=java.base/sun.nio.ch=ALL-UNNAMED" "--add-opens=java.base/sun.nio.cs=ALL-UNNAMED" "--add-opens=java.base/sun.security.action=ALL-UNNAMED" "--add-opens=java.base/sun.util.calendar=ALL-UNNAMED" "--add-opens=java.security.jgss/sun.security.krb5=ALL-UNNAMED" "-Djdk.reflect.useDirectMethodHandle=false" "org.apache.spark.executor.CoarseGrainedExecutorBackend" "--driver-url" "spark://CoarseGrainedScheduler@172.17.0.2:51772" "--executor-id" "0" "--hostname" "172.26.0.3" "--cores" "8" "--app-id" "app-20230828045524-0147" "--worker-url" "spark://Worker@172.26.0.3:40457" "--resourceProfileId" "0"

========================================

Using Spark's default log4j profile: org/apache/spark/log4j2-defaults.properties

23/08/28 04:55:25 INFO CoarseGrainedExecutorBackend: Started daemon with process name: 27005@6181476d5774

23/08/28 04:55:25 INFO SignalUtils: Registering signal handler for TERM

23/08/28 04:55:25 INFO SignalUtils: Registering signal handler for HUP

23/08/28 04:55:25 INFO SignalUtils: Registering signal handler for INT

23/08/28 04:55:25 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

23/08/28 04:55:26 INFO SecurityManager: Changing view acls to: spark,root

23/08/28 04:55:26 INFO SecurityManager: Changing modify acls to: spark,root

23/08/28 04:55:26 INFO SecurityManager: Changing view acls groups to:

23/08/28 04:55:26 INFO SecurityManager: Changing modify acls groups to:

23/08/28 04:55:26 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: spark, root; groups with view permissions: EMPTY; users with modify permissions: spark, root; groups with modify permissions: EMPTY

Exception in thread "main" java.lang.reflect.UndeclaredThrowableException

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1894)

at org.apache.spark.deploy.SparkHadoopUtil.runAsSparkUser(SparkHadoopUtil.scala:62)

at org.apache.spark.executor.CoarseGrainedExecutorBackend$.run(CoarseGrainedExecutorBackend.scala:428)

at org.apache.spark.executor.CoarseGrainedExecutorBackend$.main(CoarseGrainedExecutorBackend.scala:417)

at org.apache.spark.executor.CoarseGrainedExecutorBackend.main(CoarseGrainedExecutorBackend.scala)

Caused by: org.apache.spark.SparkException: Exception thrown in awaitResult:

at org.apache.spark.util.ThreadUtils$.awaitResult(ThreadUtils.scala:322)

at org.apache.spark.rpc.RpcTimeout.awaitResult(RpcTimeout.scala:75)

at org.apache.spark.rpc.RpcEnv.setupEndpointRefByURI(RpcEnv.scala:102)

at org.apache.spark.executor.CoarseGrainedExecutorBackend$.$anonfun$run$9(CoarseGrainedExecutorBackend.scala:448)

at scala.runtime.java8.JFunction1$mcVI$sp.apply(JFunction1$mcVI$sp.java:23)

at scala.collection.TraversableLike$WithFilter.$anonfun$foreach$1(TraversableLike.scala:985)

at scala.collection.immutable.Range.foreach(Range.scala:158)

at scala.collection.TraversableLike$WithFilter.foreach(TraversableLike.scala:984)

at org.apache.spark.executor.CoarseGrainedExecutorBackend$.$anonfun$run$7(CoarseGrainedExecutorBackend.scala:446)

at org.apache.spark.deploy.SparkHadoopUtil$$anon$1.run(SparkHadoopUtil.scala:63)

at org.apache.spark.deploy.SparkHadoopUtil$$anon$1.run(SparkHadoopUtil.scala:62)

at java.base/java.security.AccessController.doPrivileged(AccessController.java:712)

at java.base/javax.security.auth.Subject.doAs(Subject.java:439)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1878)

... 4 more

Caused by: java.io.IOException: Failed to connect to /172.17.0.2:51772

at org.apache.spark.network.client.TransportClientFactory.createClient(TransportClientFactory.java:284)

at org.apache.spark.network.client.TransportClientFactory.createClient(TransportClientFactory.java:214)

at org.apache.spark.network.client.TransportClientFactory.createClient(TransportClientFactory.java:226)

at org.apache.spark.rpc.netty.NettyRpcEnv.createClient(NettyRpcEnv.scala:204)

at org.apache.spark.rpc.netty.Outbox$$anon$1.call(Outbox.scala:202)

at org.apache.spark.rpc.netty.Outbox$$anon$1.call(Outbox.scala:198)

at java.base/java.util.concurrent.FutureTask.run(FutureTask.java:264)

at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1136)

at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:635)

at java.base/java.lang.Thread.run(Thread.java:833)

Caused by: io.netty.channel.AbstractChannel$AnnotatedConnectException: Connection timed out: /172.17.0.2:51772

Caused by: java.net.ConnectException: Connection timed out

at java.base/sun.nio.ch.Net.pollConnect(Native Method)

at java.base/sun.nio.ch.Net.pollConnectNow(Net.java:672)

at java.base/sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:946)

at io.netty.channel.socket.nio.NioSocketChannel.doFinishConnect(NioSocketChannel.java:337)

at io.netty.channel.nio.AbstractNioChannel$AbstractNioUnsafe.finishConnect(AbstractNioChannel.java:334)

at io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:776)

at io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:724)

at io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:650)

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:562)

at io.netty.util.concurrent.SingleThreadEventExecutor$4.run(SingleThreadEventExecutor.java:997)

at io.netty.util.internal.ThreadExecutorMap$2.run(ThreadExecutorMap.java:74)

at io.netty.util.concurrent.FastThreadLocalRunnable.run(FastThreadLocalRunnable.java:30)

at java.base/java.lang.Thread.run(Thread.java:833)
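The trace above ends in `Connection timed out: /172.17.0.2:51772`, meaning the executor could not open a TCP connection back to the driver address it was given. A minimal sketch of a reachability check that could be run with plain Python inside the worker container while the driver is still running; the function name `can_connect` is hypothetical, and it only tests whether a TCP connection can be opened, not anything Spark-specific.

```python
import socket


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host name and attempts a TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False
```

For the run shown in this log, the call would be `can_connect("172.17.0.2", 51772)`, using whatever driver address and port appear in the `--driver-url` argument of the current executor command. A `False` result from the worker container would be consistent with the timeout in the stack trace.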