I have installed several Generative Pre-trained Transformer (GPT) models on my local system for fine-tuning. They were downloaded during code execution, both from Python in Visual Studio Code and from the Command Prompt. The installed models include LLaMA, XLNET_base_cased, davinci, gpt2, gpt2-medium, meta-llama/Llama-2-7b-chat-hf, groovy, and more. Some of these models come from Hugging Face Transformers.

I have also installed related packages, including those listed in a requirements.txt file, sentence_transformers, chromaDB, and others. Some packages were installed with pip, for example:

pip install sentence_transformers

pip install transformers[torch]

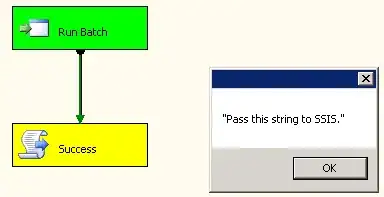

I am now facing storage constraints and need guidance on how to remove these installed GPT models, related packages, and associated files to free up space. To provide context, I have included a screenshot of a GPT model being downloaded through Python in Visual Studio Code.

I appreciate any assistance in efficiently managing and reclaiming storage space by safely uninstalling unnecessary GPT models, packages, and files. Thank you.

I have searched the python310 folder extensively but cannot find the directory where the downloaded models are stored. How can I identify where these models are stored, and how do I delete both the models and the associated packages?
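For context on where to look: models fetched through Hugging Face `from_pretrained` calls are normally not stored inside the Python installation folder. By default they go to a cache under the user's home directory (`~/.cache/huggingface/hub`, which on Windows resolves under `C:\Users\<username>`), unless the `HF_HUB_CACHE` or `HF_HOME` environment variables point elsewhere. The sketch below is only an illustration under those assumptions; the helper names `hf_cache_dir`, `dir_size_bytes`, and `report` are made up for this example, and models downloaded by other tools are stored in their own locations and are not covered here.

```python
import os
from pathlib import Path


def hf_cache_dir() -> Path:
    """Best guess at the Hugging Face Hub cache folder (assumes default conventions)."""
    if os.environ.get("HF_HUB_CACHE"):
        return Path(os.environ["HF_HUB_CACHE"])
    if os.environ.get("HF_HOME"):
        return Path(os.environ["HF_HOME"]) / "hub"
    return Path.home() / ".cache" / "huggingface" / "hub"


def dir_size_bytes(path: Path) -> int:
    """Total size of regular files under path; symlinks are skipped so shared files count once."""
    return sum(
        f.stat().st_size
        for f in path.rglob("*")
        if f.is_file() and not f.is_symlink()
    )


def report(cache: Path) -> list[tuple[str, float]]:
    """Return (folder name, size in GB) for each folder in the cache, largest first."""
    if not cache.is_dir():
        return []
    rows = [(d.name, dir_size_bytes(d) / 1e9) for d in cache.iterdir() if d.is_dir()]
    return sorted(rows, key=lambda r: r[1], reverse=True)


if __name__ == "__main__":
    cache = hf_cache_dir()
    print("Cache folder:", cache)
    for name, gb in report(cache):
        print(f"{gb:8.2f} GB  {name}")
```

Each model typically appears as a folder named like `models--gpt2`. Deleting such a folder should free the space it occupies, and the model would simply be downloaded again the next time it is requested. If the `huggingface_hub` package is installed, its `huggingface-cli scan-cache` and `huggingface-cli delete-cache` commands offer a similar view from the Command Prompt.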

Furthermore, if feasible, I would appreciate assistance in removing any unnecessary packages to optimize space utilization.