Context:

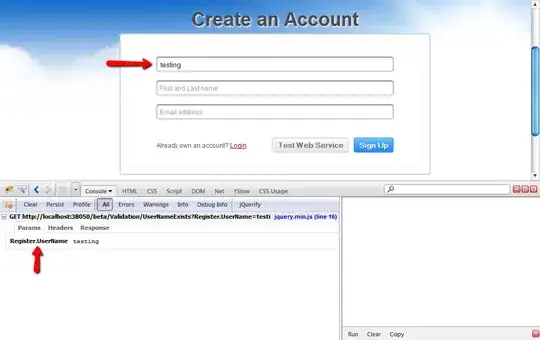

I have a large collection of videos recorded with my phone's camera, and they take up a significant amount of space. Recently, I noticed that when I uploaded a video to Google Drive and then downloaded it again with IDM (by clicking the download pop-up that IDM shows when it detects downloadable media; see the screenshot above), the downloaded video had the same visual quality but took up much less space. After some research, I found that Google re-encodes uploaded videos with H.264, and I believe I can achieve similar compression with FFmpeg.

Problem:

Despite experimenting with various FFmpeg commands, I haven't been able to replicate Google Drive's compression. Every attempt that used only the -codec:v libx264 option (i.e., libx264's defaults, CRF 23 and preset medium) produced a file larger than the original.

Raising the -crf value and choosing a faster -preset did produce smaller files, but at the cost of noticeably lower visual quality and some visible artifacts.

Google Drive's processing, on the other hand, strikes a good balance: the file size is satisfactory and visual clarity is preserved. (When zooming in on the video, I did notice some minor blurring, but it was acceptable to me.)

Note:

I'm aware that the H.265 encoder may give better results than H.264. However, to keep the comparison fair and avoid bias, I think the best approach is to first find the optimal command using the H.264 encoder, and only then replace -codec:v libx264 with -codec:v libx265. That way, the chosen command is genuinely the best FFmpeg can achieve, rather than one that merely benefits from H.265's superior efficiency from the outset.
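One caveat when swapping encoders later: libx264 and libx265 use different CRF scales. FFmpeg's H.265 encoding guide gives libx265's default as CRF 28 and describes it as roughly visually equivalent to libx264 at its default CRF 23. The sketch below assumes (a heuristic, not something the guide promises) that this 5-point offset holds across the whole range; `equivalent_crf` is a hypothetical helper name.

```python
# Default CRF of each encoder (libx264: 23, libx265: 28).
DEFAULT_CRF = {"libx264": 23, "libx265": 28}
CRF_MIN, CRF_MAX = 0, 51  # usual CRF range for 8-bit x264/x265 encodes


def equivalent_crf(crf, src_codec="libx264", dst_codec="libx265"):
    """Heuristic: shift CRF by the difference between the encoders' defaults.

    Assumes a constant offset across the whole CRF range, which is only a
    rough approximation; the result should still be checked visually.
    """
    shifted = crf + DEFAULT_CRF[dst_codec] - DEFAULT_CRF[src_codec]
    return max(CRF_MIN, min(CRF_MAX, shifted))


print(equivalent_crf(31))  # 36
```

Under this assumption, reusing the same CRF number for libx265 is not an apples-to-apples comparison; a libx264 CRF of 31 would correspond to roughly CRF 36 in libx265.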

Here's the FFmpeg command I am currently using (Windows cmd, with `^` line continuations):

    ffmpeg -hide_banner -loglevel verbose ^
    -i input.mp4 ^
    -codec:v libx264 ^
    -crf 36 -preset ultrafast ^
    -codec:a libopus -b:a 112k ^
    -movflags use_metadata_tags+faststart -map_metadata 0 ^
    output.mp4
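If the experiments are scripted, the same command can be built as an argument list and passed to Python's `subprocess`, which sidesteps cmd's `^` continuation rules entirely. This is a minimal sketch; `build_encode_cmd` is a hypothetical helper, and its defaults simply mirror the command above.

```python
import shlex


def build_encode_cmd(src, dst, codec="libx264", crf=36, preset="ultrafast",
                     audio_bitrate="112k"):
    """Return the encode command above as an argv list (no shell involved)."""
    return [
        "ffmpeg", "-hide_banner", "-loglevel", "verbose",
        "-i", src,
        "-codec:v", codec,
        "-crf", str(crf), "-preset", preset,
        "-codec:a", "libopus", "-b:a", audio_bitrate,
        "-movflags", "use_metadata_tags+faststart", "-map_metadata", "0",
        dst,
    ]


cmd = build_encode_cmd("input.mp4", "output.mp4")
print(shlex.join(cmd))  # readable one-line form, for logging
# subprocess.run(cmd, check=True)  # uncomment to actually run the encode
```

Keeping the codec, CRF and preset as parameters also makes the later switch from libx264 to libx265 a one-argument change.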

| Video file | Size (bytes) | Bit rate (bps) | Encoder | FFprobe JSON |
|---|---|---|---|---|
| Original (named 'raw 1.mp4') | 31,666,777 | 10,314,710 | !!! | link |
| Without `-crf` | 36,251,852 | 11,805,216 | Lavf60.3.100 | link |
| With `-crf` | 10,179,113 | 3,314,772 | Lavf60.3.100 | link |
| Gdrive | 6,726,189 | 2,190,342 | | link |

Those files can be found here.
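The "compression" percentage used in the update below (negative when the file grows) can be reproduced from this table, assuming it is measured relative to the original file's size. This is a minimal sketch; `compression_pct` is a hypothetical name.

```python
def compression_pct(original_bytes, output_bytes):
    """Percent reduction in size; negative means the output is larger."""
    return (original_bytes - output_bytes) / original_bytes * 100


ORIGINAL = 31_666_777  # 'raw 1.mp4', from the table above
for label, size in [("Without crf", 36_251_852),
                    ("With crf", 10_179_113),
                    ("Gdrive", 6_726_189)]:
    print(f"{label:<12} {compression_pct(ORIGINAL, size):+.2f} %")
```

This gives about -14.48 %, +67.86 % and +78.76 % respectively. Since every row has the same duration (size × 8 / bit rate ≈ 24.6 s), the bit-rate column yields the same percentages.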

Update:

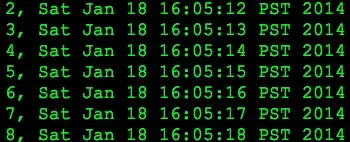

I continued my experiments with the video "raw_1.mp4" and found some interesting results that resemble those shown in this blog post (I recommend consulting this answer).

In the following figure (where a negative compression percentage means the file grew after processing), I observed that -preset veryfast gave the best trade-off between compression ratio and compression time:

In this figure, I used the H.264 encoder and compared the compression ratios of the output files produced with seven different -crf values (25, 27, 29, 31, 33, 35 and 37).
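A sweep like this can be automated. The sketch below generates one encode per preset/CRF pair and times each run. Assumptions: the output naming scheme and helper names are hypothetical, and the preset list is the standard x264/x265 list without `placebo`, which may not be the exact subset used for the figures.

```python
import subprocess
import time

CRF_VALUES = list(range(25, 38, 2))  # 25, 27, 29, 31, 33, 35, 37
# Standard x264/x265 preset names, fastest to slowest ("placebo" omitted).
PRESETS = ["ultrafast", "superfast", "veryfast", "faster", "fast",
           "medium", "slow", "slower", "veryslow"]


def sweep_jobs(src, codec="libx264"):
    """Return (output_name, argv) for every preset/CRF combination."""
    jobs = []
    for preset in PRESETS:
        for crf in CRF_VALUES:
            dst = f"{codec}_{preset}_crf{crf}.mp4"  # hypothetical naming scheme
            argv = ["ffmpeg", "-hide_banner", "-y", "-i", src,  # -y: overwrite
                    "-codec:v", codec, "-crf", str(crf), "-preset", preset,
                    "-codec:a", "libopus", "-b:a", "112k",
                    "-movflags", "use_metadata_tags+faststart",
                    "-map_metadata", "0", dst]
            jobs.append((dst, argv))
    return jobs


def run_and_time(argv):
    """Run one encode and return its wall-clock time in seconds."""
    start = time.perf_counter()
    subprocess.run(argv, check=True)
    return time.perf_counter() - start


jobs = sweep_jobs("raw_1.mp4")
print(len(jobs))  # 63 encodes (9 presets x 7 CRF values)
```

After each `run_and_time` call, the output's size (e.g. via `os.path.getsize`) can be compared with the original's to get the compression figure, and the same list works for H.265 by passing `codec="libx265"`.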

For this figure, I switched the encoder to H.265 while keeping the same CRF values as in the previous figure:

Based on these results, -preset veryfast with -crf 31 are my preferred FFmpeg settings for now, unless they turn out to be suboptimal.

As a result, the FFmpeg command I'll use is as follows:

    ffmpeg -hide_banner -loglevel verbose ^
    -i input.mp4 ^
    -codec:v libx264 ^
    -crf 31 -preset veryfast ^
    -codec:a libopus -b:a 112k ^
    -movflags use_metadata_tags+faststart -map_metadata 0 ^
    output.mp4
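To apply this command to the whole phone-video collection, a loop over a folder works. This is a minimal sketch assuming the videos sit in one folder and the results go to a separate one; `batch_jobs` and the folder layout are hypothetical. It matches `.mp4` case-insensitively and skips files that were already converted.

```python
from pathlib import Path


def batch_jobs(src_dir, dst_dir, crf=31, preset="veryfast"):
    """Build one FFmpeg argv per .mp4 in src_dir, skipping finished outputs.

    dst_dir is expected to be a different folder from src_dir.
    """
    src_dir, dst_dir = Path(src_dir), Path(dst_dir)
    jobs = []
    for src in sorted(src_dir.iterdir()):
        # Phones often write ".MP4", so compare the suffix case-insensitively.
        if not src.is_file() or src.suffix.lower() != ".mp4":
            continue
        dst = dst_dir / src.name
        if dst.exists():  # already converted on an earlier run
            continue
        jobs.append(["ffmpeg", "-hide_banner", "-i", str(src),
                     "-codec:v", "libx264", "-crf", str(crf),
                     "-preset", preset,
                     "-codec:a", "libopus", "-b:a", "112k",
                     "-movflags", "use_metadata_tags+faststart",
                     "-map_metadata", "0", str(dst)])
    return jobs
```

Each returned list can then be run with `subprocess.run(argv, check=True)`. Deleting the originals is best left until the outputs have been checked.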

Note that these choices are based solely on the compression results obtained so far; they do not take the visual quality of the output files into account.
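One way to put a number on visual quality is FFmpeg's built-in `ssim` or `psnr` filter. Each filter compares an encode against the original frame by frame and prints a summary score to the log on stderr. Both inputs must have the same resolution, and since the commands above don't scale, they should. This is a sketch, not something tested in this post; `quality_cmd` is a hypothetical helper.

```python
def quality_cmd(encoded, reference, metric="ssim"):
    """Build an FFmpeg command comparing `encoded` against `reference`.

    The first input is the filter's "main" stream and the second is the
    reference; video output is discarded with "-f null -".
    """
    if metric not in ("ssim", "psnr"):
        raise ValueError(f"unsupported metric: {metric}")
    return ["ffmpeg", "-hide_banner", "-i", encoded, "-i", reference,
            "-lavfi", metric, "-f", "null", "-"]


cmd = quality_cmd("output.mp4", "raw_1.mp4")
# subprocess.run(cmd, check=True)  # score appears in FFmpeg's stderr log
```

A higher SSIM (closer to 1) or a higher PSNR means the encode is closer to the original. Running this for each CRF/preset pair would put quality alongside the compression figures.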