I am trying to solve an image alignment problem, where images come from different sources, but should be "similar enough".

In many cases, OpenCV's findTransformECC converges, but in others it fails regardless of the termination criteria, i.e. the function throws:

The algorithm stopped before its convergence. The correlation is going to be minimized. Images may be uncorrelated or non-overlapped in function 'cv::findTransformECC'

An MRE:

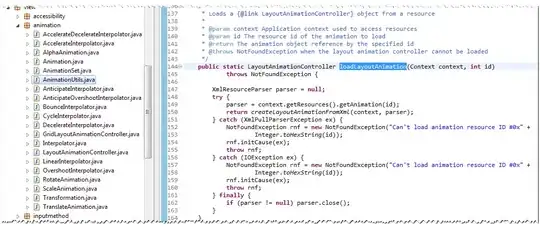

    import cv2
    import numpy as np

    img = cv2.imread(path_img, cv2.IMREAD_GRAYSCALE)
    ref = cv2.imread(path_ref, cv2.IMREAD_GRAYSCALE)

    # ... some preprocessing

    criteria = (cv2.TERM_CRITERIA_EPS | cv2.TERM_CRITERIA_COUNT, 1000000, 1e-9)

    # start from the identity transform (2x3 matrix for MOTION_EUCLIDEAN)
    warp = np.eye(2, 3, dtype=np.float32)

    # throws, or returns the identity
    _, warp = cv2.findTransformECC(ref, img, warp, cv2.MOTION_EUCLIDEAN, criteria)

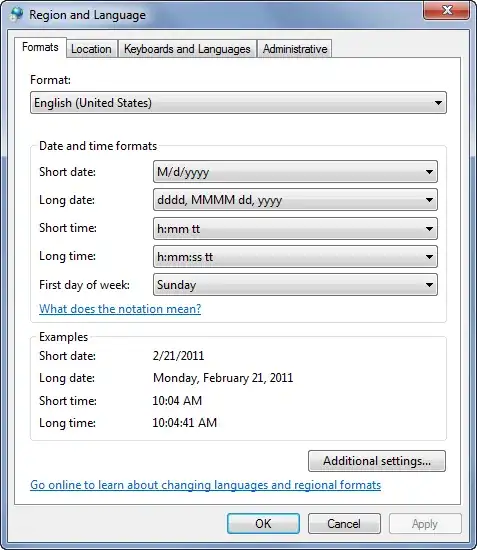

The images passed to findTransformECC:

I skimmed the paper "Parametric Image Alignment using Enhanced Correlation Coefficient Maximization" by Georgios D. Evangelidis and Emmanouil Z. Psarakis. From what I read, the algorithm performs a global photometric alignment, i.e. it works on pixel intensities directly rather than on extracted features, which intuitively tells me that the images above should work with such an algorithm.
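To make that reading concrete, here is a minimal sketch of the quantity I understand the ECC criterion to be built around: the correlation coefficient between the zero-mean, normalized intensities of the two images. This is only my interpretation of the paper, not OpenCV's implementation; the name `ecc_score` and the synthetic arrays are hypothetical and stand in for my real images.

```python
import numpy as np


def ecc_score(ref: np.ndarray, img: np.ndarray) -> float:
    """Zero-mean normalized correlation between two same-sized images."""
    # astype() returns copies, so the in-place subtraction below
    # does not modify the caller's arrays.
    a = ref.astype(np.float64).ravel()
    b = img.astype(np.float64).ravel()
    a -= a.mean()
    b -= b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    if denom == 0.0:
        return 0.0  # a constant image carries no structure to correlate
    return float(a @ b / denom)


# Synthetic data (assumption: random texture, not the images from the question).
rng = np.random.default_rng(0)
ref = rng.random((64, 64))
brighter = 2.0 * ref + 10.0          # same content, different gain and offset
shifted = np.roll(ref, 5, axis=1)    # same content, displaced by 5 pixels

print(ecc_score(ref, brighter))      # close to 1: gain and offset do not matter
print(ecc_score(ref, shifted))       # close to 0: misalignment destroys the score
```

The score ignores brightness and contrast differences but drops as soon as the content is misaligned, which is why I expected it to cope with images from different sources.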

Am I missing something from the paper that would explain why this does not work? Could that be considered a bug? Or should I use a completely different approach? A basic photometric alignment does not feel like a difficult problem to solve, but the absence of any other implementation in OpenCV makes me doubt that. Otherwise I could probably try my luck at this.

Notes:

Since my images come from different sources, the shapes are not similar enough for a feature-based alignment to work, so, AFAIK, the other algorithms implemented in OpenCV cannot help me solve this problem.

In the meantime, I have used cv::connectedComponentsWithStats, more or less successfully, to manually detect my pixel blobs. Some images, however, contain artifacts. With a global alignment these would have no effect on the resulting offset, but with my current solution they force me to match and filter the pixel blobs, which is obviously more error-prone than "give me the vector t that minimizes the difference between those images".
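For illustration, this is roughly what I have in mind by "the vector t that minimizes the difference": a brute-force sketch that assumes a pure integer translation, equal image sizes, and content that stays away from the borders. `best_translation` and `max_shift` are hypothetical names of my own, not OpenCV API, and the test images are synthetic.

```python
import numpy as np


def best_translation(ref, img, max_shift=10):
    """Return the integer (dx, dy) that, applied to img, best matches ref."""
    ref = ref.astype(np.float64)
    img = img.astype(np.float64)
    best, best_err = (0, 0), np.inf
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            # np.roll wraps around at the borders, which is only acceptable
            # when the content stays away from the image edges.
            moved = np.roll(img, (dy, dx), axis=(0, 1))
            err = np.mean((ref - moved) ** 2)
            if err < best_err:
                best, best_err = (dx, dy), err
    return best


# Synthetic example (assumption: one rectangular blob plus a small artifact).
ref = np.zeros((64, 64))
ref[20:30, 25:40] = 1.0                     # one rectangular "blob"
img = np.roll(ref, (-3, 4), axis=(0, 1))    # same blob, moved 3 px up and 4 px right
img[5:7, 5:7] = 1.0                         # artifact present in one image only

dx, dy = best_translation(ref, img)
print(dx, dy)
```

Here the search should recover dx = -4, dy = 3 despite the artifact, because the artifact only adds a small constant error to every candidate offset. The exhaustive loop is far too slow for real images and large offsets, but it shows the behaviour I am after.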