I'm trying to load some Word documents, PowerPoint presentations, and text files to train my custom LLM using LangChain.

When I run it, I get a strange error message telling me that I don't have the "tokenizers" and "taggers" packages (folders).

I've read the docs and asked the LangChain chatbot. I've tried `pip install nltk`, uninstalling it, reinstalling it without dependencies, and downloading the data with `nltk.download()`, `nltk.download("punkt")`, and `nltk.download("all")`. I also set the path manually with `nltk.data.path = ['C:\\Users\\zaesa\\AppData\\Roaming\\nltk_data']` and added all the folders there, including the `tokenizers` and `taggers` folders from the GitHub repo: https://github.com/nltk/nltk_data/tree/gh-pages/packages. I even asked on the GitHub repo. None of this has worked.

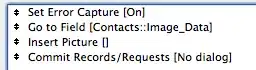
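As far as I understand NLTK's data layout, a resource such as `punkt` is looked up as `tokenizers/punkt` (either the unpacked folder or `punkt.zip`) inside each directory listed in `nltk.data.path`. One way to double-check that the folders I copied are where NLTK expects them is to inspect the layout directly. The sketch below uses only the standard library; the helper name `missing_resources` is hypothetical, and the two package names it checks (`punkt` and `averaged_perceptron_tagger`, both taken from the index listing printed further down) are assumptions for illustration.

```python
import os

# Assumption: NLTK keeps each resource at <root>/<category>/<package>,
# either as an unpacked folder or as a <package>.zip archive.
EXPECTED = {
    "tokenizers": ["punkt"],
    "taggers": ["averaged_perceptron_tagger"],
}


def missing_resources(root, expected=EXPECTED):
    """Return the "category/package" entries that are absent under root."""
    missing = []
    for category, packages in expected.items():
        for package in packages:
            folder = os.path.join(root, category, package)
            archive = folder + ".zip"
            if not (os.path.isdir(folder) or os.path.isfile(archive)):
                missing.append(f"{category}/{package}")
    return missing


if __name__ == "__main__":
    # Raw string, so the backslashes in the Windows path are not treated as escapes.
    root = r"C:\Users\zaesa\AppData\Roaming\nltk_data"
    print(missing_resources(root))
```

An empty list means both resources are present in the layout this sketch assumes.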

Here is the code of the file I'm trying to run:

```python
from nltk.tokenize import sent_tokenize
from langchain.document_loaders import UnstructuredPowerPointLoader, TextLoader, UnstructuredWordDocumentLoader
from dotenv import load_dotenv, find_dotenv
import os
import openai
import sys
import nltk

nltk.data.path = ['C:\\Users\\zaesa\\AppData\\Roaming\\nltk_data']
nltk.download('punkt', download_dir='C:\\Users\\zaesa\\AppData\\Roaming\\nltk_data')

sys.path.append('../..')

_ = load_dotenv(find_dotenv())  # read local .env file
openai.api_key = os.environ['OPENAI_API_KEY']

# Replace with the actual folder paths
folder_path_docx = "DB\\ DB VARIADO\\DOCS"
folder_path_txt = "DB\\BLOG-POSTS"
folder_path_pptx_1 = "DB\\PPT DAY JUNIO"
folder_path_pptx_2 = "DB\\DB VARIADO\\PPTX"

# Create a list to store the loaded content
loaded_content = []

# Load and process DOCX files
for file in os.listdir(folder_path_docx):
    if file.endswith(".docx"):
        file_path = os.path.join(folder_path_docx, file)
        loader = UnstructuredWordDocumentLoader(file_path)
        docx = loader.load()
        loaded_content.extend(docx)

# Load and process TXT files
for file in os.listdir(folder_path_txt):
    if file.endswith(".txt"):
        file_path = os.path.join(folder_path_txt, file)
        loader = TextLoader(file_path, encoding='utf-8')
        text = loader.load()
        loaded_content.extend(text)

# Load and process PPTX files from folder 1
for file in os.listdir(folder_path_pptx_1):
    if file.endswith(".pptx"):
        file_path = os.path.join(folder_path_pptx_1, file)
        loader = UnstructuredPowerPointLoader(file_path)
        slides_1 = loader.load()
        loaded_content.extend(slides_1)

# Load and process PPTX files from folder 2
for file in os.listdir(folder_path_pptx_2):
    if file.endswith(".pptx"):
        file_path = os.path.join(folder_path_pptx_2, file)
        loader = UnstructuredPowerPointLoader(file_path)
        slides_2 = loader.load()
        loaded_content.extend(slides_2)

# Process the loaded content as needed
# for content in loaded_content:
#     # Process the content
#     pass

# Print the content of the first document
print(loaded_content[0].page_content)

print(nltk.data.path)

# List the packages in the NLTK download index
# (Downloader.packages() returns the whole index, not only the installed packages)
index_packages = nltk.downloader.Downloader(
    download_dir='C:\\Users\\zaesa\\AppData\\Roaming\\nltk_data').packages()

# Print the list of packages
print(index_packages)

sent_tokenize("Hello. How are you? I'm well.")
```
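The four loading loops above differ only in the folder, the file extension, and the loader class, so they could be folded into a single helper. This is a sketch of that refactor under my own naming (`load_folder` is hypothetical), not a fix for the NLTK messages; the demo uses a stand-in loader so it runs without LangChain installed.

```python
import os
import tempfile
from typing import Callable, Iterable, List


def load_folder(folder: str, suffix: str, load: Callable[[str], Iterable]) -> List:
    """Collect the documents returned by `load` for every file in `folder`
    whose name ends with `suffix` (the same filter the loops above use)."""
    documents = []
    for name in sorted(os.listdir(folder)):  # sorted only for a stable order
        if name.endswith(suffix):
            documents.extend(load(os.path.join(folder, name)))
    return documents


if __name__ == "__main__":
    # Stand-in loader that returns the file name; in the real script it would be
    # something like: lambda p: UnstructuredPowerPointLoader(p).load()
    with tempfile.TemporaryDirectory() as tmp:
        for name in ("a.pptx", "b.txt", "c.pptx"):
            open(os.path.join(tmp, name), "w").close()
        docs = load_folder(tmp, ".pptx", lambda p: [os.path.basename(p)])
        print(docs)  # ['a.pptx', 'c.pptx']
```

With the real loaders, each loop would become one call such as `loaded_content += load_folder(folder_path_pptx_1, ".pptx", lambda p: UnstructuredPowerPointLoader(p).load())`.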

When running the file I get:

```
[nltk_data] in index
[nltk_data] Error loading tokenizers: Package 'tokenizers' not found
[nltk_data] in index
[nltk_data] Error loading taggers: Package 'taggers' not found in
[nltk_data] index
[nltk_data] Error loading tokenizers: Package 'tokenizers' not found
[nltk_data] in index
[nltk_data] Error loading tokenizers: Package 'tokenizers' not found
[nltk_data] in index
[nltk_data] Error loading tokenizers: Package 'tokenizers' not found
[nltk_data] in index
[nltk_data] Error loading taggers: Package 'taggers' not found in
[nltk_data] index
[nltk_data] Error loading tokenizers: Package 'tokenizers' not found
[nltk_data] in index
[nltk_data] Error loading tokenizers: Package 'tokenizers' not found
[nltk_data] in index
[nltk_data] Error loading tokenizers: Package 'tokenizers' not found
[nltk_data] in index
[nltk_data] Error loading tokenizers: Package 'tokenizers' not found
[nltk_data] in index
[nltk_data] Error loading taggers: Package 'taggers' not found in
[nltk_data] index
[nltk_data] Error loading tokenizers: Package 'tokenizers' not found
[nltk_data] in index
[nltk_data] Error loading tokenizers: Package 'tokenizers' not found
[nltk_data] in index
[nltk_data] Error loading tokenizers: Package 'tokenizers' not found
[nltk_data] in index
[nltk_data] Error loading tokenizers: Package 'tokenizers' not found
[nltk_data] in index
[nltk_data] Error loading taggers: Package 'taggers' not found in
[nltk_data] index
[nltk_data] Error loading tokenizers: Package 'tokenizers' not found
[nltk_data] in index
[nltk_data] Error loading tokenizers: Package 'tokenizers' not found
[nltk_data] in index
[nltk_data] Error loading tokenizers: Package 'tokenizers' not found
[nltk_data] in index
[nltk_data] Error loading tokenizers: Package 'tokenizers' not found
[nltk_data] in index
[nltk_data] Error loading taggers: Package 'taggers' not found in
[nltk_data] index

- HERE SOME TEXT -

['C:\\Users\\zaesa\\AppData\\Roaming\\nltk_data']

dict_values([<Package perluniprops>, <Package mwa_ppdb>, <Package punkt>, <Package rslp>, <Package porter_test>, <Package snowball_data>, <Package maxent_ne_chunker>, <Package moses_sample>, <Package bllip_wsj_no_aux>, <Package word2vec_sample>, <Package wmt15_eval>, <Package spanish_grammars>, <Package sample_grammars>, <Package large_grammars>, <Package book_grammars>, <Package basque_grammars>, <Package maxent_treebank_pos_tagger>, <Package averaged_perceptron_tagger>, <Package averaged_perceptron_tagger_ru>, <Package universal_tagset>, <Package vader_lexicon>, <Package lin_thesaurus>, <Package movie_reviews>, <Package problem_reports>, <Package pros_cons>, <Package masc_tagged>, <Package sentence_polarity>, <Package webtext>, <Package nps_chat>, <Package city_database>, <Package europarl_raw>, <Package biocreative_ppi>, <Package verbnet3>, <Package pe08>, <Package pil>, <Package crubadan>, <Package gutenberg>, <Package propbank>, <Package machado>, <Package state_union>, <Package twitter_samples>, <Package semcor>, <Package wordnet31>, <Package extended_omw>, <Package names>, <Package ptb>, <Package nombank.1.0>, <Package floresta>, <Package comtrans>, <Package knbc>, <Package mac_morpho>, <Package swadesh>, <Package rte>, <Package toolbox>, <Package jeita>, <Package product_reviews_1>, <Package omw>, <Package wordnet2022>, <Package sentiwordnet>, <Package product_reviews_2>, <Package abc>, <Package wordnet2021>, <Package udhr2>, <Package senseval>, <Package words>, <Package framenet_v15>, <Package unicode_samples>, <Package kimmo>, <Package framenet_v17>, <Package chat80>, <Package qc>, <Package inaugural>, <Package wordnet>, <Package stopwords>, <Package verbnet>, <Package shakespeare>, <Package ycoe>, <Package ieer>, <Package cess_cat>, <Package switchboard>, <Package comparative_sentences>, <Package subjectivity>, <Package udhr>, <Package pl196x>, <Package paradigms>, <Package gazetteers>, <Package timit>, <Package treebank>, <Package sinica_treebank>, <Package opinion_lexicon>, <Package ppattach>, <Package dependency_treebank>, <Package reuters>, <Package genesis>, <Package cess_esp>, <Package conll2007>, <Package nonbreaking_prefixes>, <Package dolch>, <Package smultron>, <Package alpino>, <Package wordnet_ic>, <Package brown>, <Package bcp47>, <Package panlex_swadesh>, <Package conll2000>, <Package universal_treebanks_v20>, <Package brown_tei>, <Package cmudict>, <Package omw-1.4>, <Package mte_teip5>, <Package indian>, <Package conll2002>, <Package tagsets>])
```
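Comparing the two outputs shows a pattern: the only names NLTK fails to find are `tokenizers` and `taggers`, which are the category folder names, while actual package IDs such as `punkt` and `averaged_perceptron_tagger` do appear in the index listing. The standard-library sketch below makes that comparison explicit on an excerpt of the log; `failed_names` is a hypothetical helper, and `INDEX_IDS` is only a hand-copied subset of the listing, not the full index.

```python
import re

# A few lines copied verbatim from the output above.
LOG = """\
[nltk_data] Error loading tokenizers: Package 'tokenizers' not found
[nltk_data] in index
[nltk_data] Error loading taggers: Package 'taggers' not found in
[nltk_data] index
"""

# A subset of the package IDs returned by Downloader(...).packages() above.
INDEX_IDS = {
    "punkt",
    "averaged_perceptron_tagger",
    "maxent_treebank_pos_tagger",
    "universal_tagset",
    "stopwords",
    "wordnet",
}


def failed_names(log):
    """Return the distinct names that NLTK reported as not found in its index."""
    return sorted(set(re.findall(r"Error loading (\S+): Package", log)))


names = failed_names(LOG)
print(names)                                  # ['taggers', 'tokenizers']
print([n for n in names if n in INDEX_IDS])   # []
```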

And here is what the folder structure of my nltk_data directory looks like:

Any help would be really appreciated, because this is serious work that I have committed to completing.

I'm fully available to help solve this issue. Let me know if you need anything else!