How can I solve a constrained least-squares problem using only numpy? Is there any way to incorporate constraints into numpy.linalg.lstsq(), or is there a numpy + Python workaround for constrained least squares? I know that I can do this easily with cvxpy or scipy.optimize, but this optimization will be part of a numba-JITed function, and neither of those libraries is supported by numba.

EDIT: Here is a dummy example of the problem I want to solve:

    import cvxpy
    import numpy as np

    # Design matrix: 20 samples of 3 normally distributed columns
    arr_true = np.vstack([np.random.normal(0.3, 2, size=20),
                          np.random.normal(1.4, 2, size=20),
                          np.random.normal(4.2, 2, size=20)]).transpose()
    x_true = np.array([0.3, 0.35, 0.35])  # weights are in [0, 1] and sum to 1
    y = arr_true @ x_true

    def problem():
        # Fresh draw from the same distributions, used as the matrix A
        noisy_arr = np.vstack([np.random.normal(0.3, 2, size=20),
                               np.random.normal(1.4, 2, size=20),
                               np.random.normal(4.2, 2, size=20)]).transpose()
        x = cvxpy.Variable(3)
        objective = cvxpy.Minimize(cvxpy.sum_squares(noisy_arr @ x - y))
        constraints = [0 <= x, x <= 1, cvxpy.sum(x) == 1]
        prob = cvxpy.Problem(objective, constraints)
        result = prob.solve()
        output = prob.value  # optimal objective value
        args = x.value       # optimal weights
        return output, args

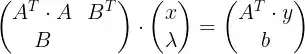

Essentially, my problem is to minimize $\|Ax - y\|_2^2$ subject to $x_1 + x_2 + x_3 = 1$ and $0 \leq x_i \leq 1$ for all $i$.
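For reference, here is a minimal numpy-only sketch of one possible approach to the problem as stated: projected gradient descent. It is not the method cvxpy uses. It relies on the observation that $x_i \geq 0$ together with $\sum_i x_i = 1$ already implies $x_i \leq 1$, so the feasible set is the probability simplex. The function names `project_to_simplex` and `simplex_lstsq`, and the parameters `n_iter` and `tol`, are hypothetical names chosen for illustration. The code uses only basic array operations with numba in mind, but compiling it under numba has not been verified here.

```python
import numpy as np


def project_to_simplex(v):
    """Euclidean projection of v onto {x : x >= 0, sum(x) == 1} (sort-based)."""
    n = v.shape[0]
    u = np.sort(v)[::-1]                  # entries sorted in descending order
    css = np.cumsum(u) - 1.0              # cumulative sums shifted by the target sum
    k = np.arange(1, n + 1)
    rho = np.nonzero(u - css / k > 0)[0][-1]   # last index satisfying the condition
    theta = css[rho] / (rho + 1.0)        # shift that makes the result sum to 1
    return np.maximum(v - theta, 0.0)


def simplex_lstsq(A, y, n_iter=5000, tol=1e-10):
    """Approximately minimize ||A x - y||^2 over the simplex (illustrative sketch)."""
    n = A.shape[1]
    AtA = A.T @ A
    Aty = A.T @ y
    # The gradient of 0.5 * ||A x - y||^2 is Lipschitz with constant
    # equal to the largest eigenvalue of A^T A (assumed nonzero here).
    step = 1.0 / np.linalg.eigvalsh(AtA)[-1]
    x = np.full(n, 1.0 / n)               # start at the centre of the simplex
    for _ in range(n_iter):
        grad = AtA @ x - Aty
        x_new = project_to_simplex(x - step * grad)
        if np.linalg.norm(x_new - x) < tol:
            x = x_new
            break
        x = x_new
    return x


# Usage on data shaped like the dummy example above
rng = np.random.default_rng(0)
A = np.column_stack([rng.normal(0.3, 2, size=20),
                     rng.normal(1.4, 2, size=20),
                     rng.normal(4.2, 2, size=20)])
x_true = np.array([0.3, 0.35, 0.35])
x_hat = simplex_lstsq(A, A @ x_true)
```

The fixed step size `1 / L` is a conservative choice that guarantees the objective does not increase; for ill-conditioned `A`, an accelerated variant or an active-set method would likely converge faster.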