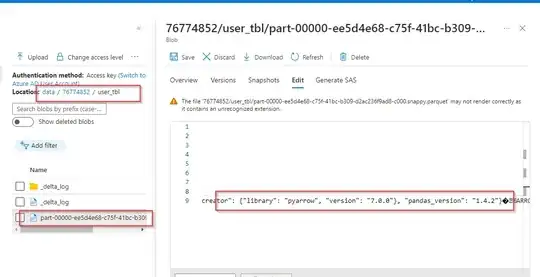

I have one table in databricks. Let's call it 'tableA'. This table is not created by me. So to get where its files are stored I checked storage-location for that table. I found it to be Azure BLOB storage. When I checked that particular directory I found there is one parquet file and folder called _delta_log. For some reason I wanted to anonymize values for some of the columns in this table. So I replaced parquet file of the delta table in the BLOB storage with parquet file with anonymized values and exact same column names and file name. Size of the original file is 12716 Bytes and this new file is 13189 Bytes. After doing this I am getting FileReadException while running select query on this table.

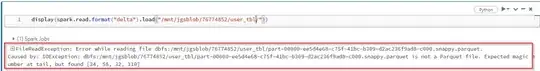

Exception says "file is not a parquet file. Expected magic number at the tail but got [47,56,65,65]".

I know I can update the table directly and anonymize values, but for some reason I cannot do that(this reason is not programmatic).

So I opened original file in notepad++ and checked 4 characters before end position of the file i.e 12713 to 12716 and they are "PAR1" which is magic number for parquet file. Then I opened new file in notepad++ and checked that 4 characters at end of the file are "PAR1" thus proving that new file is in fact parquet file. But still querying table in databricks gives above mentioned exception. So I checked characters at position 12713 to 12716 in new file I found them to be /8AA. ASCII code for this characters are 47,56,65,65 as it is indicated in exception. I have doubt that after I am manually replacing parquet file of delta table Delta table is still trying to check magic number at end position of older file not at end position of new file which would be 13185 to 12189. I think somewhere delta table is storing size of older file and trying to check at the end for Parquet magic number but for new file end position is shifted forward because increase in file size. And then it gets some different characters and throws error.

How can I let delta table know of this change? Is there anyway using which I would be able to replace the parquet file of delta table and be able to query delta table in databricks?