The problem is that a WAV file needs its header (channel count, sample width and frame rate) set to the correct audio properties before the audio data itself is written.

- Modify the send_audio function to write the WAV file header before writing the audio data.
- Call the send_audio function with the audio_queue. The audio data is then received through the callback and written to the file.
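As a minimal sketch of the header-first rule that does not need Azure, the snippet below builds a WAV file in memory with Python's wave module. The channel count, sample width and frame rate are set first, and raw PCM chunks are then appended with writeframes(). The helper names make_tone() and write_wav() and the generated test tone are illustrative assumptions, not part of the original code.

```python
import io
import math
import struct
import wave

CHANNELS = 1        # mono
SAMPLE_WIDTH = 2    # bytes per sample (16-bit)
FRAME_RATE = 16000  # frames per second


def make_tone(duration_s: float, freq_hz: float = 440.0) -> bytes:
    """Illustrative test data: a sine tone as 16-bit little-endian mono PCM."""
    n = int(duration_s * FRAME_RATE)
    samples = [int(0.3 * 32767 * math.sin(2 * math.pi * freq_hz * i / FRAME_RATE))
               for i in range(n)]
    return struct.pack(f"<{n}h", *samples)


def write_wav(chunks) -> bytes:
    """Build a WAV file in memory: header properties first, then the PCM chunks."""
    buf = io.BytesIO()
    with wave.open(buf, "wb") as wav_file:
        wav_file.setnchannels(CHANNELS)
        wav_file.setsampwidth(SAMPLE_WIDTH)
        wav_file.setframerate(FRAME_RATE)
        for chunk in chunks:
            # wave patches the header's size fields to match the data written
            wav_file.writeframes(chunk)
    return buf.getvalue()


pcm = make_tone(0.5)                             # 8000 frames = 16000 bytes
wav_bytes = write_wav([pcm[:8000], pcm[8000:]])  # delivered in two chunks
with wave.open(io.BytesIO(wav_bytes), "rb") as reader:
    print(reader.getnchannels(), reader.getsampwidth(),
          reader.getframerate(), reader.getnframes())  # 1 2 16000 8000
```

Because the header is written by wave itself, the chunks must be plain PCM frames in the declared format. The wave module also raises wave.Error if frames are written before the channel count, sample width or frame rate has been set.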

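In the code below, synthesize_callback() is a plain function rather than async def: the Speech SDK invokes event handlers synchronously from its own thread, so an async handler only creates a coroutine that is never awaited and no audio reaches the queue. Each chunk is handed to the event loop with loop.call_soon_threadsafe(), the blocking .get() call runs in a worker thread so send_audio() can keep writing, and the output format is set to raw 16 kHz, 16-bit mono PCM so the data matches the WAV header.

```python
import asyncio
import logging
import wave

import azure.cognitiveservices.speech as speechsdk

# Replace these with your Azure Speech Service credentials
SUBSCRIPTION_KEY = "YOUR_SUBSCRIPTION_KEY"
REGION = "YOUR_REGION"

# Audio properties for the WAV header; they must match the synthesis output format
CHANNELS = 1        # mono
SAMPLE_WIDTH = 2    # 2 bytes per sample (16-bit audio)
FRAME_RATE = 16000  # 16 kHz sample rate

# Create a logger
logger = logging.getLogger("audio_logger")
logger.setLevel(logging.INFO)
handler = logging.StreamHandler()
handler.setFormatter(logging.Formatter("%(asctime)s - %(levelname)s - %(message)s"))
logger.addHandler(handler)


async def send_audio(queue: asyncio.Queue) -> None:
    # Write the WAV header properties first, then the audio data as it arrives
    with wave.open("generated_audio.wav", "wb") as wav_file:
        wav_file.setnchannels(CHANNELS)
        wav_file.setsampwidth(SAMPLE_WIDTH)
        wav_file.setframerate(FRAME_RATE)
        while True:
            audio_data = await queue.get()
            if audio_data is None:
                # None marks the end of the audio: stop writing to the file
                break
            logger.info("Writing audio chunk of length %d", len(audio_data))
            wav_file.writeframes(audio_data)


async def main() -> None:
    loop = asyncio.get_running_loop()
    # Audio queue to hold audio data, created inside the running event loop
    audio_queue: asyncio.Queue = asyncio.Queue()

    # Create an instance of the SpeechConfig with your subscription key and region
    speech_config = speechsdk.SpeechConfig(subscription=SUBSCRIPTION_KEY, region=REGION)
    # Request headerless 16 kHz, 16-bit mono PCM so the chunks match the WAV header
    speech_config.set_speech_synthesis_output_format(
        speechsdk.SpeechSynthesisOutputFormat.Raw16Khz16BitMonoPcm
    )

    # Create an instance of the SpeechSynthesizer with the SpeechConfig
    synthesizer = speechsdk.SpeechSynthesizer(speech_config=speech_config)

    # Called synchronously on an SDK thread: a plain function that hands each
    # chunk to the event loop thread-safely (asyncio.Queue is not thread-safe)
    def synthesize_callback(evt: speechsdk.SpeechSynthesisEventArgs) -> None:
        audio = evt.result.audio_data
        logger.info("Audio chunk received of length %d, duration %s",
                    len(audio), evt.result.audio_duration)
        loop.call_soon_threadsafe(audio_queue.put_nowait, audio)

    # Connect the callback
    synthesizer.synthesizing.connect(synthesize_callback)

    # SSML text to be synthesized
    ssml_text = (
        "<speak version='1.0' xmlns='http://www.w3.org/2001/10/synthesis' xml:lang='en-US'>"
        "<voice name='en-US-JennyNeural'>"
        "Butta bomma, Butta bomma, nannu suttukuntiveyyy, Zindagi ke atta bommaiey. "
        "Janta kattu kuntiveyyy."
        "</voice>"
        "</speak>"
    )

    # Run send_audio() concurrently with the synthesis
    audio_task = asyncio.create_task(send_audio(audio_queue))

    # Start the synthesis; .get() blocks, so wait for it in a worker thread
    # to keep the event loop free to write chunks in the meantime
    result = await loop.run_in_executor(None, synthesizer.speak_ssml_async(ssml_text).get)
    if result.reason == speechsdk.ResultReason.Canceled:
        logger.error("Synthesis canceled: %s", result.cancellation_details.error_details)

    # Signal send_audio() to stop writing to the file, then wait for it to finish
    audio_queue.put_nowait(None)
    await audio_task


if __name__ == "__main__":
    asyncio.run(main())
```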

The speech synthesizer connects the synthesizing callback and starts synthesizing the SSML text.

The synthesize_callback() function receives the audio chunks, and the send_audio() function streams that audio data into the WAV file.
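The callback-to-file flow can be sketched without the Speech SDK. In this minimal sketch, a hypothetical fake_synthesizer() stands in for the SDK by calling a plain callback from a worker thread; the callback forwards each chunk to an asyncio.Queue with loop.call_soon_threadsafe(), and a writer coroutine streams the chunks into a WAV file kept in memory. It assumes the chunks are 16 kHz, 16-bit mono PCM.

```python
import asyncio
import io
import wave


def fake_synthesizer(on_chunk, chunks):
    """Hypothetical stand-in for the Speech SDK: delivers each chunk by calling
    on_chunk() synchronously, the way an event handler is invoked."""
    for chunk in chunks:
        on_chunk(chunk)


async def stream_to_wav(chunks) -> bytes:
    loop = asyncio.get_running_loop()
    queue: asyncio.Queue = asyncio.Queue()

    def on_chunk(chunk: bytes) -> None:
        # Runs on the worker thread: schedule put_nowait() on the event loop
        # instead of touching the (non-thread-safe) asyncio.Queue directly
        loop.call_soon_threadsafe(queue.put_nowait, chunk)

    async def writer(buf: io.BytesIO) -> None:
        # Header properties first, then the frames as they arrive
        with wave.open(buf, "wb") as wav_file:
            wav_file.setnchannels(1)
            wav_file.setsampwidth(2)
            wav_file.setframerate(16000)
            while (chunk := await queue.get()) is not None:
                wav_file.writeframes(chunk)

    buf = io.BytesIO()
    writer_task = asyncio.create_task(writer(buf))
    # The producer blocks, so run it in a worker thread; meanwhile the loop
    # keeps moving chunks from the queue into the file
    await asyncio.to_thread(fake_synthesizer, on_chunk, chunks)
    # Back on the loop thread; all chunk puts scheduled by the worker have run
    queue.put_nowait(None)  # sentinel: no more audio
    await writer_task
    return buf.getvalue()
```

For example, asyncio.run(stream_to_wav(chunks)) returns the bytes of a WAV file containing every chunk in order. The same three pieces (synchronous callback, thread-safe hand-off, queue-draining writer) map onto synthesize_callback(), the audio queue and send_audio().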

The debug statement below helps you determine whether the issue lies in the received audio data or in the WAV file creation. Add it to synthesize_callback(), right after audio = evt.result.audio_data:

```python
# Debug statement (optional): save the received audio to a file for inspection.
# "ab" appends every chunk; "wb" would overwrite the file on each call and keep
# only the last chunk. Delete the file before each run, since it is appended to.
with open("received_audio.raw", "ab") as f:
    f.write(audio)
```
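To interpret such a dump, a small helper like the hypothetical describe_received_audio() below can be used. An empty dump means the synthesizing callback never delivered audio; a dump that starts with RIFF...WAVE means the chunks already carry a WAV header (which writeframes() would then store as if it were audio); otherwise the byte count divided by 32,000 bytes per second gives the approximate duration, assuming 16 kHz, 16-bit mono PCM.

```python
# Assumed format when the dump has no header: 16 kHz, 16-bit, mono PCM
FRAME_RATE = 16000
SAMPLE_WIDTH = 2
CHANNELS = 1


def describe_received_audio(data: bytes) -> dict:
    """Hypothetical diagnostic for the bytes collected by the debug statement."""
    bytes_per_second = FRAME_RATE * SAMPLE_WIDTH * CHANNELS  # 32000
    return {
        # 0 means the synthesizing callback never delivered any audio
        "num_bytes": len(data),
        # True means the received chunks start with a RIFF/WAVE header
        "has_riff_header": data[:4] == b"RIFF" and data[8:12] == b"WAVE",
        # Rough duration, only meaningful for headerless PCM in the assumed format
        "approx_seconds": len(data) / bytes_per_second,
    }


print(describe_received_audio(b"\x00\x00" * 16000))
# {'num_bytes': 32000, 'has_riff_header': False, 'approx_seconds': 1.0}
```

Pass it the bytes read (in "rb" mode) from the dump file; if the approximate duration is close to what you expect but generated_audio.wav is empty or wrong, the problem is on the WAV-writing side.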

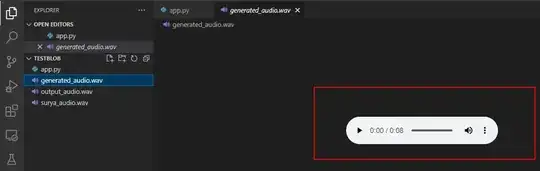

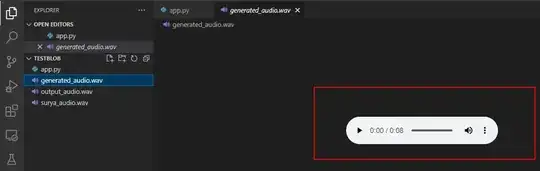

Check that the WAV file (generated_audio.wav) is generated in the directory you run the application from.
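Because generated_audio.wav is a relative path, it is created in the current working directory, which is not necessarily the folder that contains the script. A sketch of a check, using the hypothetical helper check_wav(), that confirms the file exists and reports the properties stored in its header:

```python
import os
import wave


def check_wav(path: str = "generated_audio.wav") -> dict:
    """Return the header properties of a WAV file; raise FileNotFoundError if missing."""
    if not os.path.isfile(path):
        # Relative paths resolve against the current working directory
        raise FileNotFoundError(f"{path} not found in {os.getcwd()}")
    with wave.open(path, "rb") as wav_file:
        frames = wav_file.getnframes()
        rate = wav_file.getframerate()
        return {
            "channels": wav_file.getnchannels(),
            "sample_width": wav_file.getsampwidth(),
            "frame_rate": rate,
            "frames": frames,
            "seconds": frames / rate,  # 0 frames means no audio was written
        }
```

After a successful run, check_wav() should report the channel count, sample width and frame rate that send_audio() wrote into the header, and a non-zero number of frames.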

Output: