I am playing around with divergence metrics, and I found that my own implementation and the built-in library functions give different numbers. I don't know whether I am doing something wrong or the built-in function is.

For simple analysis, I came up with the following toy example in Python:

    import math

    import matplotlib.pyplot as plt
    import numpy as np
    import pandas as pd

    # two arrays of "events"
    p = np.array([1,2,2,3,5,4,2,3,2,3,4,2,1,1,1,2,2,3,5,4,2,3,2,3,4,2,1,1,1,2,2,3,5,4,2,3,1,1,2,3,4,2,1,1,1,2,2,2,2,1,2,2,3,5,4,2,2,1,2,2,3])
    q = np.array([2,3,2,4,2,3,2,3,2,3,2,3,2,2,2,2,1,2,2,3,5,4,2,3,1,1,2,3,4,2,1,1,1,2,2,3,5,4,2,3,1,1,2,3,4,2,1,1,1,2,2,2,2,1,2,2,3,5,4])

I was told that, since I will be comparing the two PDFs and calculating divergence metrics for them, the "sample space" of each should be the same. Hence, I take every value that occurs in either p or q and use that set to calculate the PDFs.

This is my simple function to calculate the PDF of an array of data over a given sample space:

    def create_prob_dist(data: np.ndarray, sample_space: np.ndarray):
        # number of all events
        sum_of_events = sample_space.size
        # get the counts of each event via pandas.crosstab()
        data_counts = pd.crosstab(index='counts', columns=data)
        # create probabilities for each event
        prob_dist = dict()
        for i in sample_space:
            if i in data_counts:
                prob_dist[i] = (data_counts[i]['counts']) / sum_of_events
            else:
                prob_dist[i] = 0
        return prob_dist

To calculate the PDFs with the function, I do these steps:

    # get all possible discrete events from p and q
    px = np.array(list(set(p)))  # we use set here to remove duplicates
    qx = np.array(list(set(q)))  # we use set here to remove duplicates

    # create all possible discrete events of both p and q
    mx = np.concatenate([px, qx])  # concatenate first
    mx = np.array(list(set(mx)))   # remove duplicates
    mx.sort()                      # then sort

    # create true PDFs of p and q using mx
    p_pdf = create_prob_dist(p, mx)
    q_pdf = create_prob_dist(q, mx)

    # get the probability values only from the dictionary
    p_pdf = np.array(list(p_pdf.values()))
    q_pdf = np.array(list(q_pdf.values()))
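A quick sanity check that may be worth running at this point: a probability distribution should sum to 1. In `create_prob_dist` above, the counts are divided by `sample_space.size` (the number of distinct values), so the resulting arrays need not sum to 1. The sketch below is only an illustration under the assumption that relative frequencies are what is wanted; `relative_frequencies` is a hypothetical helper, and the short arrays `a` and `b` are stand-ins, not the `p` and `q` from above.

```python
import numpy as np

def relative_frequencies(data, sample_space):
    """Hypothetical helper: share of observations equal to each value in sample_space."""
    data = np.asarray(data)
    # Count occurrences of every sample-space value, including values that never occur.
    counts = np.array([(data == value).sum() for value in sample_space])
    # Divide by the number of observations, not by the number of distinct values.
    return counts / data.size

# Short stand-in arrays (not the p and q from above).
a = np.array([1, 2, 2, 3, 5, 4, 2, 3])
b = np.array([2, 3, 2, 4, 2, 3])

space = np.union1d(a, b)  # sorted union of the distinct values
a_pdf = relative_frequencies(a, space)
b_pdf = relative_frequencies(b, space)

print(space)                     # shared sample space
print(a_pdf.sum(), b_pdf.sum())  # both should be 1 (up to rounding)
```

Running the same `.sum()` check on `p_pdf` and `q_pdf` would show whether they are proper distributions before any divergence is computed.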

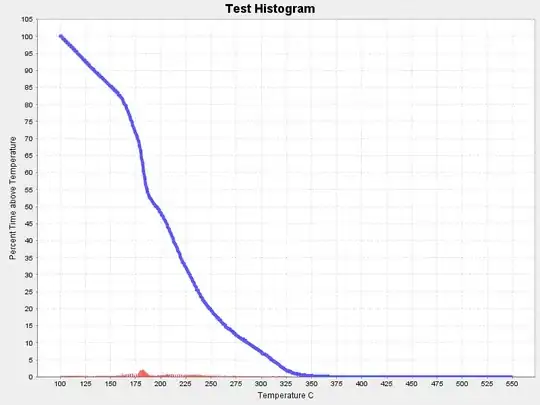

Then, I can plot the PDFs and the results are in line with my expectations:

    plt.figure()
    plt.plot(mx, q_pdf, 'g', label="Q")
    plt.plot(mx, p_pdf, 'r', label="P")
    plt.legend(loc="upper right")
    plt.show()

So, once I have the PDFs and have looked at them, I have a sense of what to expect from a divergence calculation. In this case, we should expect something much closer to 0 than to 1.

KL divergence

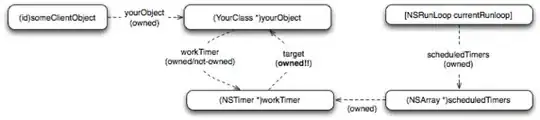

I followed this equation for KL divergence:

$$D_{KL}(P \,\|\, Q) = \sum_{x} P(x) \log \frac{P(x)}{Q(x)}$$

Accordingly, I created this simple function:

    def KL_divergence(P: np.ndarray, Q: np.ndarray):
        KL = 0
        for i, x in enumerate(P):
            # avoid dividing by 0 and avoid having 0 in math.log()
            if (Q[i] != 0) and (x != 0):
                KL += x * math.log(x / Q[i])
        return KL

Note that in my case P and Q are already well prepared (meaning they were calculated over the same sample space).
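For comparison, the same sum can be written without an explicit loop. This is a minimal sketch, not a replacement for the function above: `kl_divergence_vectorized` is a hypothetical name, and it keeps the same skip rule (entries where either value is 0 are ignored). Note that under the strict definition an entry with P > 0 and Q = 0 makes the divergence infinite, so skipping it is a simplification.

```python
import numpy as np

def kl_divergence_vectorized(P, Q):
    """Hypothetical vectorized variant: sum of P * log(P / Q) where both are nonzero."""
    P = np.asarray(P, dtype=float)
    Q = np.asarray(Q, dtype=float)
    mask = (P != 0) & (Q != 0)  # same skip rule as the loop version
    return float(np.sum(P[mask] * np.log(P[mask] / Q[mask])))

# Small stand-in distributions over three events.
P = np.array([0.5, 0.5, 0.0])
Q = np.array([0.25, 0.5, 0.25])

# Only the first entry contributes: 0.5 * log(0.5 / 0.25) = 0.5 * log(2).
print(kl_divergence_vectorized(P, Q))
```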

I compared my calculation with built-in functions:

    from scipy.special import kl_div, rel_entr

    print("KL divergence of p and q : {}".format(KL_divergence(p_pdf, q_pdf)))
    kl_divergence = kl_div(p_pdf, q_pdf)
    print("KL divergence (lib) of p and q : {}".format(sum(kl_divergence)))
    print("KL divergence (lib2) of p and q: {}".format(sum(rel_entr(p_pdf, q_pdf))))

and I get the following output:

    KL divergence of p and q : 0.4900499180923177
    KL divergence (lib) of p and q : 0.09004991809231755
    KL divergence (lib2) of p and q: 0.4900499180923177

rel_entr() gives the same value as my function, but kl_div() gives something totally different.

What do you think? Which one is the right one and why?
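One way to probe the difference: according to the SciPy documentation, `rel_entr(x, y)` is `x * log(x / y)` elementwise, while `kl_div(x, y)` is `x * log(x / y) - x + y`. Summed over all entries, the extra terms cancel only when both arrays have the same total. The sketch below illustrates this with stand-in arrays `x` and `y` that deliberately do not sum to 1; they are assumptions for the example, not the `p_pdf` and `q_pdf` from above.

```python
import numpy as np
from scipy.special import kl_div, rel_entr

# Stand-in arrays that deliberately do NOT sum to 1.
x = np.array([0.2, 0.6, 0.4])  # sums to 1.2
y = np.array([0.3, 0.3, 0.2])  # sums to 0.8

sum_rel_entr = rel_entr(x, y).sum()  # sum of x * log(x / y)
sum_kl_div = kl_div(x, y).sum()      # sum of x * log(x / y) - x + y

# The two sums differ by exactly sum(y) - sum(x).
print(sum_kl_div - sum_rel_entr, y.sum() - x.sum())

# After normalizing both arrays to sum to 1, the two sums agree.
xn, yn = x / x.sum(), y / y.sum()
print(rel_entr(xn, yn).sum(), kl_div(xn, yn).sum())
```

In the output above, the two library results differ by 0.4, which would be consistent with `p_pdf` and `q_pdf` summing to different totals.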

JS divergence

Since JS divergence is a normalized/balanced version of KL divergence, I also calculated that and compared it to built-in functions.

I found two slightly different definitions. One simply computes the KL divergence in both directions and averages the two.

The other one, from Wikipedia, also uses a mixture distribution $M$ and is described as

$$JSD(P \,\|\, Q) = \frac{1}{2} D_{KL}(P \,\|\, M) + \frac{1}{2} D_{KL}(Q \,\|\, M), \qquad M = \frac{1}{2}(P + Q)$$

Accordingly, I have the following implementations for JS (using my KL function):

    # the simple JS
    def JS_divergence(P: np.ndarray, Q: np.ndarray):
        KL_P_Q = KL_divergence(P, Q)
        KL_Q_P = KL_divergence(Q, P)
        JS = (KL_P_Q + KL_Q_P) / 2
        return JS

    # Wikipedia version
    def mod_JS_divergence(P: np.ndarray, Q: np.ndarray):
        # create M
        M = (P + Q) / 2  # sum the two distributions then get average
        KL_P_Q = KL_divergence(P, M)
        KL_Q_P = KL_divergence(Q, M)
        JS = (KL_P_Q + KL_Q_P) / 2
        return JS

And this is the code to get the results, including the built-in function:

    from scipy.spatial.distance import jensenshannon

    print("JS divergence of p and q : {}".format(JS_divergence(p_pdf, q_pdf)))
    print("mod JS divergence of p and q : {}".format(mod_JS_divergence(p_pdf, q_pdf)))
    js_divergence = jensenshannon(p_pdf, q_pdf)
    print("JS divergence (lib) of p and q : {}".format(js_divergence))

Output:

    JS divergence of p and q : 0.08763662020764684
    mod JS divergence of p and q : 0.021872274274735898
    JS divergence (lib) of p and q : 0.041044079757403054

I am now more concerned about my JS divergence calculations, as neither of my functions returns the same value as the built-in one.

My question is again the same: what am I doing wrong? How does the built-in function differ from my calculations? Does anyone have an idea?
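Two details from the SciPy documentation may be relevant here: `scipy.spatial.distance.jensenshannon` returns the Jensen-Shannon distance, which is the square root of the divergence, and it normalizes both inputs to sum to 1 before computing anything. The sketch below checks that relationship on stand-in arrays; `js_divergence` is a hypothetical helper written for this example (mixture-based definition, natural logarithm), not one of the functions above.

```python
import numpy as np
from scipy.spatial.distance import jensenshannon
from scipy.special import rel_entr

def js_divergence(P, Q):
    """Hypothetical helper: mixture-based JS divergence (natural log), inputs normalized first."""
    P = np.asarray(P, dtype=float)
    Q = np.asarray(Q, dtype=float)
    P, Q = P / P.sum(), Q / Q.sum()  # mirror the normalization SciPy applies
    M = (P + Q) / 2                  # mixture distribution
    return 0.5 * rel_entr(P, M).sum() + 0.5 * rel_entr(Q, M).sum()

# Stand-in arrays that do not sum to 1 (not the p_pdf and q_pdf from above).
x = np.array([2.0, 6.0, 4.0])
y = np.array([3.0, 3.0, 2.0])

distance = jensenshannon(x, y)    # SciPy returns the JS distance
divergence = js_divergence(x, y)  # mixture-based JS divergence

# Squaring the distance should recover the divergence.
print(distance ** 2, divergence)
```

If this holds for the data above, comparing `jensenshannon(p_pdf, q_pdf) ** 2` against a mixture-based divergence computed on normalized inputs would be the like-for-like comparison.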