I'm trying to set up an Amazon Kinesis Data Analytics application that writes data from a Kinesis data stream to Amazon Keyspaces. To do this, I'm using Flink's Cassandra connector.

The application reads messages from the stream, groups them by a key, aggregates them over a 15-minute window, and writes the resulting records to Keyspaces.
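To make the pipeline concrete, here is a minimal plain-Scala model (no Flink dependency) of what a keyed 15-minute tumbling window computes. It assumes tumbling windows with a zero offset; the `Msg` record and the sum aggregation are hypothetical, since the post does not show the real schema or aggregate. `windowStart` mirrors the arithmetic in Flink's `TimeWindow.getWindowStartWithOffset`.

```scala
// Hypothetical record type: the real message schema is not shown in the post.
final case class Msg(key: String, timestampMs: Long, value: Long)

object TumblingWindowModel {
  val WindowSizeMs: Long = 15L * 60 * 1000 // 15 minutes

  // Start of the tumbling window that contains `timestampMs`.
  // With offset 0, windows start at multiples of 15 minutes since the epoch (UTC).
  def windowStart(timestampMs: Long, offsetMs: Long = 0L, sizeMs: Long = WindowSizeMs): Long = {
    val remainder = (timestampMs - offsetMs) % sizeMs
    // Scala's % keeps the sign of the dividend, so shift negative remainders up.
    if (remainder < 0) timestampMs - (remainder + sizeMs) else timestampMs - remainder
  }

  // Group by (key, window start) and sum the values: one output row per key per window.
  def aggregate(msgs: Seq[Msg]): Map[(String, Long), Long] =
    msgs
      .groupBy(m => (m.key, windowStart(m.timestampMs)))
      .map { case (k, ms) => k -> ms.map(_.value).sum }
}
```

Because every key shares the same window boundaries (:00, :15, :30 and :45 UTC with a zero offset), the aggregated rows for all keys are emitted together when a window closes, which is consistent with the first writes appearing at a 15-minute mark.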

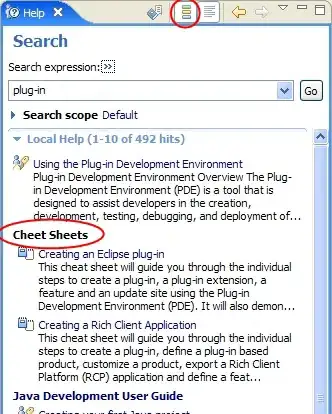

When I test the application locally, I have no issues and everything flows into the database. When the application is deployed to Kinesis Data Analytics, everything runs fine until the first database write, which happens at a multiple of 15 minutes past the hour. The first few writes to the database go through. Then the CPU usage on the sink spikes to 100% and stays there until the application is killed. I've attached screenshots of the flame graphs.

My `build.sbt`:

```scala
ThisBuild / version := "0.1.0"

ThisBuild / scalaVersion := "2.12.17"

lazy val root = (project in file("."))
  .settings(
    name := "admin-kinesis",
    idePackagePrefix := Some("my.package"),
    mainClass := Some("my.package.Main")
  )

val jarName = s"admin-kinesis.jar"

val flinkVersion = "1.15.2"

val kdaRuntimeVersion = "1.2.0"

ThisBuild / libraryDependencies ++= Seq(
  "software.amazon.awssdk" % "bom" % "2.20.26",
  "software.amazon.awssdk" % "secretsmanager" % "2.20.26",
  "com.amazonaws" % "aws-kinesisanalytics-runtime" % kdaRuntimeVersion,
  "org.apache.flink" % "flink-connector-kinesis" % flinkVersion,
  "org.apache.flink" %% "flink-connector-cassandra" % flinkVersion,
  "org.apache.flink" % "flink-streaming-java" % flinkVersion,
  "org.apache.flink" %% "flink-scala" % flinkVersion,
  "org.apache.flink" %% "flink-streaming-scala" % flinkVersion,
  "org.apache.flink" % "flink-clients" % flinkVersion,
  "org.apache.flink" % "flink-runtime-web" % flinkVersion,
  "org.slf4j" % "slf4j-api" % "2.0.7",
  "org.slf4j" % "slf4j-simple" % "2.0.7",
  "com.typesafe.play" % "play-json_2.12" % "2.9.4",
  "software.aws.mcs" % "aws-sigv4-auth-cassandra-java-driver-plugin" % "4.0.6",
  "com.codahale.metrics" % "metrics-core" % "3.0.2",
  "com.datastax.cassandra" % "cassandra-driver-extras" % "3.11.3",
  "com.chuusai" %% "shapeless" % "2.3.10"
)

artifactName := { (_: ScalaVersion, _: ModuleID, _: Artifact) => jarName }

assembly / mainClass := Some("my.package.Main")

assembly / assemblyJarName := jarName

assembly / assemblyMergeStrategy := {
  case PathList("META-INF", _*) => MergeStrategy.discard
  case _                        => MergeStrategy.first
}

assembly / assemblyShadeRules := Seq(
  ShadeRule.rename(("com.google", "org.apache.flink.cassandra.shaded.com.google"))
    .inLibrary("org.apache.flink" %% "flink-connector-cassandra" % flinkVersion)
)
```

My Cassandra sink configuration:

```scala
def addSink[T](forType: DataStream[T], query: String, applicationProperties: java.util.Map[String, Properties]): ConnectorCassandraSink[T] = {
  ConnectorCassandraSink.addSink(forType)
    .setMaxConcurrentRequests(100)
    .setFailureHandler(new CassFailureHandler())
    .setQuery(query)
    .setClusterBuilder((builder: Cluster.Builder) => {
      builder
        .withCodecRegistry(
          CodecRegistry.DEFAULT_INSTANCE
            .register(InstantCodec.instance)
            .register(LocalDateCodec.instance)
        )
        .addContactPoint(Constants.CassandraHost)
        .withPort(9142)
        .withSSL()
        .withCredentials(
          applicationProperties.get("ProducerConfigProperties").getProperty("keyspaces.user"),
          applicationProperties.get("ProducerConfigProperties").getProperty("keyspaces.pass")
        )
        .withReconnectionPolicy(new ExponentialReconnectionPolicy(1 * 1000, 60 * 1000))
        .withRetryPolicy(new LoggingRetryPolicy(DefaultRetryPolicy.INSTANCE))
        .withLoadBalancingPolicy(
          DCAwareRoundRobinPolicy
            .builder()
            .withLocalDc("us-west-2")
            .build()
        )
        .withQueryOptions(
          new QueryOptions()
            .setConsistencyLevel(ConsistencyLevel.LOCAL_QUORUM)
            .setPrepareOnAllHosts(true)
        )
        .build()
    })
    .setMapperOptions(() => Array(Mapper.Option.saveNullFields(true)))
    .build()
}
```
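As far as I can tell from Flink's `CassandraSinkBase`, `setMaxConcurrentRequests(100)` caps how many asynchronous writes can be in flight at once: each record waits for a free slot (a semaphore permit, up to a timeout), and a checkpoint flush waits until every slot is free again. The following is a simplified plain-Scala model of that behavior under those assumptions, not Flink's code; `BoundedWriter`, `send`, `flush`, and `peakInFlight` are hypothetical names, and `write` stands in for the real Keyspaces call.

```scala
import java.util.concurrent.{Semaphore, TimeUnit, TimeoutException}
import java.util.concurrent.atomic.AtomicInteger
import scala.concurrent.{ExecutionContext, Future}

// Hypothetical model of a sink that allows at most `maxConcurrentRequests` writes in flight.
final class BoundedWriter(maxConcurrentRequests: Int, acquireTimeoutMs: Long)(implicit ec: ExecutionContext) {
  private val permits  = new Semaphore(maxConcurrentRequests)
  private val inFlight = new AtomicInteger(0)
  private val peak     = new AtomicInteger(0)

  // Highest number of writes observed in flight at the same time.
  def peakInFlight: Int = peak.get()

  def send(write: () => Unit): Future[Unit] = {
    // Block the caller until a slot is free, or fail after the timeout.
    if (!permits.tryAcquire(acquireTimeoutMs, TimeUnit.MILLISECONDS))
      throw new TimeoutException(s"No free slot after $acquireTimeoutMs ms")
    val now = inFlight.incrementAndGet()
    peak.accumulateAndGet(now, (a: Int, b: Int) => math.max(a, b))
    val result = Future(write()) // stand-in for the async driver call
    result.onComplete { _ =>
      inFlight.decrementAndGet()
      permits.release() // free the slot whether the write succeeded or failed
    }
    result
  }

  // Like a flush on checkpoint: wait until every permit is back, i.e. nothing is in flight.
  def flush(timeoutMs: Long): Unit = {
    if (!permits.tryAcquire(maxConcurrentRequests, timeoutMs, TimeUnit.MILLISECONDS))
      throw new TimeoutException("Writes still in flight")
    permits.release(maxConcurrentRequests)
  }
}
```

When a burst of window results arrives at once, at most `maxConcurrentRequests` writes are outstanding in this model and the remaining callers block in `tryAcquire`, so the caller's throughput is bounded by how quickly the database acknowledges writes.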

Has anyone run into a similar issue with Kinesis Data Analytics applications, or does anyone know where I should start debugging?

Thanks!