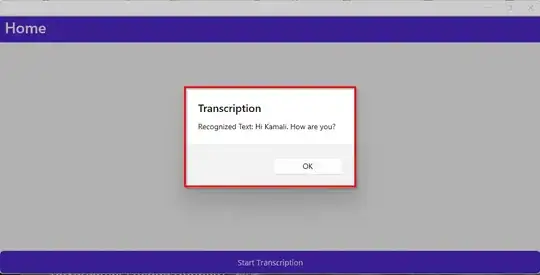

I am trying to build a simple speech-to-text Android application using .NET MAUI, but I always get `Microsoft.CognitiveServices.Speech.ResultReason.NoMatch` as the result.

When I run the same code in a console application, it works as expected and the result contains the spoken text.

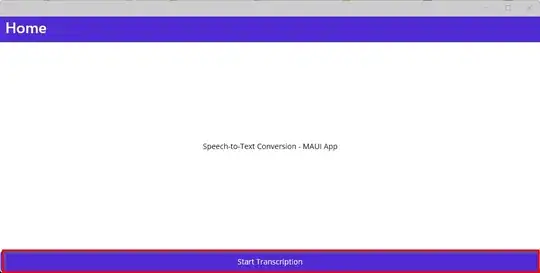

Below is the code I am using in MAUI:

```csharp
async void TranscribeClicked(object sender, EventArgs e)
{
    // Ask the user for microphone permission (micService is not shown in this snippet)
    bool isMicEnabled = await micService.GetPermissionAsync();

    var audioConfig = AudioConfig.FromDefaultMicrophoneInput();

    if (!isMicEnabled)
    {
        UpdateTranscription("Please grant access to the microphone!");
        return;
    }

    var config = SpeechConfig.FromSubscription("SubscriptionKey", "ServiceRegion");
    config.SpeechSynthesisVoiceName = "en-US-AriaNeural";

    // autoDetectSourceLanguageConfig is not defined in this snippet
    using (var recognizer = new SpeechRecognizer(config, autoDetectSourceLanguageConfig))
    {
        var result = await recognizer.RecognizeOnceAsync().ConfigureAwait(false);

        if (result.Reason == ResultReason.RecognizedSpeech)
        {
            var lidResult = AutoDetectSourceLanguageResult.FromResult(result);
            UpdateTranscription(result.Text);
        }
        else if (result.Reason == ResultReason.NoMatch)
        {
            UpdateTranscription("NOMATCH: Speech could not be recognized.");
        }
    }
}
```

I am not sure what is missing, since the same code works in a console application.