I'm dealing with a relatively large dataset (a CSV with ~16M unique ids, 8 features, etc.).

    import dask.dataframe as dd

    db_dual = dd.read_csv('./file.csv', blocksize='64MB', sep=',', decimal='.', low_memory=True, usecols=include, dtype={"id": "string", "cc": "string", "pd": "string", "cs": "string", "coms": "string", "cap": "string", "in": "string", "ncn": "string"})

I want to group the dataset and apply this function to each group:

    def vDual(df):
        # FD
        if len(df) == 1 and (df['cc'].eq('DUAL')).any():
            df['FD'] = 1
        # VD
        if len(df) == 2 and (df['cs'].eq('E')).any() and (df['cs'].eq('G')).any():
            df['VD'] = 1
        return df
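To make the intended behaviour concrete, here is a minimal pandas-only sketch of the same per-group logic on a tiny toy frame. The rows are made up for illustration (they are not the real data), and the grouping uses only `cf` instead of the five key columns to keep it short:

```python
import pandas as pd


def vDual(df):
    # Work on a copy so the toy input frame is left untouched.
    df = df.copy()
    # FD: the group has exactly one row and that row is 'DUAL'.
    if len(df) == 1 and df["cc"].eq("DUAL").any():
        df["FD"] = 1
    # VD: the group has exactly two rows, one 'E' and one 'G'.
    if len(df) == 2 and df["cs"].eq("E").any() and df["cs"].eq("G").any():
        df["VD"] = 1
    return df


# Hypothetical toy rows: group A is a single DUAL row, group B is an E/G pair,
# group C matches neither rule.
toy = pd.DataFrame({
    "id": ["1", "2", "3", "4"],
    "cf": ["A", "B", "B", "C"],
    "cc": ["DUAL", "X", "X", "X"],
    "cs": ["E", "E", "G", "E"],
})

# Apply the function group by group and stitch the pieces back together.
result = pd.concat(vDual(group) for _, group in toy.groupby("cf"))
print(result)
```

Because each group only gets the column whose rule it matches, the concatenated result has missing values in `FD` and `VD` wherever a rule did not fire.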

In dask I'm using:

    db_dual = db_dual.groupby(['cf','coms','cap','in','ncn']).apply(vDual, meta={'FD': 'int', 'VD': 'int'})

    db_dual.compute()

The problem is that this command consumes a lot of RAM, which eventually saturates memory and crashes the script.
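For comparison, the two flags depend only on the group size and on whether certain values occur in the group, so they can also be written without a per-group Python function. The following is a rough pandas sketch on invented toy rows, not a tested fix for the memory problem; it also differs from `vDual` in that it writes 0 instead of leaving the flag missing, and the helper column names `is_dual`, `is_e` and `is_g` are made up for this example:

```python
import pandas as pd

keys = ["cf", "coms", "cap", "in", "ncn"]

# Hypothetical toy rows; only 'cf' varies so the groups are easy to follow.
toy = pd.DataFrame({
    "cf":   ["A", "B", "B", "C"],
    "coms": ["x", "x", "x", "x"],
    "cap":  ["1", "1", "1", "1"],
    "in":   ["a", "a", "a", "a"],
    "ncn":  ["n", "n", "n", "n"],
    "cc":   ["DUAL", "X", "X", "X"],
    "cs":   ["E", "E", "G", "E"],
})

# Precompute the row-level tests as boolean helper columns.
flags = toy.assign(
    is_dual=toy["cc"].eq("DUAL"),
    is_e=toy["cs"].eq("E"),
    is_g=toy["cs"].eq("G"),
)
grouped = flags.groupby(keys)

# Broadcast the per-group size and per-group "any" back onto every row.
size = grouped["cc"].transform("size")
has_dual = grouped["is_dual"].transform("any")
has_e = grouped["is_e"].transform("any")
has_g = grouped["is_g"].transform("any")

toy["FD"] = ((size == 1) & has_dual).astype(int)
toy["VD"] = ((size == 2) & has_e & has_g).astype(int)
print(toy[["cf", "cc", "cs", "FD", "VD"]])
```

Whether this form behaves better in dask on the full dataset is an open assumption; grouping on five columns may still require moving a lot of data between partitions.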

I'm running the script in Google Colab.

Any idea? What am I doing wrong?

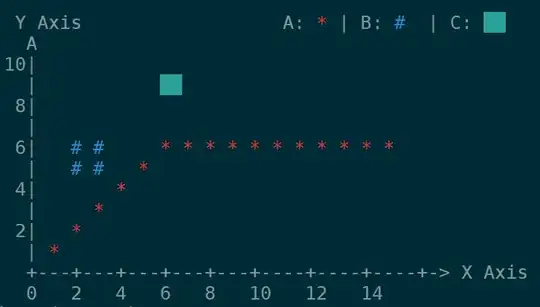

Input Data

Expected Output:

PS: The approach works perfectly on a subset of the data, but it does not scale to the full dataset.

Thanks