Because it is probably doing nothing.

I get the same timings, with numba, for this function:

    def test3(x):
        pass

Note that test does almost nothing either. Those are just slices, without any action associated with them. So, no data transfer or anything.

Just the creation of 3 variables, and some boundary tweaks.

If the code were about an array of 5000000 elements, and slices of 1000000 elements from it, it wouldn't be slower. Hence the reason, I suppose, why, when you wanted to scale things up to a "bigger" case, you decided not to increase the data size (because you probably knew that data size was not relevant here), but to increase the number of lines.
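A minimal sketch of why data size is not relevant here: a basic numpy slice is a view on the same buffer, so nothing is copied whatever the slice length. The array name and sizes below are just illustrative.

```python
import numpy as np

big = np.zeros(5_000_000)
view = big[1_000_000:2_000_000]   # 1000000 elements, but no data is copied

# The slice only records a start pointer, a length and a stride
print(np.shares_memory(big, view))
print(view.base is big)

# Writing through the view changes the original array
view[0] = 42.0
print(big[1_000_000])
```

Creating the view costs about the same for 3 elements or for 1000000, since only the slice metadata is built.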

But, well, test, even doing almost nothing, is still creating these 3 slices, which are then never used.

Whereas numba compiles generated low-level code (it goes through LLVM rather than C, but the reasoning is the same). And a compiler, with an optimizer, has no reason to keep slice variables that are never used afterward.

I am totally speculating here (I've never seen numba's generated code). But I imagine that, written as C, the code could look like

    void test2(double *x, int xstride, int xn){
        double *k = x+1*xstride;
        int kstride=xstride;
        int kn=1;
        double *l=x;
        int lstride=xstride;
        int ln=3;
        double *m=x;
        int mstride=xstride;
        int mn=1;
        // And then it would be possible, from there, to iterate over those slices
        // for example k[:]=m[:] could generate this code
        // for(int i=0; i<kn; i++) k[i*kstride] = m[i*mstride];
    }

(Here I express strides in units of double * arithmetic, when in reality strides are in bytes, but it doesn't matter, this is just pseudocode)

My point is, if there were something afterward (like what I put in the comment), then this code, even if it is just a few arithmetic operations, would still be "almost nothing, but not nothing".

But there is nothing afterward. So, it is just some local variable initialization, in code that clearly has no side effect. It is very easy for the compiler's optimizer to just drop all that code, and compile an empty function, which has exactly the same effect and result.

So, again, just speculation on my part. But any decent code generator+compiler should just compile an empty function for test2. So test2 and test3 are the same thing.

An interpreter, on the other hand, usually does not do this kind of optimization (first, it is harder to know in advance what is coming, and second, the time spent optimizing is spent at runtime, so there is a tradeoff, whereas, for a compiler, even if it takes 1 hour of compile time to save 1 ns of runtime, it is still worth it).

Edit: some more experiments

The idea that jared and I both had, that is, doing something, whatever it is, to force the slices to exist, and comparing what happens with numba when it has to do something, and therefore really has to do the slices, is natural. The problem is that as soon as you start doing something, anything, the timing of the slices themselves becomes negligible. Because slicing is nothing.

But, well, statistically you can remove that and still measure somehow the "slicing" part.

Here is some timing data

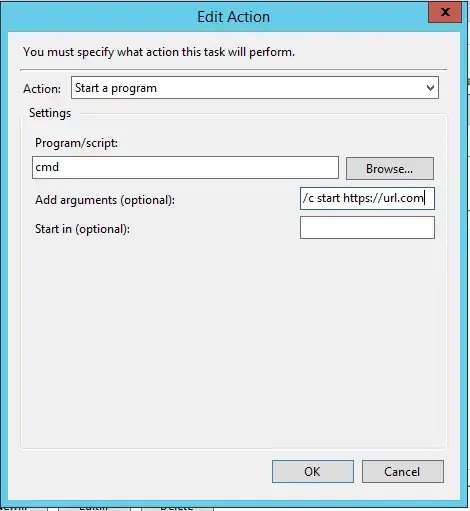

Empty function

On my computer, an empty function costs 130 ns in pure python. And 540 ns with numba.

Which is not surprising. Doing nothing, but doing so while crossing the "python/C frontier", probably costs a bit, just for that crossing.

Not much tho

Time vs number of slices

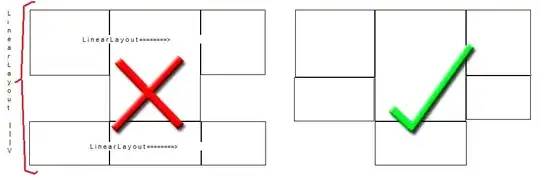

The next experiment is the exact one you made. By the way, your post contains its own proof of my answer: you already saw that in pure python the time is O(n), n being the number of slices, whereas in numba it is O(1). That alone proves that there is no slicing at all occurring. If the slicing were done, in numba as on any other non-quantum computer :D, the cost would have to be O(n). Now, of course, if it is t=100+0.000001*n, it might be hard to distinguish O(n) from O(1). Hence the reason why I started by evaluating the "empty" case.

In pure python, slicing only, with an increasing number of slices, is obviously O(n), indeed:

A linear regression says that this is roughly 138+n×274, in ns.

Which is consistent with "empty" time
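For anyone who wants to reproduce this kind of fit, here is a minimal sketch of one way to do it in pure python. It is not my exact benchmark script: make_slicer and time_per_call_ns are hypothetical helpers written for this illustration, and the slice bounds are arbitrary.

```python
import timeit
import numpy as np

def make_slicer(n):
    """Build a function whose body is n independent, unused slices."""
    lines = ["def slicer(x):"]
    lines += [f"    s{i} = x[{i}:{i + 3}]" for i in range(n)]
    if n == 0:
        lines.append("    pass")
    namespace = {}
    exec("\n".join(lines), namespace)
    return namespace["slicer"]

def time_per_call_ns(func, x, number=5000, repeat=5):
    """Best-of-repeat time per call, in nanoseconds."""
    best = min(timeit.repeat(lambda: func(x), number=number, repeat=repeat))
    return best / number * 1e9

x = np.arange(1000.0)
counts = np.arange(0, 21, 5)
timings = np.array([time_per_call_ns(make_slicer(int(n)), x) for n in counts])

# Degree 1 fit: timings ~ intercept + slope * n
slope, intercept = np.polyfit(counts, timings, 1)
print(f"roughly {intercept:.0f} + n x {slope:.0f}, in ns")
```

The intercept includes the overhead of the lambda used for timing, so it will be a bit above the cost of a bare empty function; the slope is the part that matters.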

For numba, on the other hand, we get

So no need for a linear regression to prove that

- It is indeed O(1)

- Timing is consistent with the 540 ns of "empty" case

Note that this means that, for n=2 or more slices, on my computer, numba becomes competitive. Before, it is not.

But, well, competition in doing "nothing"...

With usage of slices

If we add code afterward to force the usage of the slices, of course, things change. The compiler can't just remove the slices.

But we have to be careful

- To avoid having an O(n) term in the operation itself

- To distinguish the timing of the operation from the, probably negligible, timing of the slicing

What I did then is compute some addition slice1[1]+slice2[2]+slice3[3]+...

But whatever the number of slices, I have 1000 terms in this addition. So for n=2 (2 slices), that addition is slice1[1]+slice2[2]+slice1[3]+slice2[4]+... with 1000 terms.

That should help remove the O(n) part due to that addition. And then, with enough data, we can extract some value from the variations around this, even tho the variations are quite negligible compared to the addition time itself (and therefore even compared to the noise of that addition time. But with enough measurements, that noise becomes low enough to start seeing things).
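The addition described above can be sketched like this in pure python. This is an illustration of the round-robin idea, not my actual benchmark code: round_robin_sum is a hypothetical name, and I assume here that all slices have the same bounds, which makes the result independent of n and easy to check.

```python
import numpy as np

TERMS = 1000  # number of terms in the addition, whatever the number of slices

def round_robin_sum(x, n):
    # The part being studied: creating n slices (same bounds, by assumption)
    slices = [x[0:TERMS + 1] for _ in range(n)]
    # The constant part: always TERMS additions, cycling through the slices
    # slice1[1] + slice2[2] + ... + slice1[n+1] + ...
    total = 0.0
    for i in range(1, TERMS + 1):
        total += slices[(i - 1) % n][i]
    return total

x = np.arange(2000.0)
print(round_robin_sum(x, 2))                           # 500500.0
print(round_robin_sum(x, 2) == round_robin_sum(x, 7))  # True
```

The loop over the 1000 terms does the same work for every n, so any growth of the timing with n comes from building the slices.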

In pure python

A linear regression gives 199000 + 279×n ns

What we learn from this, is that my experimental setup is ok. 279 is close enough to the previous 274 to say that, indeed, the addition part, as huge as it is (200000 ns) is O(1), since the O(n) part remained unchanged compared to slicing only. So we just have the same timing as before + a huge constant for the addition part.

With numba

All that was just the preamble to justify the experimental setup. Now comes the interesting part, the experiment itself

Linear regression gives 1147 + 1.3×n ns

So, here, it is indeed O(n).

Conclusion

1. Slicing in numba does cost something. It is O(n). But when the slices are not used, the compiler just removes them, and we get an O(1) operation. That is proof that the reason was, indeed, that in your version the numba code is simply doing nothing.

2. The cost of the operation, whatever it is, that you do with the slices to force them to be used, and to prevent the compiler from just removing them, is way bigger, which, without statistical precautions, masks the O(n) part. Hence the feeling that "it is the same when we use the variable".

3. Anyway, numba is faster than numpy most of the time.

I mean, numpy is a good way to have "compiled language speed", without using compiled language. But it does not beat real compilation.

So, it is quite classical to have a naive algorithm in numba beating a very smart vectorization in numpy (classical, and very disappointing for someone like me, who made a living being the guy who knows how to vectorize things in numpy. Sometimes, I feel that with numba, the most naive nested for loops are better).

It stops being so, tho, when

- Numpy makes use of several cores (you can do that with numba too. But not just with naive algorithms)

- You are doing operations for which a very smart algorithm exists. Numpy algorithms have decades of optimizations. Can't beat that with 3 nested loops. Except that some tasks are so simple that they can't really be optimized.

So, I still prefer numpy over numba. I prefer to use the decades of optimizations behind numpy rather than reinvent the wheel in numba. Plus, sometimes it is preferable not to rely on a compiler.

But, well, it is classical to have numba beating numpy.

Just, not with the ratios of your case. Because in your case, you were comparing (as I think I've proven now, and as you'd proven yourself, by seeing that the numpy case was O(n) when the numba case was O(1)) "doing slices with numpy vs doing nothing with numba".