I have a for loop running in databricks and the first iterations run fast, then it gets slower and then it doesn't proceeds at all. While I know that is common in for loops if data size is increasing on each iteration and/or there's garbage variables not being deleted, at least I should see that either RAM/CPU/DISK/NETWORK are nearing 100%, right? but they are not, in fact they are not used at all. And also, at least I hoped to see spark Jobs being processed at SparkUI but there are none when the for loop gets stuck. Despite the notebook cell says the cell is still running.

So do I have some resource that got clugged that is neither RAM/CPU/DISK/NETWORK? And why spark shows no running jobs despite the for is still running?

Also, spark UI shows there's no job running, despite the notebook says the command is still running, and I know for sure it hasn't finished because I should see files getting created on AWS S3.

My case is that I am trying to generate some dummy data for testing. My goal is to create many csv files of the same dataframe schema that vary just in a datestamp column, so to emulate incremental load scenario. My goal is to have 400 csv that are essentially the same dataframe but with the datestamp column changing by 1 day, as if I received a file on Jan 01, another on Jan 02, another in Jan 03 and so on for 400 days.

For that, I have my "base" dataframe input_df, and I have a for loop in databricks that reads from it, increases the id column and datestamp column (as if they were new rows) and writes into S3 with a datestamp string. Here is the loop

another=input_df

for i in range(400):

# I get the min and max values of column "id" to then add the ids for the new df

aggs=another.agg(max('id'),min('id'))

max_id=aggs.collect()[0][0]

min_id=aggs.collect()[0][1]

# Here I add the id column to emulate "new data" and the datestamp column

another=another.withColumn('id',col('id')+max_id-min_id+1).\

withColumn('created_time',date_add(col('created_time'),1)).\

withColumn('created_time',date_format(col("created_time"), "yyyy-MM-dd'T'HH:mm:ss.SSSZ"))

#here I create the file name by using the datestamp

date_procesed=datetime.strptime('20220112','%Y%m%d') + timedelta(days=i+1)

date_procesed=date_procesed.strftime('%Y%m%d')

print(date_procesed)

#And here I write it in a single csv file

another.coalesce(1).write.option('header','true').csv('dbfs:/tmp/wiki/transaction/'+date_procesed)

Now the cell of the notebook runs for about 11 files (that created and completed about 40 jobs) and then stops. I thought some resource was nearing capacity. But this is my problem

- At Spark UI no job is running. As if the notebook is not even creating more jobs

- All the first 40 jobs created my the first 11 iterations of the loop are completed (and I see the files written on S3)

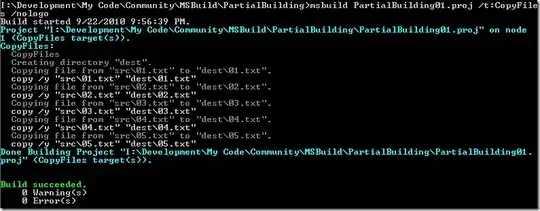

- At GangliaUI I see only the driver is doing everything (which is expected cause my input_df was created using the

randlibrary. But neither its CPU/RAM/NETWORK/DISK are full, and they are not even fluctuating for nearly an hour

here's a picture of the driver at ganglia. you can see the resources (CPU,RAM,NETWORK) kind of do spike at some point in time (when the for loop was actually working, creating and completing spark jobs). But then they downsized and are stable, but the for loop stops and I know it shouldn't .

My ultimate questions are

- Do I have some resource that got clugged that is neither RAM/CPU/DISK/NETWORK?

- And why spark shows no running jobs despite the for is still running? Why this for loop

- doesn't works? I know they are not good practice in python, let alone in spark. But I have no answer why it's not processing

Also as a side note, I noticed this on processes running on the driver (despite there's no spar job running) So I suspect spark is not closing some ports/connections or declaring processes as done