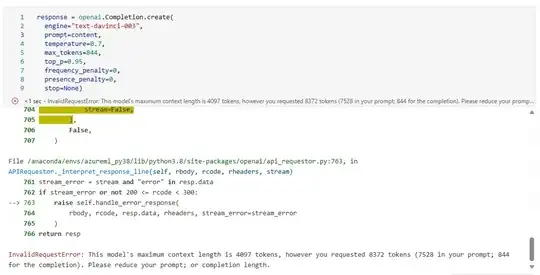

I am calling the OpenAI API with the details below and getting the following response:

    {'value': {'outputs': [{'finishReason': 'LENGTH', 'text': '\n\nThe summary in this JSON format is as follows:\n\nshort_', 'generationTimestamp': 1689303489, 'trackingId': "Ê.\x00Õ!07´a'"}], 'modelId': 'TEXT_ADA_001'}}
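
The `finishReason` field is what signals that the output was cut off. A minimal sketch, assuming the response always has the nested shape shown above (`value` → `outputs` → list of dicts with `text` and `finishReason`), that pulls out the generated text and flags truncation. It assumes this API's `'LENGTH'` value mirrors OpenAI's `finish_reason` of `length`, meaning generation hit the token limit rather than finishing naturally. `extract_output` is a hypothetical helper name.

```python
from typing import Any, Dict, Tuple


def extract_output(response: Dict[str, Any]) -> Tuple[str, bool]:
    """Return (text, truncated) for the first output in the response.

    Assumes the shape shown above: response["value"]["outputs"] is a list
    of dicts carrying "text" and "finishReason" keys.
    """
    output = response["value"]["outputs"][0]
    text = output.get("text", "")
    # 'LENGTH' (assumed to mirror OpenAI's 'length') means the token limit
    # was reached before the model finished its answer.
    truncated = output.get("finishReason", "").upper() == "LENGTH"
    return text, truncated


# Trimmed copy of the response from the question (trackingId omitted).
sample = {
    "value": {
        "outputs": [
            {
                "finishReason": "LENGTH",
                "text": "\n\nThe summary in this JSON format is as follows:\n\nshort_",
                "generationTimestamp": 1689303489,
            }
        ],
        "modelId": "TEXT_ADA_001",
    }
}

text, truncated = extract_output(sample)
print(truncated)  # True
```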

My parameters:

    {
      "model_max_tokens": 1024,
      "model_id": "TEXT_DAVINCI_003",
      "model_temperature": 0
    }

The prompt text length is 5903.
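
For OpenAI completion models, the prompt tokens plus `max_tokens` must fit inside the model's context window, which OpenAI documented as 4,097 tokens for text-davinci-003 and 2,049 tokens for text-ada-001. The sketch below does that budget arithmetic under several assumptions: that 5903 is a character count, that roughly 4 characters make one token (a common rule of thumb for English text; an exact count needs the model's tokenizer, for example the `tiktoken` library), and that this API's `model_id` values map to those OpenAI models. The helper names are hypothetical.

```python
import math

# Context window (prompt + completion) of the OpenAI models that the
# model IDs in the question appear to correspond to (an assumption).
CONTEXT_WINDOW = {
    "TEXT_DAVINCI_003": 4097,
    "TEXT_ADA_001": 2049,
}

CHARS_PER_TOKEN = 4  # rough heuristic for English text, not an exact count


def estimate_tokens(num_chars: int) -> int:
    """Approximate a token count from a character count."""
    return math.ceil(num_chars / CHARS_PER_TOKEN)


def max_completion_tokens(model_id: str, prompt_chars: int) -> int:
    """Largest max_tokens that still fits in the model's context window."""
    remaining = CONTEXT_WINDOW[model_id] - estimate_tokens(prompt_chars)
    return max(remaining, 0)


print(estimate_tokens(5903))                            # 1476
print(max_completion_tokens("TEXT_DAVINCI_003", 5903))  # 2621
print(max_completion_tokens("TEXT_ADA_001", 5903))      # 573
```

By this estimate, `"model_max_tokens": 1024` fits within TEXT_DAVINCI_003's window but not within TEXT_ADA_001's, which is the model the response reports.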

The response is not a complete message, and finishReason is LENGTH. How can I handle this and get the desired result?
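
Besides raising `model_max_tokens` (within the context budget) or shortening the prompt, a common way to handle a `LENGTH` finish is to ask the model to continue: append the partial output to the prompt and call again until the finish reason is no longer `LENGTH`. A minimal sketch of that continuation loop follows. `call_model` is a hypothetical stand-in for whatever client function produced the response above, assumed to take a prompt string and return a dict of the same shape; `fake_call_model` and its `"STOP"` value exist only for illustration. Each round re-sends the growing prompt, so it still has to fit in the context window.

```python
from typing import Any, Callable, Dict

# Hypothetical client signature: prompt in, response dict out, shaped like
# {"value": {"outputs": [{"text": ..., "finishReason": ...}]}}.
ModelCall = Callable[[str], Dict[str, Any]]


def complete_with_continuation(call_model: ModelCall, prompt: str, max_rounds: int = 5) -> str:
    """Keep requesting continuations while the model stops on 'LENGTH'."""
    generated = ""
    for _ in range(max_rounds):
        # Re-send the original prompt plus everything generated so far,
        # so the model picks up where the previous call was cut off.
        output = call_model(prompt + generated)["value"]["outputs"][0]
        generated += output.get("text", "")
        if output.get("finishReason", "").upper() != "LENGTH":
            break  # finished for a reason other than the token limit
    return generated


# Fake client for illustration: truncates once, then finishes.
def fake_call_model(full_prompt: str) -> Dict[str, Any]:
    if full_prompt.endswith("short_"):
        return {"value": {"outputs": [{"text": "summary\": \"...\"}", "finishReason": "STOP"}]}}
    return {"value": {"outputs": [{"text": "{\"short_", "finishReason": "LENGTH"}]}}


result = complete_with_continuation(fake_call_model, "Summarize as JSON: ...")
print(result)  # {"short_summary": "..."}
```

The `max_rounds` cap keeps a model that never stops on its own from looping indefinitely.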